
Quantum AI

AI is a very broad concept. Within that immense scope, my personal interest lies in deep learning. Accordingly, when I first heard the term Quantum AI, my attention was immediately drawn to how a quantum computer could implement deep learning.

Challenging questions

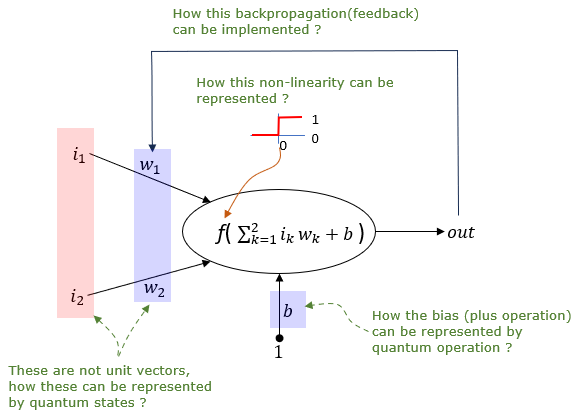

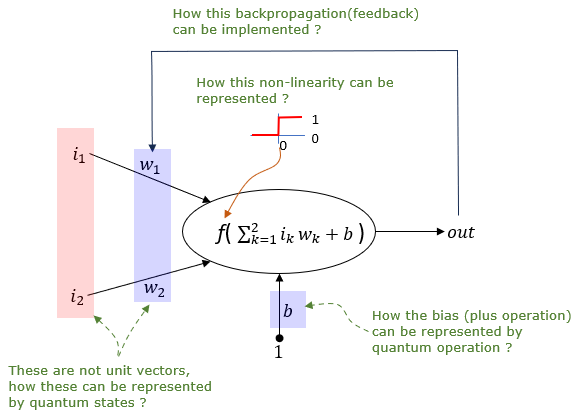

A lot of challenging questions popped into my mind when I thought about the fundamental math used in quantum computing.

In classical computing, we are used to working with arbitrary vectors, including non-unit vectors, and using bias terms freely in algorithms like neural networks. However, quantum computing operates under different rules due to the principles of quantum mechanics. Quantum states are unit vectors by necessity, because the squared magnitudes of their amplitudes are measurement probabilities, which must sum to one. This constraint means that we cannot directly apply classical concepts without modification.
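
As a minimal sketch of this constraint, the plain-Python snippet below rescales a classical non-unit vector to unit length and checks that the squared amplitudes sum to one. The vector values and the names `normalize`, `classical`, and `recovered` are hypothetical, chosen only for illustration; a real device would also need a state-preparation circuit, which is not shown here.

```python
import math

def normalize(vector):
    """Scale a real classical vector to unit length and return it with its original norm."""
    norm = math.sqrt(sum(v * v for v in vector))
    if norm == 0:
        raise ValueError("the zero vector cannot be normalized")
    return [v / norm for v in vector], norm

# Hypothetical classical data: 4 entries, so the amplitudes would fit in 2 qubits.
classical = [3.0, 1.0, 2.0, 1.0]
amplitudes, norm = normalize(classical)

# Measurement probabilities are the squared amplitudes and must sum to one.
probabilities = [a * a for a in amplitudes]

# The original scale is recovered by multiplying with the norm kept on the classical side.
recovered = [a * norm for a in amplitudes]

print(sum(probabilities))
print(recovered)
```

The original length is not stored in the quantum state itself, so in this sketch it is kept as an ordinary classical number.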

The following are the first questions that came to my mind.

A lot of research has been done on these questions, and some progress has been made, although we can't yet say that these problems are cleanly solved.

- Representation of Non-Unit Vectors: While quantum states are indeed unit vectors, you can encode classical non-unit vectors into quantum states. Several approaches have been proposed and are being investigated. Each of these methods has its own way of translating non-unit vectors into something a quantum system can work with, keeping in mind that the quantum world operates under strict rules that don't always match up with our classical intuition.

- Normalization: This is like resizing a picture to fit into a frame. You rescale your non-unit vector so that it fits within the quantum system's rules, which require vectors to have unit length. Once the computation is done, you can 'zoom back out' to understand the results in their original context, provided you kept the original length on the classical side.

- Phase Encoding: Imagine you have a song where the volume (magnitude) stays the same, but the tone (phase) changes. Similarly, you can keep the 'volume' of your quantum states constant but change the 'tone' to represent different values of your non-unit vector. This way, the information is embedded not in the loudness but in the quality of the sound.

- Amplitude Encoding: This is akin to creating a miniaturized model of a building. You take a large structure (the non-unit vector) and create a smaller, proportional model (the normalized quantum state) that can be used within the quantum system.

- Ancillary Systems: You could use additional qubits, much like adding more coaches to a train. These extra coaches can be used to 'carry' the information of a non-unit vector within the quantum system by distributing the information across a larger set of qubits.

- Quantum Data Compression: This method compresses the information of a large non-unit vector into a quantum state, similar to how a zip file compresses data to make it fit into a smaller space. The quantum system handles this compressed data, and then you can 'unzip' it back to the original non-unit vector after the computation.

- Hybrid Quantum-Classical Encoding: Sometimes, it's easier to split the task between a quantum system and a classical one. You can handle part of the vector classically and only use the quantum system for parts of the data that are suitable for quantum processing. It's like having both a physical notebook and a digital note-taking app; you use each one for what it's best at.

- Representation of Bias (Plus Operation): In quantum computing, adding a bias term is not as straightforward as in classical computing because quantum operations are unitary and thus must be reversible. However, you can simulate a bias by modifying the phase of a quantum state or by adding ancilla qubits that you entangle in a certain way with your system. The actual implementation would depend on the specific details of the quantum algorithm you're using.

- Non-linearity Representation: Quantum operations are linear by the nature of quantum mechanics. However, non-linearity in quantum neural networks can be introduced through measurement, as measuring a quantum state collapses it non-linearly based on the probabilities of the outcomes. Also, variational circuits with adjustable parameters can mimic non-linear functions when those parameters are tuned through classical feedback.

- Backpropagation (Feedback) Implementation: Quantum computing currently lacks a direct equivalent to the backpropagation algorithm used in classical neural networks to compute gradients for gradient descent. However, variational algorithms such as the Quantum Approximate Optimization Algorithm (QAOA) and the variational quantum eigensolver (VQE) follow a similar training pattern. They use parameterized quantum circuits whose parameters are updated in a classical optimization loop, informed by the results of quantum measurements.
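
The classical optimization loop from the last item can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the "circuit" is a single qubit prepared as RY(theta)|0>, simulated exactly rather than sampled on hardware, and the gradient comes from the parameter-shift rule, a commonly used technique that the list above does not name. The function names, learning rate, and starting angle are hypothetical.

```python
import math

def expectation_z(theta):
    """Simulate RY(theta)|0> exactly and return the expectation value of Pauli Z."""
    amp0 = math.cos(theta / 2)  # amplitude of |0>
    amp1 = math.sin(theta / 2)  # amplitude of |1>
    return amp0 ** 2 - amp1 ** 2  # P(0) - P(1)

def parameter_shift_gradient(f, theta):
    """Gradient from two extra circuit evaluations at theta +/- pi/2."""
    return (f(theta + math.pi / 2) - f(theta - math.pi / 2)) / 2

def train(theta=0.4, learning_rate=0.4, steps=50):
    """Classical gradient-descent loop that minimizes the measured expectation value."""
    for _ in range(steps):
        gradient = parameter_shift_gradient(expectation_z, theta)
        theta -= learning_rate * gradient
    return theta

theta_opt = train()
print(theta_opt, expectation_z(theta_opt))
```

The gradient is obtained only from circuit evaluations, which is why no backward pass through the circuit is needed; on real hardware each evaluation would be an average over many measurement shots rather than an exact number.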

Any New Approaches?

In the history of technology, we often see cases where simply translating concepts from conventional technologies to new ones creates more difficulties than it solves. Sometimes coming up with a new approach works better. Of course, many bright minds are working on novel approaches, and some of these efforts are listed below. Each of them seeks to do more than mimic classical neural networks; they aim to open new computational paradigms by integrating quantum mechanical principles directly into the computation and learning processes. This exploration is driven by the hope that quantum computing can offer computational advantages or solve problems in ways that are infeasible for classical computers. However, the field is still in its infancy, and much research is needed to understand the full potential and limitations of these quantum-native approaches.

- Quantum Circuit Learning: Utilizes the parameterized nature of quantum circuits to perform computations that inherently rely on quantum mechanical operations. This is different from classical neural networks, as it uses the principles of quantum mechanics to process and learn from data.

- Quantum Feature Maps: Encode classical or quantum data into a high-dimensional quantum space, exploiting the exponential state space of quantum systems to potentially reveal new features and correlations that classical computing methods cannot efficiently access.

- Entanglement-Based Models: Specifically use the quantum property of entanglement to model correlations and interactions within data. This quantum correlation does not have a classical equivalent and can potentially model complex patterns in data more efficiently.

- Quantum Boltzmann Machines: While inspired by classical Boltzmann machines, the quantum version uses quantum states and transitions to explore solution spaces, potentially offering faster convergence and exploration of energy landscapes thanks to quantum tunneling.

- Quantum Convolutional Neural Networks (QCNNs): Though they draw inspiration from classical CNNs, QCNNs implement convolution-like operations through quantum gates, leveraging quantum parallelism and entanglement to process quantum data in novel ways.

- Quantum Generative Models: Similar to classical generative models, but they operate in the quantum domain, using quantum circuits to generate new data samples. The quantum versions aim to utilize the quantum system's ability to represent and manipulate complex probability amplitudes.

- Hybrid Quantum-Classical Models: Integrate quantum processes with classical neural networks by using quantum computing for specific tasks (e.g., feature extraction) that could benefit from quantum advantages, and classical computing for tasks that are currently more efficiently performed on classical computers.

- Quantum Data Encoding: Involves innovative ways of representing data as quantum states that fully utilize quantum properties. This can involve complex encoding schemes that go beyond simply mimicking classical data representation.
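
To make the feature-map idea from the list above a little more concrete, here is a minimal plain-Python sketch. It assumes a simple angle encoding in which feature x_i sets qubit i to RY(x_i)|0>, and it compares two inputs by the squared overlap of their encoded states. The names `angle_feature_state` and `kernel` and the sample inputs are hypothetical. Because this particular map produces an unentangled product state, it is easy to simulate classically and offers no quantum advantage; it only shows how n features become a state with 2^n amplitudes.

```python
import math

def angle_feature_state(x):
    """Encode features as a product state: feature x_i sets qubit i to RY(x_i)|0>."""
    state = [1.0]
    for xi in x:
        qubit = [math.cos(xi / 2), math.sin(xi / 2)]
        # Tensor product of the state built so far with the next qubit.
        state = [a * q for a in state for q in qubit]
    return state

def kernel(x, y):
    """Squared overlap of the two encoded states (amplitudes are real here)."""
    state_x = angle_feature_state(x)
    state_y = angle_feature_state(y)
    overlap = sum(a * b for a, b in zip(state_x, state_y))
    return overlap ** 2

# Hypothetical inputs with 3 features each, giving 2**3 = 8 amplitudes.
x = [0.1, 0.2, 0.3]
y = [0.4, 0.1, 0.9]
print(len(angle_feature_state(x)))
print(kernel(x, x), kernel(x, y))
```

A kernel value like this could then be handed to a classical method such as a support vector machine, which is one way the hybrid quantum-classical split is often described.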
