
6G AI/ML

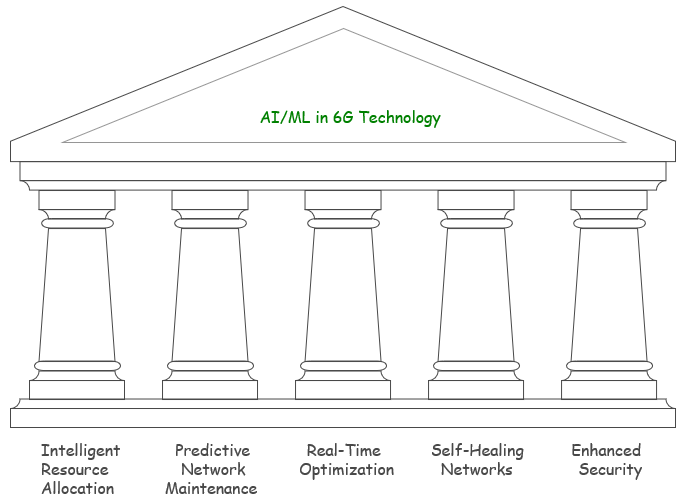

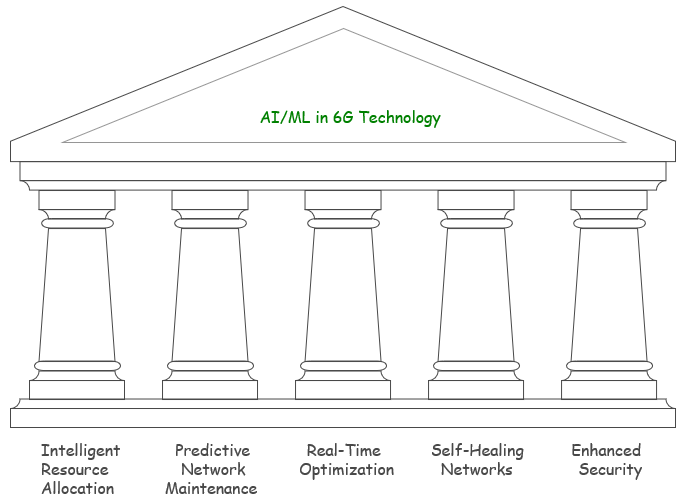

Artificial Intelligence (AI) and Machine Learning (ML) are widely expected to be key enablers of 6G. AI/ML is expected to drive major innovations, optimize network performance, enhance automation, and enable new intelligent services that go beyond traditional telecommunications paradigms. However, the specific applications and use cases in which AI/ML will be integrated into 6G networks are still not clearly defined. Since the standardization of 6G is still in its early stages, with organizations like 3GPP yet to finalize the technical specifications, there remains significant uncertainty regarding the precise scope of AI/ML's role.

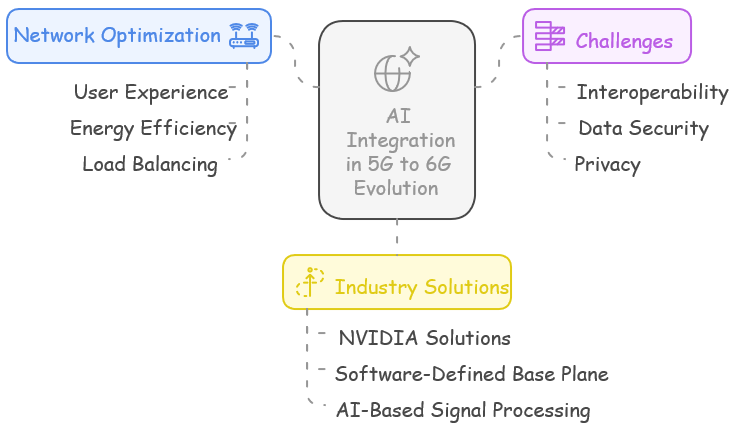

Despite this, it is possible to anticipate various potential use cases based on current technological trends and research. AI/ML could be instrumental in intelligent resource allocation, predictive network maintenance, real-time optimization, self-healing networks, and enhanced security mechanisms. Additionally, AI-driven edge computing, ultra-reliable low-latency applications, and autonomous decision-making systems are likely to be pivotal in shaping the future of 6G. Many questions remain unanswered, such as the extent to which AI will be embedded into the network fabric, its interaction with other emerging technologies like quantum computing and the metaverse, and the regulatory and ethical considerations. Even so, it is essential to consolidate potential use cases, explore AI/ML models, and analyze possible deployment scenarios.

This effort aims to bridge the gap between current AI-driven advancements in 5G and the transformative potential of AI/ML in 6G, fostering discussions and insights that will contribute to defining its role in next-generation networks.

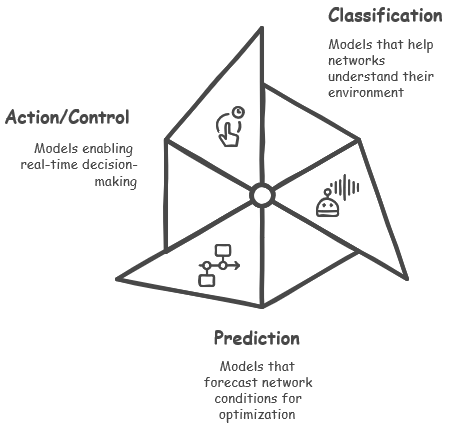

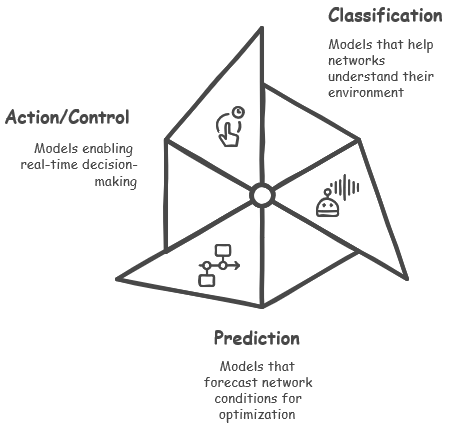

Machine learning models are designed for specific tasks, and while they excel in certain areas, they may not perform well in others. Choosing the right ML model is crucial for maximizing efficiency and achieving the best results in communication systems. The table further below categorizes ML models by the following primary functions:

- Classification: These models are used to identify patterns and categorize data, such as recognizing network conditions or classifying user behaviors.

- Prediction: These models forecast future trends or system behavior, which helps in proactive decision-making and resource optimization.

- Action/Control: These models enable AI to take action based on observations, optimizing real-time decision-making in dynamic network environments.

As AI/ML integration deepens in 6G networks, these models will play a crucial role in enhancing automation, reducing latency, and optimizing resource management. Classification models will help networks better understand their environment, predictive models will allow for smarter preemptive adjustments, and action/control models will enable real-time decision-making with minimal human intervention. These AI-driven innovations will make future networks more adaptive, resilient, and efficient, paving the way for ultra-reliable and intelligent wireless communication systems.

The table below lists different ML models along with their specific use cases in modern communication networks. These examples highlight how AI/ML is enhancing performance, improving network efficiency, and enabling smarter automation.

| Category | ML Model | Use Case |
|---|---|---|
| Classification | LSTM | LiDAR Aided Human Blockage Prediction – AI predicts when human movement will block signals, allowing the network to adjust and maintain connectivity. |
| Classification | GNN | Access Point Selection in Cell-Free Massive MIMO Systems – AI analyzes network topology and user positions to assign the best access point dynamically. |
| Prediction | LASSO | AI-Based Compressed Sensing for Beam Selection in D-MIMO – AI predicts the best beamforming strategy, reducing overhead and improving spectral efficiency. |
| Prediction | RNN, LSTM, Transformer | AI/ML-Based Predictive Orchestration – AI forecasts network conditions and automatically adjusts resources for optimal performance. |
| Action/Control | CNN, RL | Constellation Shaping – AI optimizes signal modulation to enhance data transmission quality and reduce interference. |
| Action/Control | FL | Distributed AI for Automated UPF Scaling in Low-Latency Network Slices – AI dynamically adjusts user plane function (UPF) resources to meet traffic demands while minimizing latency. |
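To make the "predictive orchestration" and "UPF scaling" rows above a little more concrete, here is a minimal sketch of forecast-then-scale logic. It is only an illustration under stated assumptions: Holt's linear smoothing is used as a simple stand-in for the RNN/LSTM/Transformer forecasters named in the table, and the function names, the traffic samples, `capacity_per_upf`, and `headroom` are all hypothetical values chosen for the example, not taken from any specification.

```python
import math

def holt_forecast(loads, alpha=0.5, beta=0.3):
    """One-step-ahead forecast using Holt's linear (level + trend) smoothing.

    A deliberately simple stand-in for a learned forecaster such as an LSTM.
    """
    if len(loads) < 2:
        raise ValueError("need at least two load samples")
    level, trend = loads[0], loads[1] - loads[0]
    for x in loads[1:]:
        prev_level = level
        level = alpha * x + (1 - alpha) * (level + trend)
        trend = beta * (level - prev_level) + (1 - beta) * trend
    return level + trend

def upf_instances_needed(predicted_load, capacity_per_upf, headroom=0.2):
    """Instances required to carry the predicted load plus a safety margin."""
    return max(1, math.ceil(predicted_load * (1 + headroom) / capacity_per_upf))

# Hypothetical traffic samples (Gbps) showing a steady ramp.
history = [10.0, 12.0, 14.0, 16.0, 18.0]
predicted = holt_forecast(history)
# Scale before the load arrives, not after it has been observed.
target = upf_instances_needed(predicted, capacity_per_upf=10.0)
print(f"predicted load: {predicted:.1f} Gbps -> scale to {target} UPF instance(s)")
```

The point of the sketch is the ordering: the scaling decision is driven by the forecast for the next interval rather than by the current measurement, which is what distinguishes preemptive scaling from reactive scaling.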

What specific applications will AI/ML be used for? In other words, what will the use cases for AI/ML in 6G be?

Here's a simple table to break it down for you. It lists specific situations, or "Use Cases", where ML is applied. Next to each use case, there's a brief "Description" of what's going on. "ML Model" indicates the typical ML model employed for the use case.

This table is a summary of the use cases described in Hexa-X Deliverable D4.2.

| Use Case | Description | ML Model |
|---|---|---|
| LiDAR Aided Human Blockage Prediction | Monitor indoor activity and predict dynamic human blockages, aimed at improving the reliability of the link, reducing link failures while supporting mobility and adapting to the dynamics of the environment. | LSTM |
| Access point selection in cell-free massive MIMO systems | Predict candidate Access Points (APs) based on limited measurements, improving latency and achieving mobility support targets. | GNN |
| AI based compressed sensing for beam selection in D-MIMO | Leverages the sparsity of radio propagation in multipath channels, enabling fewer measurements for beam selection. The Access Points (APs) transmit the same reference signal in all directions, and the best beam direction and corresponding channel gains are determined using compressed sensing computation. | LASSO |
| Constellation shaping | Removes the need for transmitting pilot symbols within the waveform, as the geometric shape of the complex-valued constellation is learned. | CNN, RL |
| ML aided channel (de)coding for constrained devices | Enhance channel coding, particularly in the short block length regime. | RNN |
| DL for location-based beamforming | Learn the location/precoder mapping, overcoming limitations of existing LBB methods that assume the existence of a Line of Sight (LoS) path. | MLP |
| ML aided beam management | Allows a newly activated AP to start serving users immediately, using information from neighbouring APs to select the appropriate beam. | DCB |
| TX-side CNN for reducing PA-induced out-of-band emissions | Reduce out-of-band emissions caused by a nonlinear power amplifier (PA). | CNN |
| ML/AI empowered receiver for PA non-linearity compensation | Compensate for the non-linearities of Power Amplifiers (PAs) at the receiver. This approach allows for higher distortions to be tolerated, reducing the PA output backoff, leading to higher output power and more energy-efficient operation of the transmitters. | AI/ML Demapper |
| AI/ML-based predictive orchestration | Predict future demands and arrange resource provisioning in advance. Reduce service instantiation time and provide resilience for potential hazards that could emerge from connectivity failure. | RNN, LSTM |
| Distributed AI for automated UPF scaling in low-latency network slices | Trigger preemptive auto-scaling of local UPFs at the network edge. This is achieved by deploying a distributed Network Data Analytics Function (NWDAF) instance in a strategic location that can autonomously monitor the network in the target geographical area. The AI agent, deployed in distributed edge computing resources, processes data from the NWDAF, applies the AI algorithm and models, and controls the respective UPF scaling. | FL |

The following acronyms are used in the table:

- CNN: Convolutional Neural Network
- RNN: Recurrent Neural Network
- GNN: Graph Neural Network
- LSTM: Long Short-Term Memory
- MLP: Multi-Layer Perceptron
- RL: Reinforcement Learning
- FL: Federated Learning
- DCB: Deep Contextual Bandit
- ML: Machine Learning
- DL: Deep Learning
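The compressed-sensing beam selection row in the table relies on the channel being sparse across candidate beam directions, so that fewer measurements than beams are enough. The sketch below illustrates that idea with LASSO solved by ISTA (iterative soft-thresholding). It is a toy model under several assumptions that are not from the source: real-valued gains instead of complex ones, a random Gaussian measurement matrix, no noise, and hypothetical sizes (16 candidate beams, 12 measurements, 2 active paths).

```python
import random

def soft_threshold(v, t):
    """Proximal operator of t*|v|: shrink v toward zero by t."""
    if v > t:
        return v - t
    if v < -t:
        return v + t
    return 0.0

def lasso_objective(A, y, x, lam):
    """0.5*||y - A x||^2 + lam*||x||_1, with A given as a list of rows."""
    resid = [yi - sum(a * xj for a, xj in zip(row, x)) for row, yi in zip(A, y)]
    return 0.5 * sum(r * r for r in resid) + lam * sum(abs(xj) for xj in x)

def ista_lasso(A, y, lam, n_iter=2000):
    """Solve the LASSO problem with ISTA (proximal gradient descent)."""
    n = len(A[0])
    # The squared Frobenius norm upper-bounds the largest eigenvalue of A^T A,
    # so 1/L is a safe (if conservative) step size.
    L = sum(a * a for row in A for a in row)
    x = [0.0] * n
    for _ in range(n_iter):
        resid = [sum(a * xj for a, xj in zip(row, x)) - yi for row, yi in zip(A, y)]
        grad = [sum(A[i][j] * resid[i] for i in range(len(A))) for j in range(n)]
        x = [soft_threshold(xj - g / L, lam / L) for xj, g in zip(x, grad)]
    return x

rng = random.Random(0)
n_beams, n_meas = 16, 12  # hypothetical: fewer measurements than candidate beams
A = [[rng.gauss(0.0, 1.0) / n_meas ** 0.5 for _ in range(n_beams)] for _ in range(n_meas)]
x_true = [0.0] * n_beams
x_true[5], x_true[11] = 1.0, 0.4  # two propagation paths: one strong, one weak
y = [sum(a * xj for a, xj in zip(row, x_true)) for row in A]

x_hat = ista_lasso(A, y, lam=0.01)
# The selected beam is the direction with the largest estimated gain.
best_beam = max(range(n_beams), key=lambda j: abs(x_hat[j]))
print("selected beam index:", best_beam)
```

The L1 penalty is what encodes the sparsity assumption: it pushes most beam-direction gains to exactly zero, so the few directions that survive are the candidates for the best beam.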

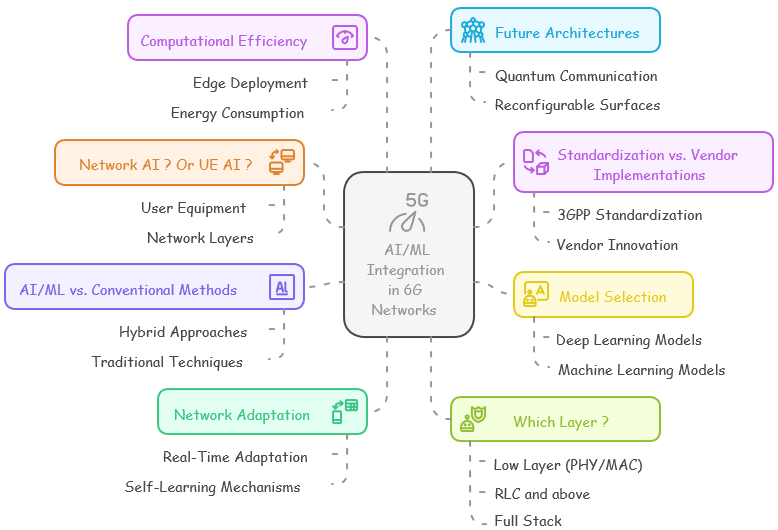

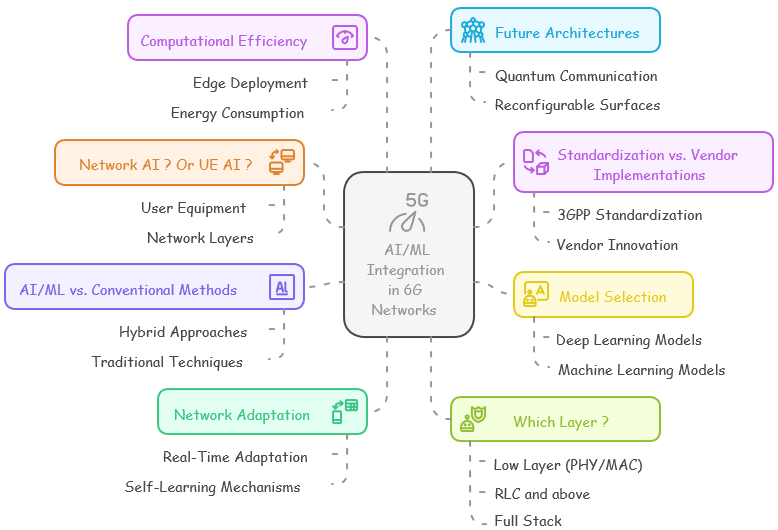

As AI and ML become important parts of 6G, there are still many questions about how they will be used in communication systems. AI has already helped improve network performance in 5G, but its role in 6G will be even bigger, possibly affecting all parts of the network. However, before AI/ML can work well in 6G, several challenges need to be solved, such as technical issues, standardization, and implementation. Key questions include where AI/ML should be applied, which parts will be standardized and which will be left to vendors, what types of AI/ML models should be used, and whether AI/ML will completely replace existing methods or work alongside them. These decisions will shape the future of AI-powered telecommunications and determine how practical and effective AI/ML will be in 6G networks.

- AI/ML Integration Across Network Layers
  - At which stages along the overall communication path should AI/ML be incorporated? (e.g., User Equipment vs. Network, Higher Layer vs. Lower Layer such as PHY, MAC, RLC)
  - Will AI/ML play a more significant role in lower layers (PHY, MAC) for real-time optimizations, or will it be more relevant in higher layers (RRC, application) for network intelligence and service enhancements?
- Standardization vs. Vendor-Specific Implementations
  - Which aspects of AI/ML will be formally standardized by 3GPP, and which parts will be left to vendors for proprietary innovation?
  - Will there be a unified AI/ML framework across vendors, or will different implementations lead to interoperability challenges?
- AI/ML Model Selection and Suitability
  - What types of Deep Learning (DL) or Machine Learning (ML) models will be used for different use cases?
  - Should different AI models be optimized for specific network functions, such as Reinforcement Learning for resource allocation, Supervised Learning for signal processing, or Unsupervised Learning for anomaly detection?
- AI/ML vs. Conventional Methods
  - When applying AI/ML in the network, should it completely replace traditional methods, or should it serve as an optional enhancement?
  - For example, if AI/ML is used for channel estimation, will it fully replace conventional techniques like Minimum Mean Square Error (MMSE), or will it work alongside them as a hybrid approach?
- AI/ML for Network Adaptation and Optimization
  - How can AI/ML dynamically adapt to changing network conditions and user demands?
  - Can AI-driven self-learning mechanisms continuously optimize network configurations without human intervention?
- AI/ML for Security and Privacy
  - What are the security implications of embedding AI/ML into critical network functions?
  - How can AI/ML be used to enhance network security, such as detecting and mitigating attacks in real time?
  - What measures should be taken to ensure AI/ML-based decision-making remains transparent and free from bias?
- Computational and Energy Efficiency Challenges
  - Given the high computational cost of AI models, how can we ensure efficient AI/ML processing without excessive energy consumption?
  - Can AI/ML models be efficiently deployed at the edge or in power-constrained devices such as IoT and mobile terminals?
- Future AI/ML-driven Network Architectures
  - Will 6G require a fundamentally new network architecture to accommodate AI-native functionalities?
  - How will AI/ML interact with other emerging technologies such as quantum communication, reconfigurable intelligent surfaces (RIS), and terahertz communications?
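The "AI/ML vs. Conventional Methods" question above mentions MMSE channel estimation as the conventional technique a learned estimator would replace or complement. As a reference point, here is a minimal sketch of that conventional baseline, comparing least-squares (LS) and linear MMSE (LMMSE) estimation. The setup is an assumption made for illustration only: a scalar, real-valued, flat-fading channel `y = h*pilot + n` with known variances `var_h` and `var_n`; real systems are complex-valued and multi-dimensional.

```python
import random

def ls_estimate(y, pilot):
    """Least-squares estimate: ignores noise and channel statistics."""
    return y / pilot

def lmmse_estimate(y, pilot, var_h, var_n):
    """Scalar LMMSE estimate: E[h*y] / E[y^2] * y for zero-mean h and n."""
    return (var_h * pilot) / (var_h * pilot * pilot + var_n) * y

def simulate_mse(var_h=1.0, var_n=0.5, pilot=1.0, trials=20000, seed=1):
    """Monte Carlo mean squared error of both estimators."""
    rng = random.Random(seed)
    se_ls = se_lmmse = 0.0
    for _ in range(trials):
        h = rng.gauss(0.0, var_h ** 0.5)
        y = h * pilot + rng.gauss(0.0, var_n ** 0.5)
        se_ls += (ls_estimate(y, pilot) - h) ** 2
        se_lmmse += (lmmse_estimate(y, pilot, var_h, var_n) - h) ** 2
    return se_ls / trials, se_lmmse / trials

mse_ls, mse_lmmse = simulate_mse()
# Closed forms for this toy model:
#   LS:    var_n / pilot^2
#   LMMSE: var_h * var_n / (var_h * pilot^2 + var_n)
print(f"LS MSE: {mse_ls:.3f}, LMMSE MSE: {mse_lmmse:.3f}")
```

A baseline like this is also what makes the "hybrid" option testable: a learned estimator can be compared against LMMSE when the channel statistics are known, and is most interesting in the cases where those statistics are unknown or the model assumptions do not hold.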

With the spread of AI since early 2023, I have been trying a new approach to studying that uses various AI tools. In this section, I pick some YouTube materials that look informative (at least to me). The content shared in this section is created as follows:

- Watch the full YouTube video myself.
  - NOTE: This is essential, since there is a lot of visual material that a summary cannot convey, and a summary also misses some details. If you skip this step, nothing would go through your brain... it would just go through YouTube and directly through AI. Then, AI would learn but you would not :)
  - NOTE: I usually pick materials with specific technical information and use cases rather than high-level overviews.
- Get the transcript from YouTube (as of 2023, YouTube provides a built-in function to generate the transcript for a video).
- Copy the transcript, save it into a text file, paste the text file into ChatGPT (GPT-4o), and request a summary.
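The copy-and-paste step above can be tidied up with a small helper before the text goes into the chat window. This is only a sketch under assumptions: it assumes the copied transcript has timestamps on their own lines (such as `0:05` or `1:02:33`), and the function names and the word limit are hypothetical choices, not part of any tool mentioned above.

```python
import re

# Matches a line that contains only a timestamp, e.g. "0:05" or "1:02:33".
TIMESTAMP = re.compile(r"^\d{1,2}:\d{2}(:\d{2})?$")

def clean_transcript(raw):
    """Drop timestamp-only lines and join the remaining text into one paragraph."""
    lines = [ln.strip() for ln in raw.splitlines()]
    return " ".join(ln for ln in lines if ln and not TIMESTAMP.match(ln))

def chunk_words(text, max_words=1500):
    """Split text into pieces of at most max_words words for pasting in turn."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]

raw = "0:00\nwelcome to the talk\n0:05\ntoday we discuss 6G\n1:02:33\nthank you"
cleaned = clean_transcript(raw)
chunks = chunk_words(cleaned, max_words=5)
print(chunks)
```

Chunking only matters for long talks whose transcript does not fit into a single prompt; for short videos the cleaned text can be pasted as one piece.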

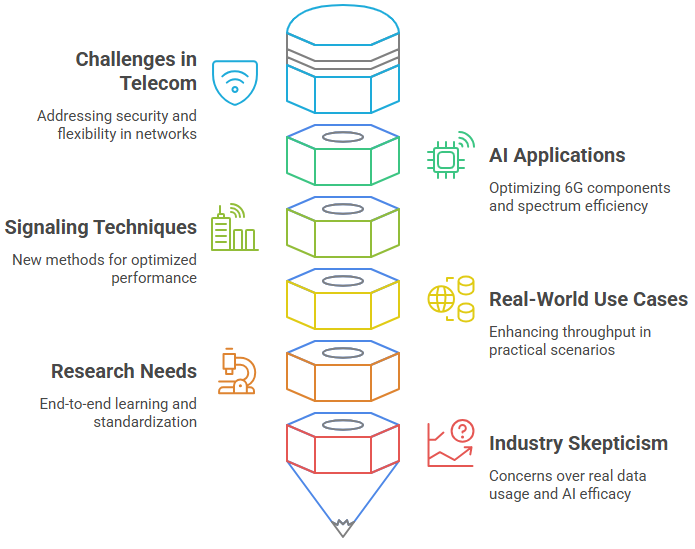

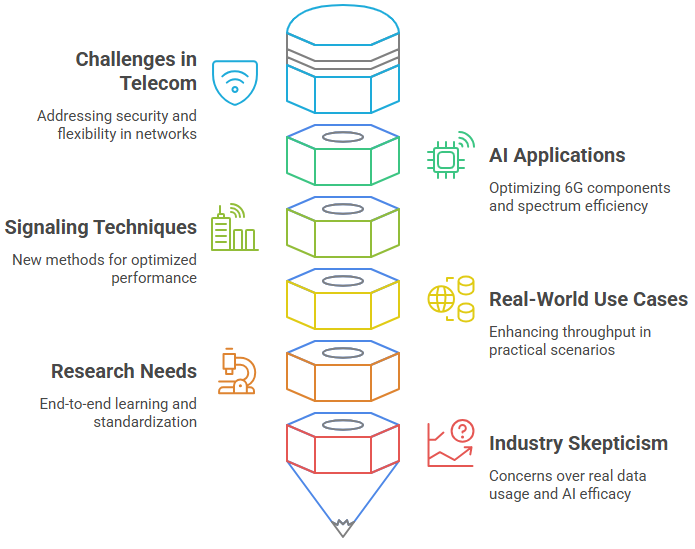

The talk delivered by Jakob Hoydis, the head of the research department at Nokia Bell Labs in France, focused on exploring machine learning applications for the physical and medium access control (MAC) layers in communication systems. He shared a vision for a 6G system in which machine learning plays a pivotal role in designing the network, particularly the AI-native air interface. The discussion covered the potential of creating virtual digital twin worlds, augmenting intelligence, and controlling robots, all on a global scale through 6G. Key technologies necessary for 6G were highlighted, including AI optimization, spectrum sharing, and new security paradigms. He also touched on the shift from traditional design approaches to data-driven machine learning techniques for enhancing communication systems. He emphasized the importance of differentiable transceiver algorithms and the potential for learning custom waveforms and signaling schemes. The talk concluded with a Q&A session discussing the impact on standards, real-time processing, data generation for simulations, and the nature of training for these advanced systems.

- Key Takeaways from the Talk:
  - Challenges & Evolution in Telecommunications: Increasing data rates require new scheduling algorithms and multiplexing waveforms. Networks are evolving to be more flexible and specialized for new verticals, while private networks face concerns over security, jamming, and information sharing.
  - AI in 6G & Potential Use Cases: AI can enhance or replace 5G components by learning new waveforms, constellations, and access schemes. It optimizes hardware non-linearities, improves spectrum efficiency, and manages dynamic access methods better than humans. Larger neural networks require specialized hardware acceleration to function efficiently.
  - AI-Driven Signaling & Transceivers: AI components will necessitate new signaling techniques and differentiable transceivers for optimized end-to-end performance. Binary cross-entropy loss functions align well with practical decoding tasks in communication systems.
  - Real-World Use Cases & Impact on Throughput: AI can significantly improve physical layer tasks such as neural receivers and pilotless transmissions, leading to better throughput and eliminating the need for pilot placement management.
  - Future Research & Standardization Needs: Research areas include end-to-end learning for custom waveforms, application-specific learning, and overcoming challenges in decentralized and federated learning. Standardization is required for machine-learned configurations and protocols to ensure seamless integration across systems.
  - Industry Skepticism & Use of Real Data: While academia relies on simulations, commercial datasets are needed for practical AI advancements. Some experts remain skeptical about AI-based learning in real-world physical layer tasks.
- Key Q&A Insights:
  - Shift to Data-Driven Methods & Standards: AI-driven training can improve efficiency but requires specialized hardware accelerators. Machine learning integration into physical layers is still in early stages, with minimal impact on existing standards.
  - Mobility & Real-Time Processing Challenges: AI methods can adapt to different channel models and Doppler effects, making them resilient to mobility. However, AI-based physical layer processing requires ultra-fast speeds (nano- to microseconds), necessitating specialized hardware.
  - Simulation vs. Real Data & Training Challenges: Research currently relies on 3GPP models due to limited real-world datasets. While offline training is viable, real-time AI adaptation remains impractical for 5G deployments.
  - Adaptive Modulation, Overhead & Optimization: AI models can dynamically adjust modulation and loss functions, optimizing efficiency based on network conditions. The trade-off between training stability and real-time adaptation remains a key challenge.
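The takeaway above that binary cross-entropy (BCE) aligns well with decoding tasks can be illustrated numerically. For uniformly distributed bits, the expected BCE of a demapper's soft outputs equals the conditional entropy of the bit given the received sample plus a mismatch penalty, so `1 - BCE/ln(2)` is an estimate of the rate (in bits per channel use) that a bit-wise decoder can achieve with those soft outputs. The sketch below checks this for BPSK over a real AWGN channel. The setup, function names, and the `llr_scale` knob used to emulate a badly calibrated demapper are assumptions for illustration, not something described in the talk summary.

```python
import math
import random

def softplus(z):
    """Numerically stable log(1 + exp(z))."""
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))

def bce_from_llr(bit, llr):
    """Binary cross-entropy in nats, with llr = log P(b=1|y) / P(b=0|y) as the logit."""
    return softplus(-llr) if bit == 1 else softplus(llr)

def bpsk_rate_estimate(snr_db, llr_scale=1.0, n=50000, seed=0):
    """Estimate the achievable rate (bits/use) as 1 - BCE/ln(2) by Monte Carlo."""
    rng = random.Random(seed)
    noise_var = 10 ** (-snr_db / 10)  # unit-energy BPSK, real-valued noise
    total = 0.0
    for _ in range(n):
        bit = rng.randint(0, 1)
        y = (2 * bit - 1) + rng.gauss(0.0, math.sqrt(noise_var))
        # Exact LLR for this channel is 2*y/noise_var; llr_scale != 1 emulates
        # a mismatched (overconfident or underconfident) demapper.
        llr = llr_scale * 2 * y / noise_var
        total += bce_from_llr(bit, llr)
    return 1.0 - (total / n) / math.log(2)

rate_exact = bpsk_rate_estimate(snr_db=0.0)
rate_mismatched = bpsk_rate_estimate(snr_db=0.0, llr_scale=4.0)
print(f"exact LLRs: {rate_exact:.3f} bit/use, mismatched LLRs: {rate_mismatched:.3f} bit/use")
```

With exact LLRs the estimate lands close to the BPSK mutual information at that SNR (a little under 0.5 bit per channel use at 0 dB), while the overconfident demapper scores much lower even though its hard decisions are identical. That is the sense in which training a neural demapper on BCE directly targets what the channel decoder needs.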

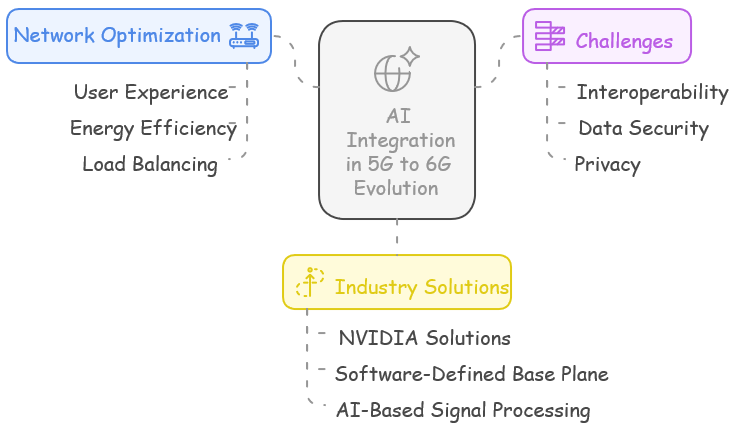

The transcript discusses various aspects of AI in 5G evolution towards 6G, focusing on AI's role in enhancing network performance and addressing new use cases in mobile communication. It covers the integration of AI in network design, the importance of data-driven architecture, and the collaboration between academia, industry, and standardization bodies for a globally aligned approach towards 6G. The presentation emphasizes the potential of AI in improving network management, user experience, and system performance in telecommunication networks. Key points include the exploration of AI-enabled radio access networks, the study of functional frameworks for AI in telecommunication, and the challenges and opportunities in integrating AI into existing mobile network architectures.

- Key Takeaways:
  - AI's Role in Network Optimization & 6G Evolution: AI enhances network management, user experience, energy efficiency, load balancing, and mobility optimization. Its significance in non-terrestrial networks is gaining recognition across academia and industry.
  - Challenges in AI Integration: Implementing AI across multi-vendor environments presents interoperability issues, requiring standardized integration for global scalability. Maintaining user privacy and data security is crucial, especially when transferring AI models between network entities.
  - Need for AI Validation & Collaboration: Establishing a common AI framework for 6G requires industry collaboration, along with robust methodologies to assess AI performance across diverse network scenarios.
- Industry Solutions & Developments:
  - NVIDIA-Driven AI Solutions:
    - Software-Defined Baseband in 5G: The transition to GPU-implemented, CUDA-powered baseband functionality reflects the shift toward software-driven network solutions.
    - Hardware & Software Framework: The NVIDIA Converged Accelerator integrates GPU computational power, network acceleration, and CPU security in a single high-performance package, supporting various AI applications in communication networks.
    - AI-Based Signal Processing: GPUs handle critical signal processing tasks for the Physical Downlink Shared Channel (PDSCH) and Physical Uplink Control Channel (PUCCH) using proprietary methods, enhancing AI-driven air interface signal processing.

NOTE: To download the presentation material, check this out on LinkedIn.

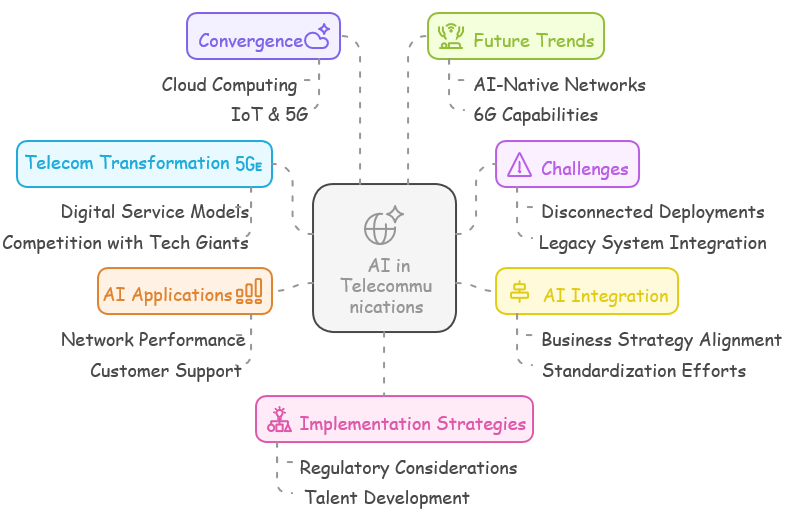

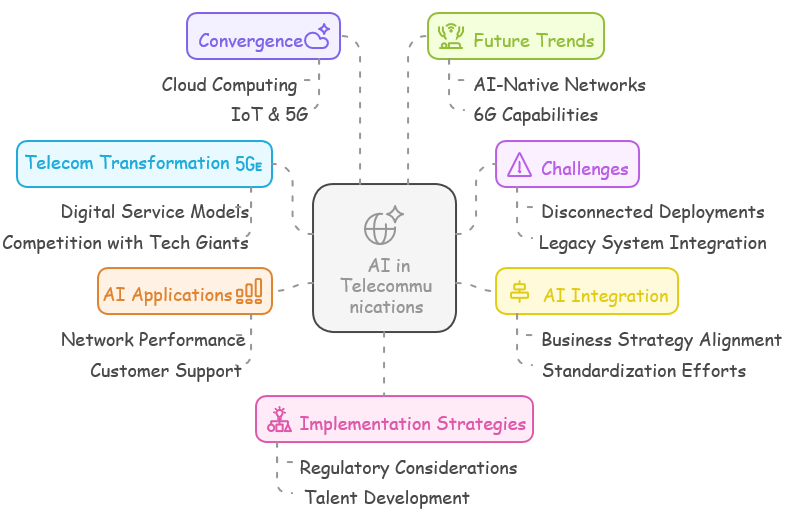

This talk looks at the telecommunications sector, focusing on the transformation of service providers into digital entities that adopt AI as a core component of their services. It highlights how AI is dispersed across various domains like networking, IT, OSS, BSS, billing, sales, and marketing. It also addresses the challenge of scattered AI initiatives lacking a cohesive architecture. The speaker emphasizes the importance of AI in competing with digital service providers like Google and Amazon, and discusses the need for a solid reference architecture for AI in telecommunications.

- Key Takeaways:
  - AI's Role in Telecom Transformation: AI is driving telecom companies toward digital service provider models to compete with tech giants, enhancing network operations, customer service, and marketing strategies.
  - Challenges in AI & ML Implementation:
    - AI is often deployed in disconnected ways without a standardized framework, leading to inefficiencies.
    - Integration with legacy systems and scalability pose significant challenges.
    - Talent and skill gaps hinder AI expertise development in the telecom sector.
  - AI Integration & Strategic Alignment:
    - AI should align with business strategies for long-term success and enhanced telecom services.
    - Standardization efforts are needed to unify AI implementation across different domains.
    - Industry collaboration is critical to overcoming AI adoption hurdles.
- AI Applications in Telecom:
  - AI Categories in Telecom:
    - Narrow AI: Used for specific tasks like chatbots and network optimization.
    - General AI & Super AI: Theoretical concepts with potential future applications.
  - Adoption Across Telecom Domains:
    - AI improves network performance, predictive maintenance, customer support, sales, billing accuracy, and IT infrastructure optimization.
  - Convergence with Emerging Technologies:
    - Cloud & Edge Computing: AI enhances scalability, flexibility, and real-time processing for telecom networks.
    - IoT & 5G: AI-driven insights optimize data management, enabling smarter networks.
- Future Trends & AI Evolution in 6G:
  - AI-Native Networks: Future networks will be inherently designed around AI capabilities, integrating sophisticated analytics and enhancing customer experience.
  - 6G & AI: AI will play a key role in advancing telecom capabilities with improved automation and decision-making.
- MLOps & AI Implementation Strategies:
  - Best Practices & Frameworks: AI models must be efficiently deployed, continuously adapted, and integrated seamlessly with telecom infrastructure.
  - Regulatory & Ethical Considerations: Compliance with legal and ethical standards is crucial for sustainable AI adoption.
- Key Recommendations:
  - Standardizing AI Architectures: The industry must establish common AI frameworks and methodologies.
  - Pilot Programs for AI Adoption: Small-scale AI trials should pave the way for larger implementations.
  - Collaboration & Talent Development: Strong industry partnerships and workforce training are needed to support AI-driven transformation.
