
6G Research on MAC

6G research on the Medium Access Control (MAC) layer is at the forefront of next-generation wireless communication advancements. As we transition from 5G to 6G, the MAC layer faces new challenges and demands, necessitating innovative solutions. The goal is to ensure efficient, reliable, and scalable communication in increasingly complex networks.

In this note, I review which novel features are likely to be introduced and why they will be required, compared with the MAC of conventional systems.

Traditional MAC protocols, such as those used in 5G, may not be directly applied to Terahertz communication due to the unique features of the Terahertz band. Here are the reasons highlighted:

Path and Molecular Loss: Terahertz communication experiences significant path and molecular absorption losses. These losses are especially severe in the Terahertz band and can significantly impact communication efficiency.

- Path Loss: High path loss in the Terahertz band means that the effective communication range can be limited. MAC protocols need to be designed to handle frequent link outages and ensure that devices within this limited range can efficiently access the medium. Due to the variability in path loss depending on distance and environment, MAC protocols might need to incorporate adaptive scheduling mechanisms to prioritize devices based on their link quality.

- Molecular Absorption: Molecular absorption leads to frequency-selective behavior, creating transmission windows. A MAC protocol needs to be aware of these windows to schedule transmissions effectively. Since molecular absorption defines several transmission windows depending on the distance, MAC protocols might need to incorporate mechanisms for dynamic frequency selection to optimize communication based on current conditions.

- Molecular Noise: The presence of molecular noise can affect the Signal-to-Noise Ratio (SNR) of a communication link. MAC protocols need to account for this when scheduling transmissions to ensure reliable communication. Given the potential for increased noise, MAC protocols might need enhanced error handling and retransmission mechanisms to maintain data integrity.

- Transmission Windows: Because specific transmission windows open and close with the path losses, not all frequency bands are equally suitable for communication at all times. MAC protocols need to be designed to select the most suitable frequency bands for transmission, and might need dynamic bandwidth allocation mechanisms to use the available transmission windows effectively. This could involve allocating more bandwidth to devices operating in favorable windows and less to those in less favorable conditions.
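The distance dependence described above can be made concrete with a small sketch. It assumes the commonly used model in which total loss is the free-space spreading loss plus a molecular absorption term of the form exp(k·d). The two candidate windows and their absorption coefficients are hypothetical placeholders, not measured values.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def spreading_loss_db(freq_hz, dist_m):
    """Free-space spreading loss in dB."""
    return 20.0 * math.log10(4.0 * math.pi * freq_hz * dist_m / C)


def absorption_loss_db(k_abs_per_m, dist_m):
    """Molecular absorption loss exp(k * d), expressed in dB."""
    return 10.0 * math.log10(math.e) * k_abs_per_m * dist_m


def total_loss_db(freq_hz, dist_m, k_abs_per_m):
    """Spreading loss plus absorption loss in dB."""
    return spreading_loss_db(freq_hz, dist_m) + absorption_loss_db(k_abs_per_m, dist_m)


def best_window(windows, dist_m):
    """Pick the candidate window with the lowest total loss at this distance."""
    return min(
        windows,
        key=lambda w: total_loss_db(w["freq_hz"], dist_m, w["k_abs_per_m"]),
    )


# Hypothetical windows: the absorption coefficients are illustrative only.
WINDOWS = [
    {"name": "w_low", "freq_hz": 300e9, "k_abs_per_m": 0.05},
    {"name": "w_high", "freq_hz": 350e9, "k_abs_per_m": 0.001},
]

if __name__ == "__main__":
    for d in (1.0, 100.0):
        w = best_window(WINDOWS, d)
        loss = total_loss_db(w["freq_hz"], d, w["k_abs_per_m"])
        print(d, w["name"], round(loss, 1))
```

With these placeholder numbers the lower-frequency window wins at short range, while the window with less absorption wins at long range, which is the kind of decision a window-aware MAC would have to make per link.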

Multipath, Reflection, and Scattering: The Terahertz band is susceptible to multipath effects, reflections, and scattering. These phenomena can cause interference and degrade the quality of communication.

- Wavelength and Diffraction: As frequencies increase into the Terahertz band, the wavelength becomes shorter, leading to less free space diffraction. This results in THz waves being more directional than millimeter waves used in 5G.

- Scattering & Reflection Effects: Both 5G and THz communications experience scattering and reflection effects, but their impact and behavior can differ significantly due to the distinct frequency bands. For THz, the indoor application differs from outdoor applications mainly because of these effects. Different channel models might be required for different environments when designing MAC protocols for THz.

- Multipath: The multipath effects in THz can be distinct from 5G due to its unique propagation characteristics. The shorter wavelength and higher directionality can lead to different multipath profiles compared to 5G.

Antenna Requirements: The Terahertz band requires antenna designs suited to its propagation properties, and antennas used in lower frequency bands such as those of 5G might not be suitable. Antenna requirements and their associated challenges belong primarily to the Physical Layer (PHY) rather than the MAC layer: the PHY deals with the actual transmission and reception of signals, including modulation, demodulation, and antenna design, whereas the MAC layer is responsible for scheduling and planning the physical-layer resources. So although the antenna itself is a physical-layer matter, the MAC still needs to be involved in operating it.

- Antenna Directivity and Size: The Terahertz band allows for higher link directionality than mmWave for the same transmitter aperture. Additionally, the transmitted power and interference between antennas can be reduced by using smaller antennas with good directivity, which allows for more compact and efficient antenna designs. If the antennas are highly directional (as they tend to be in Terahertz communication), the MAC protocol needs to account for this when scheduling transmissions. Highly directional antennas might require beamforming and beam alignment procedures, which the MAC layer must manage. Because the beams are much more directional than in 5G, the 6G MAC will need more efficient beam management algorithms.

- Interference: The design and placement of antennas can influence interference levels. A MAC protocol designed for Terahertz communication might need to consider this interference when scheduling transmissions or when determining which nodes can transmit simultaneously.

- Transmission Range: The effective range of communication can be influenced by antenna design. The MAC layer needs to be aware of this range when determining which nodes can communicate with each other.
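To illustrate why the same aperture is more directional at Terahertz frequencies, here is a rough sketch based on the standard aperture gain relation G = 4πA/λ². The 1 cm² aperture, the two example frequencies, and the rule-of-thumb beamwidth conversion (G ≈ 41253 / θ² for a symmetric pencil beam, θ in degrees) are assumptions chosen for illustration.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def aperture_gain_db(freq_hz, aperture_m2, efficiency=1.0):
    """Gain of an aperture antenna, G = eff * 4*pi*A / lambda^2, in dB."""
    wavelength = C / freq_hz
    return 10.0 * math.log10(efficiency * 4.0 * math.pi * aperture_m2 / wavelength**2)


def approx_beamwidth_deg(gain_db):
    """Rule-of-thumb beamwidth of a symmetric pencil beam (an approximation)."""
    gain_linear = 10.0 ** (gain_db / 10.0)
    return math.sqrt(41253.0 / gain_linear)


if __name__ == "__main__":
    aperture = 1e-4  # 1 cm^2, an illustrative value
    for f in (28e9, 300e9):
        g = aperture_gain_db(f, aperture)
        print(f / 1e9, "GHz", round(g, 1), "dB", round(approx_beamwidth_deg(g), 1), "deg")
```

The narrower the beam, the more beam positions a sweep has to cover, which is one reason beam management becomes a heavier MAC task.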

Using AI and ML in the MAC layer is expected to be very important in 6G, because networks will need to support applications that blend digital and real-world experiences. AI can help solve hard problems in the physical layer and make the MAC layer work better. As more private networks are deployed, solutions need to be tailored to each one, and ML helps with that. So, as we move to 6G, AI and ML will help us build better and more specialized communication tools.

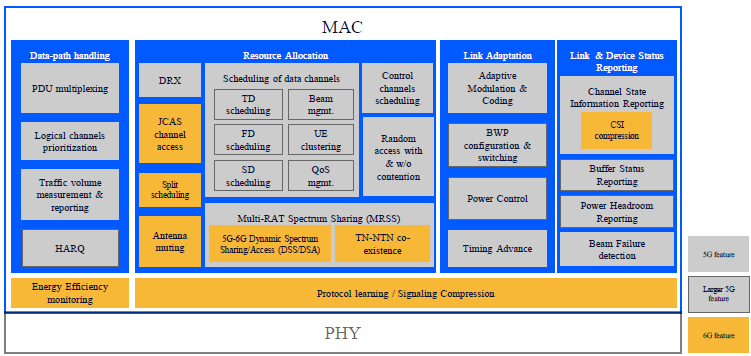

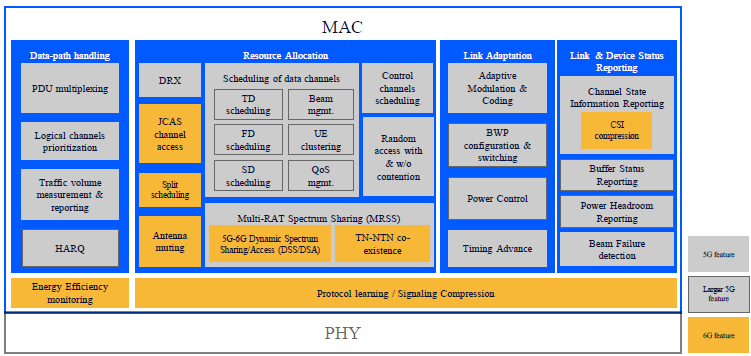

AI will be very important in the 6G MAC layer because 6G is more complicated, and AI/ML is better suited to handling such complexity. The MAC layer used to just help devices access wireless channels (wireless channel access control), but it now does more, such as deciding which devices get to use network resources (resource allocation), adjusting connections (link adaptation), and saving energy (energy efficiency). When 6G comes, the MAC layer will have even more new jobs, like checking energy use (energy monitoring) and making signaling more compact (signaling compression). More organizations will run their own networks (private wireless networks), so we need solutions that fit each deployment's needs. AI/ML is well suited to building these tailored models because it can learn and improve over time.

Source : The Role of AI on 6G MAC

Let's look into the various parts of the MAC layer and see how AI/ML can be applied and what the challenges are. The initial summary is mostly based on The Role of AI on 6G MAC, but I will keep adding other aspects as I investigate further.

Deep scheduling of data channels leverages AI to optimize how data is transmitted in networks. As networks evolve, especially with the onset of 6G, the complexity of managing data flow increases. Deep scheduling offers a solution, promising enhanced performance and adaptability compared to traditional methods.

Motivations :

- Traditional Packet Schedulers: These are typically divided into sub-modules for time, frequency, and spatial domains. They handle tasks like Time Domain scheduling (identifying high-priority UEs), Frequency Domain scheduling (mapping frequency resources), beam selection, and MU-MIMO pairing. These tasks are complex and lack optimal solutions, with most current solutions relying on shortcuts or heuristics.

- Improved Performance with AI: Deep scheduling, using AI, can enhance data transmission by understanding the nuances of different frequencies and adapting accordingly. This can lead to better user experiences.

- Complexity of 6G: The 6G era will introduce additional challenges for schedulers, such as managing network slicing, accommodating diverse QoS requirements, and co-scheduling 5G and 6G devices on shared carriers. AI techniques can help address these challenges.

- Intricate Dependencies: The interdependencies between various responsibilities in scheduling will challenge AI techniques to develop innovative packet scheduling methods.
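As a point of reference for the heuristics mentioned above, the following sketch shows proportional-fair (PF) selection, a widely used Time Domain heuristic. It is not taken from the source; the UE names, rates, and the smoothing factor `beta` are hypothetical.

```python
def pf_select(inst_rates, avg_rates, n_select=1):
    """Rank UEs by instantaneous rate / average served rate (the PF metric)."""
    metrics = {ue: inst_rates[ue] / max(avg_rates[ue], 1e-9) for ue in inst_rates}
    return sorted(metrics, key=metrics.get, reverse=True)[:n_select]


def update_avg(avg_rates, served, inst_rates, beta=0.1):
    """Exponentially smoothed average rate; unserved UEs decay toward zero."""
    return {
        ue: (1.0 - beta) * avg + beta * (inst_rates[ue] if ue in served else 0.0)
        for ue, avg in avg_rates.items()
    }


if __name__ == "__main__":
    inst = {"ue1": 10.0, "ue2": 4.0}
    avg = {"ue1": 10.0, "ue2": 2.0}
    chosen = pf_select(inst, avg)
    print(chosen)
    print(update_avg(avg, chosen, inst))
```

A deep scheduler would replace this fixed ranking rule with a learned one, while still facing the same per-TTI time budget.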

Challenges :

- Computational Complexity: Deep scheduling requires significant computational resources. Reducing this complexity is a challenge, especially when real-time decisions are needed.

- Handling Dynamic UE Numbers: The number of User Equipments (UEs) or devices in a network can change frequently. Deep scheduling needs to adapt to these dynamic changes efficiently.

- Ensuring Generalization: The deep scheduling models should be able to generalize well across different scenarios and not just the ones they were trained on.

- Incorporating Multimodal Decisions: Decisions in scheduling can span multiple modes like time (TD), frequency (FD), and space (spatial). Integrating these different modes into the deep scheduling process can be challenging.

- Convergence Issues: While some deep scheduling methods, like value-based ones, converge reasonably fast, others, especially policy-based schedulers, might converge slower or get stuck in local optima.

Candidate AI/ML Models :

- DDQN Scheduler: DDQN (Double Deep Q-Network) scheduler is a type of reinforcement learning model. The learning curve of this scheduler was compared with various baselines, and it showed potential improvements in user throughput.

- Neural Scheduler: A neural scheduler design has been reported to reduce CPU execution time per TTI compared to baselines, which suggests that neural networks are a practical option for scheduling tasks.

- Value-Based Deep Schedulers: These are prevalent and converge reasonably fast. They are based on estimating the value of different actions in a given state.

- Policy-Based Schedulers: These models learn a policy that directly maps states to actions, optimizing the scheduling decisions.
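For the DDQN entry above, the core idea can be sketched as the Double DQN target computation: the online network picks the next action and the target network evaluates it. The array shapes and the choice of NumPy are assumptions for illustration; the state and reward design of a real scheduler is not specified in the source.

```python
import numpy as np


def ddqn_targets(rewards, dones, q_online_next, q_target_next, gamma=0.99):
    """Double DQN targets for a batch.

    rewards, dones: shape (batch,)
    q_online_next, q_target_next: shape (batch, n_actions), Q-values of the
    next state from the online and target networks respectively.
    """
    # The online network selects the action ...
    best_actions = np.argmax(q_online_next, axis=1)
    # ... and the target network evaluates it.
    next_values = q_target_next[np.arange(len(best_actions)), best_actions]
    return rewards + gamma * (1.0 - dones) * next_values


if __name__ == "__main__":
    r = np.array([1.0, 0.0])
    d = np.array([0.0, 1.0])
    q_on = np.array([[1.0, 2.0], [3.0, 0.0]])
    q_tg = np.array([[10.0, 20.0], [30.0, 40.0]])
    print(ddqn_targets(r, d, q_on, q_tg, gamma=0.5))
```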

Motivations :

- Limited RACH Resources: The number of Random Access Channel (RACH) resources is restricted, making random access a contention-based process. ML can optimize the allocation of these limited resources by predicting the best times and conditions for UEs to access the RACH, thus reducing contention and improving efficiency.

- Preamble Collisions: In large massive Machine Type Communications (mMTC) networks, the chances of several UEs choosing the same preamble are high, leading to collisions. ML algorithms can be trained to detect and predict preamble collisions, allowing for dynamic adjustment and reallocation of preambles to reduce such collisions.

- Low-Latency Connectivity: With the rise of low-latency services like Extended Reality (XR) and volumetric video in 5G and 6G, resolving frequent contentions rapidly is crucial for maintaining expected low latencies. ML models can be trained to quickly resolve contentions, ensuring that devices get connected with minimal delay, essential for low-latency services.

- Classification Tasks: ML is inherently well suited to classification tasks. In the context of RACH, ML can classify and detect preambles, supporting accurate and efficient random access procedures.

Challenges :

- False Peaks Detection: The traditional approach to detecting Random Access (RA) preambles can lead to false peaks, especially under interference-limited conditions. While ML can enhance detection accuracy, it might also introduce false positives (false peaks) if not trained adequately, especially in noisy environments.

- Meeting URLLC Requirements: While some ML methods achieve high accuracy, they might not meet the Ultra-Reliable Low-Latency Communications (URLLC) requirements. Achieving the high accuracy and reliability standards set by URLLC using ML models can be challenging, especially in dynamic and unpredictable wireless environments.

- Computational Load: Some ML methods, while promising, come with increased computational loads, especially during training. Training and deploying ML models, especially deep learning models, can be computationally intensive. This can be a concern in real-time systems where quick decisions are paramount.

- Scalability: Ensuring that ML models generalize and scale across a wide range of conditions, including varying Signal-to-Noise Ratio (SNR) levels, is a challenge. A model trained for one set of conditions might not perform well under different conditions, necessitating continuous retraining and adaptation.
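To make the "traditional approach" and the false-peak problem more tangible, here is a minimal sketch of correlation-based preamble detection with a Zadoff-Chu sequence. The sequence length 139, root 7, the delay of 17 samples, and the detection threshold are illustrative assumptions, and the peak-to-mean test is a simplification of what a real receiver does.

```python
import numpy as np


def zadoff_chu(root, length):
    """Zadoff-Chu sequence of odd length."""
    n = np.arange(length)
    return np.exp(-1j * np.pi * root * n * (n + 1) / length)


def detect_preamble(rx, ref, threshold=20.0):
    """Circular correlation via FFT, then a peak-to-mean power test.

    Returns (detected, lag_of_strongest_peak).
    """
    corr = np.fft.ifft(np.fft.fft(rx) * np.conj(np.fft.fft(ref)))
    power = np.abs(corr) ** 2
    peak_lag = int(np.argmax(power))
    ratio = power[peak_lag] / (power.mean() + 1e-12)
    return bool(ratio > threshold), peak_lag


if __name__ == "__main__":
    ref = zadoff_chu(root=7, length=139)
    rng = np.random.default_rng(0)
    noise = 0.1 * (rng.standard_normal(139) + 1j * rng.standard_normal(139))
    rx = np.roll(ref, 17) + noise  # preamble delayed by 17 samples
    print(detect_preamble(rx, ref))
```

Lowering the threshold raises sensitivity at low SNR but makes noise or interference more likely to produce a false peak. That trade-off is what ML-based detectors try to improve.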

Candidate AI/ML Models :

- Convolutional Networks: These deep learning models, typically used for analyzing visual imagery, have shown promising results in preamble and Timing Advance (TA) detection. They demonstrate good performance and scalability across a wide Signal-to-Noise Ratio (SNR) range.

- Fully Connected Neural Networks (FCNNs): Networks where each neuron in one layer is connected to all neurons in the next layer. FCNNs with two hidden layers have achieved high accuracy in blind preamble detection and TA value detection.

- Decision Tree Classification (DTC): This is a supervised learning method used for classification. It's mentioned as a technique explored for RA preamble detection.

- Naive Bayes: A probabilistic classifier based on applying Bayes' theorem. It's been considered for RA preamble detection.

- K-nearest neighbor: A type of instance-based learning used for classification and regression. It's been explored for RA preamble detection.

- Bagged Decision Tree Ensembles: Ensemble methods, which combine multiple models to produce better predictive performance than could be obtained from any of the constituent models alone, have been explored for RA preamble detection.

Motivations :

- Complexity of AMC: Adaptive Modulation and Coding (AMC) is a vital MAC function that selects the best Modulation and Coding Scheme (MCS) by considering channel estimates, data size, and system constraints. Traditional methods like Inner Loop Link Adaptation (ILLA) use lookup tables for MCS selection, and Outer Loop Link Adaptation (OLLA) corrects it by offsetting SINR predictions to meet a target Block Error Rate (BLER). Machine Learning can simplify AMC by dynamically predicting the optimal MCS based on real-time channel conditions, user requirements, and system constraints. This dynamic prediction can lead to more efficient and accurate MCS selection compared to traditional methods.

- Enhancing Existing AMC Algorithms: There's a need to improve existing AMC algorithms, especially when the BLER target is below 10%, as in Ultra-Reliable Low-Latency Communications (URLLC) scenarios. ML can offer dynamic training and optimization for these algorithms. Traditional AMC algorithms might not be adaptive enough to cater to diverse and dynamic 6G scenarios. ML models can be trained on vast datasets, capturing various scenarios and conditions, allowing them to adapt and optimize AMC algorithms in real-time. For instance, ML can dynamically adjust hyperparameters in algorithms like OLLA to enhance performance.
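The ILLA/OLLA structure described above can be sketched in a few lines. The SINR-to-MCS thresholds below are hypothetical placeholders rather than a standardized table. The step rule (ACK step = NACK step × BLER target / (1 − BLER target)) is the commonly used OLLA update, stated here as an assumption rather than as the method of any particular product.

```python
# Hypothetical (SINR threshold in dB, MCS index) pairs, sorted by threshold.
MCS_THRESHOLDS_DB = [(-5.0, 0), (0.0, 4), (5.0, 9), (10.0, 15), (15.0, 20), (20.0, 27)]


def illa_select_mcs(sinr_db):
    """Inner loop: highest MCS whose SINR threshold is met (lookup table)."""
    mcs = 0
    for threshold, index in MCS_THRESHOLDS_DB:
        if sinr_db >= threshold:
            mcs = index
    return mcs


class Olla:
    """Outer loop: backs off the SINR estimate after NACKs, relaxes after ACKs."""

    def __init__(self, bler_target=0.1, step_nack_db=0.5):
        self.offset_db = 0.0
        self.step_nack_db = step_nack_db
        # Steps are balanced so the offset is stable at the target BLER.
        self.step_ack_db = step_nack_db * bler_target / (1.0 - bler_target)

    def update(self, ack):
        if ack:
            self.offset_db -= self.step_ack_db
        else:
            self.offset_db += self.step_nack_db
        return self.offset_db

    def select_mcs(self, sinr_db):
        return illa_select_mcs(sinr_db - self.offset_db)


if __name__ == "__main__":
    olla = Olla()
    print(olla.select_mcs(10.2))  # before any feedback
    olla.update(ack=False)        # one NACK pushes the offset up
    print(olla.select_mcs(10.2))
```

The hyperparameters that an ML layer could tune here are `bler_target` and `step_nack_db`, which is the kind of adjustment the text refers to.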

Challenges :

- Balancing Transmissions: The challenge lies in balancing aggressive transmissions for spectral efficiency against conservative transmissions for latency and BLER. While ML can predict the best transmission strategy, it might struggle with sudden and unpredictable changes in the network environment. Training ML models to balance transmissions requires vast and diverse datasets, and even then, real-world scenarios can introduce unforeseen challenges that the model hasn't been trained on.

- Meeting Specific BLER Targets: While some AMC algorithms can serve eMBB traffic with a target BLER of 10%, challenges arise when the BLER target is below 10%. Achieving very low BLER targets using ML models can be challenging due to the non-linear and dynamic nature of wireless channels. The model might overfit to specific scenarios during training and fail to generalize well in diverse real-world conditions. Additionally, real-time adjustments to meet stringent BLER targets can introduce latency, especially if the ML model is computationally intensive.

- Preserving Traditional Structures: While introducing ML-based enhancements, there's a need to preserve the traditional ILLA/OLLA structure for AMC. Integrating ML enhancements while preserving traditional structures like ILLA/OLLA can be complex. There's a risk of compatibility issues, where the ML model might conflict with or override the traditional mechanisms. Ensuring seamless integration without causing disruptions or inefficiencies is a significant challenge.

Candidate AI/ML Models :

- Differentiable Computation Graph: Introduced to train OLLA hyper-parameters dynamically while preserving the traditional ILLA/OLLA structure. Because the parameters are optimized through a differentiable graph, the approach is suitable for gradient-based optimization techniques.

- Deep Reinforcement Learning (RL): Explored for MCS selection policies in real-time scenarios. This approach allows the model to learn optimal strategies through interaction with the environment. Specific models like lightweight actor-critic and Proximal Policy Optimization (PPO) have been trained for this purpose.

Motivations :

- Optimization of Open Loop Power Control (OLPC): Traditional methods like trial-and-error are often suboptimal. ML can fine-tune cell-specific parameters like P0 (the target received power) and α (the path loss compensation factor) for better performance. Some AI/ML algorithms (e.g., Bayesian Optimization with Gaussian Processes (BOGP)) can approximate Key Performance Indicators (KPIs) in the (P0, α) space, converging in fewer samples and reducing outage risks.

- Adaptation to Different Scenarios: In 6G verticals like mmWave deployments, frequent adaptation of power control settings may be required. Some AI/ML algorithms (e.g., Multi-Agent Reinforcement Learning (MARL)) can adapt power control settings in real-time, especially in dynamic environments.
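The role of P0 and α is easier to see in the open-loop power rule itself. The sketch below uses a simplified form, P = min(Pmax, P0 + 10·log10(M) + α·PL), which omits the closed-loop correction and other terms of the full uplink power control formula. The 23 dBm power cap and the example values are assumptions.

```python
import math


def olpc_tx_power_dbm(p0_dbm, alpha, pathloss_db, n_rb=1, p_max_dbm=23.0):
    """Simplified open-loop uplink transmit power in dBm.

    p0_dbm:      target received power per resource block
    alpha:       fractional path loss compensation factor, 0..1
    pathloss_db: estimated downlink path loss
    n_rb:        number of allocated resource blocks
    """
    wanted = p0_dbm + 10.0 * math.log10(n_rb) + alpha * pathloss_db
    return min(p_max_dbm, wanted)


if __name__ == "__main__":
    # Cell-centre UE vs. cell-edge UE with the same (P0, alpha) setting.
    print(olpc_tx_power_dbm(-90.0, 0.8, 100.0, n_rb=10))
    print(olpc_tx_power_dbm(-90.0, 0.8, 140.0, n_rb=10))
```

An optimizer such as BOGP would treat `(p0_dbm, alpha)` as the search space and a cell-level KPI as the objective. The risk noted in the challenges is that a poorly chosen point leaves cell-edge UEs power-limited.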

Challenges :

- Connectivity Risks: Exploring new P0,α values can risk network connectivity. While BOGP converges quickly, it still carries the risk of making suboptimal choices that could affect connectivity.

- Interference from Neighboring Base Stations: Independent ML solutions can face convergence issues due to non-stationarity. ML agents might compete against each other, leading to unstable learning. Centralized or cooperative learning is required to overcome this.

- Complexity of Multi-Agent Systems: Using MARL introduces the complexity of multi-agent optimization. Ensuring that all agents in a MARL system converge to a stable solution is challenging, requiring sophisticated coordination mechanisms.

Candidate AI/ML Models :

- Bayesian Optimization with Gaussian Processes (BOGP): Ideal for optimizing Open Loop Power Control (OLPC) parameters like P0 and α. It converges quickly and reduces the risk of network outages.

- Multi-Agent Reinforcement Learning (MARL): Suitable for dynamic environments where frequent adaptation of power control settings is required. Algorithms like Q-Learning and Deep Q-Networks (DQN) could be adapted for MARL.

Motivations :

- Adaptability to Network Types: Different deployments like sensor networks or high-capacity networks have unique requirements, like reduced-size MAC uplink PDU headers in low-traffic networks, conserving energy. Machine Learning (ML) techniques offer the flexibility to tailor Layer 2 (L2) signaling to specific network deployments.

- Customization for Traffic Types: Different types of data traffic, such as those generated by Indoor Factory machines or Small Office Home Office setups, have different requirements. ML can help in crafting protocols that are more adaptive to the type of traffic in the network.

Challenges :

- Large Signaling Space: The large signaling space makes most Multi-Agent Reinforcement Learning (MARL) approaches impractical. The challenge lies in efficiently navigating this large space, which ML techniques like state abstraction and Multi-Agent Proximal Policy Optimization (MAPPO) aim to solve.

- Complexity of Scenarios: Different scenarios like urban, rural, indoors/outdoors have their own unique requirements. The challenge is to develop ML models that can adapt to these diverse conditions.

- Signaling Overhead: The control plane often exchanges large amounts of signaling, such as channel matrices. Reducing this overhead while maintaining performance is a challenge that ML aims to address.

Candidate AI/ML Models :

- Multi-Agent Reinforcement Learning (MARL): Useful for joint learning between base stations and user equipment for new Layer 2 (L2) signaling and access policies.

- Multi-Agent Proximal Policy Optimization (MAPPO): This is an extension of Proximal Policy Optimization and is particularly useful for navigating the large signaling space in a more efficient manner.

Motivations :

- Efficiency in Compression: Traditional methods like 5G NR Type I and II codebooks are outperformed by ML-based techniques like bi-LSTM networks in terms of channel eigenvector compressibility. Such techniques can offer more efficient CSI compression, reducing overhead.

- Model Size and Real-time Deployment: Real-time hardware deployment needs smaller model sizes. Transformer-based autoencoders are more efficient in terms of parameters, which makes them good candidates for real-time hardware deployment.

- Channel Matrix Reconstruction and Bitrate Performance: Any CSI encoding mechanism must outperform current methods in these metrics to be standardized. ML models like Transformers have shown gains in channel matrix reconstruction and bitrate performance, making them candidates for standardization.

Challenges :

- Standardization: The industry has to reach a consensus on the advantages of ML-based methods for them to be standardized. Achieving industry-wide acceptance for ML methods is a challenge, given the variety of stakeholders.

- Model Management and Data Collection: Rules for model sizes, training methods, and data collection techniques need to be standardized. Managing and maintaining ML models in a standardized way across different vendors and technologies is complex.

- Performance Metrics: Clear gains in terms of channel matrix reconstruction error and bitrate performance must be demonstrated. Quantifying and proving the advantages of ML over traditional methods in these specific metrics is challenging.

Candidate AI/ML Models :

- Bi-Directional Long Short-Term Memory (Bi-LSTM) Networks: Effective for capturing the temporal dependencies in the channel state information, offering better compression.

- Transformer-based Autoencoders: Known for their efficiency in parameter usage, these models can offer better channel matrix reconstruction and are suitable for real-time hardware deployment.

- Convolutional Neural Networks (CNNs): Useful for capturing spatial correlations in MIMO systems, potentially reducing the complexity of CSI feedback.

- Principal Component Analysis (PCA): A statistical method that can be used for dimensionality reduction, making it easier to compress CSI.
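As a minimal illustration of the PCA entry above, the following sketch compresses synthetic CSI vectors onto a few principal components and measures the reconstruction error as NMSE. The data is randomly generated with a low-rank structure, so the dimensions and the perfect reconstruction at full rank are properties of this toy setup, not of real channels.

```python
import numpy as np


def fit_pca_basis(csi_samples, n_components):
    """Learn a mean and an orthonormal basis from rows of CSI vectors."""
    mean = csi_samples.mean(axis=0)
    _, _, vh = np.linalg.svd(csi_samples - mean, full_matrices=False)
    return mean, vh[:n_components]


def compress(csi, mean, basis):
    """Project CSI onto the basis: this is what would be fed back."""
    return (csi - mean) @ basis.conj().T


def reconstruct(code, mean, basis):
    """Rebuild the CSI estimate from the fed-back coefficients."""
    return code @ basis + mean


def nmse(original, estimate):
    """Normalized mean squared reconstruction error."""
    return float(np.sum(np.abs(original - estimate) ** 2) / np.sum(np.abs(original) ** 2))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Synthetic CSI: 200 samples of 32 coefficients driven by 4 latent factors.
    latent = rng.standard_normal((200, 4)) + 1j * rng.standard_normal((200, 4))
    mixing = rng.standard_normal((4, 32)) + 1j * rng.standard_normal((4, 32))
    csi = latent @ mixing
    for k in (2, 4):
        mean, basis = fit_pca_basis(csi, k)
        est = reconstruct(compress(csi, mean, basis), mean, basis)
        print(k, nmse(csi, est))
```

The learned models in the list play the same encoder/decoder roles as `compress` and `reconstruct`, but with non-linear mappings.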

Motivations :

- Latency Minimization: HARQ transmitters wait for Acknowledgements (ACKs) with parallel Stop-And-Wait (SAW) processes to minimize latency. ML can help in ACK prediction, reducing the time spent waiting for acknowledgments.

- Transport Block Sizes (TBSs) and Code Block Groups (CBGs): 6G will have increased TBSs and more CBGs will need to be accommodated. ML can quickly converge to the target Block Error Rate (BLER), aiding in efficient HARQ signaling.

- Decoding Result Prediction: Predicting the decoding result before the transmission ends allows for faster retransmissions. ML models can predict decoding outcomes, reducing HARQ feedback latency.

- Device Diversity: 6G will service a diverse range of devices, introducing MAC asymmetries in the Tx-Rx capacities. Techniques like Compressed Error HARQ can be enhanced by ML to adapt to different device types.
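The latency argument for parallel stop-and-wait processes and for early feedback prediction can be sketched with simple arithmetic. The model below is idealized: the round-trip time is counted in whole TTIs and each process carries one transport block per round trip. The function names and example numbers are hypothetical.

```python
import math


def saw_processes_needed(rtt_ttis):
    """Parallel stop-and-wait processes needed to transmit in every TTI."""
    return max(1, math.ceil(rtt_ttis))


def link_utilization(n_processes, rtt_ttis):
    """Fraction of TTIs usable when each process waits one RTT for feedback."""
    return min(1.0, n_processes / max(rtt_ttis, 1))


def rtt_with_prediction(rtt_ttis, ttis_saved):
    """RTT if feedback is predicted ttis_saved TTIs before decoding completes."""
    return max(1, rtt_ttis - ttis_saved)


if __name__ == "__main__":
    rtt = 8
    print(saw_processes_needed(rtt))
    print(link_utilization(4, rtt))
    print(link_utilization(4, rtt_with_prediction(rtt, 4)))
```

In this toy model, predicting the decoding result early shortens the effective round trip, so fewer parallel processes are needed to keep the link busy.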

Challenges :

- Channel Uncertainty: ML cannot fully compensate for channel uncertainty, affecting the reliability of HARQ. Creating models that can adapt to channel variations is challenging.

- Feedback Codes for Specific Scenarios: Feedback codes need to be tailor-made for specific deployment scenarios, and customizing ML models for diverse and specific network conditions can be complex.

- Time Correlations in Feedback Signals: Discovering hidden time correlations in the feedback signals could reduce Layer 2 (L2) overhead, but implementing deep attention mechanisms to capture these correlations is computationally intensive.

Candidate AI/ML Models :

- Deep Attention Mechanisms: These could be employed to focus on the most relevant parts of the signal or feedback for HARQ processes, reducing computational overhead.

- Deep Autoregressive Attention-Based Models: These models are particularly useful for capturing complex dependencies in the data. They can be employed to discover hidden time correlations in the HARQ feedback signals, potentially reducing Layer 2 (L2) overhead.
