|

Engineering Math - Matrix |

|||||||||||||||

|

LU Decomposition

LU decomposition is a method to split (decompose) a matrix into two matrix product. One of these two matrix(the first part) is called 'L' matrix meaning 'Lower diagonal matrix' and the other matrix(the second part) is called 'U' matrix meaning 'Upper diagonal matrix'. Simply put, LU decomposition is a process to convert a Matrix A into the product of L and U as shown below.

A = LU

A lower triangular matrix is a matrix where all the elements above the main diagonal (top left to bottom right) are zero. An upper triangular matrix is a matrix where all the elements below the main diagonal are zero.

I would not explain about how you can decompose a matrix into LU form. The answer is "Use software" -:). I would talk about WHY.

In this section I want to show you an example of how the LU decomposed matrix is applied for a case of solving a linear equation (a linear simultaneous equation)

LU decomposition is used to solve a system of linear equations by factoring a square matrix A into a lower triangular matrix L and an upper triangular matrix U. Given a system of linear equations in the form Ax = b, where A is a square matrix, x is the column vector of unknowns, and b is the column vector of constants, the LU decomposition allows us to solve this system more efficiently.

By breaking down the original system of linear equations into two triangular systems and solving them with forward and back substitution, the LU decomposition allows us to solve the system of simultaneous linear equations efficiently. This method is particularly useful when dealing with large systems of linear equations or when solving multiple systems with the same coefficient matrix A but different constant vectors b.

Here's how the LU decomposition can be applied for solving a system of simultaneous linear equations:

Step 1: Decompose the matrix A into L and U

Perform the LU decomposition to factor the given square matrix A into a lower triangular matrix L and an upper triangular matrix U, such that A = LU.

Step 2: Solve for an intermediate vector y using forward substitution:

Since A = LU, we can rewrite the system of equations as LUx = b. Now, let Ux = y. This gives us the new system of equations Ly = b.

Because L is a lower triangular matrix, we can use forward substitution to solve for y. Start with the first row of L and solve for y1, then move to the second row and solve for y2, and so on until you reach the last row and solve for yn. This process is computationally efficient, as it requires only one division and one multiplication for each row.

Step 3: Solve for the unknown vector x using back substitution:

Now that we have the intermediate vector y, we can solve the system Ux = y for the unknown vector x. Since U is an upper triangular matrix, we can use back substitution to solve for x. Start with the last row of U and solve for xn, then move to the second-to-last row and solve for xn-1, and so on until you reach the first row and solve for x1. This process is also computationally efficient, as it requires only one division and one multiplication for each row.

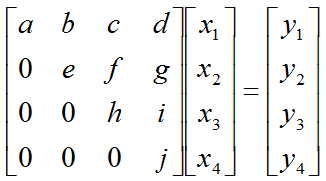

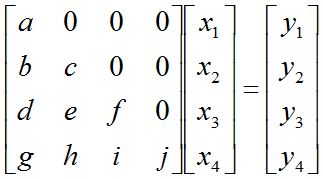

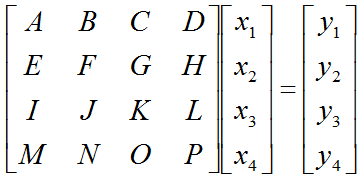

Let's assume that we have a matrix equation (Linear Equation) as shown below.

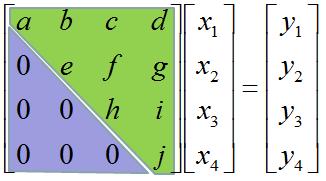

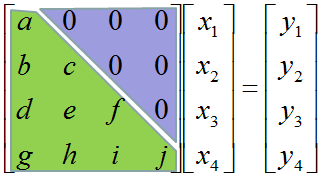

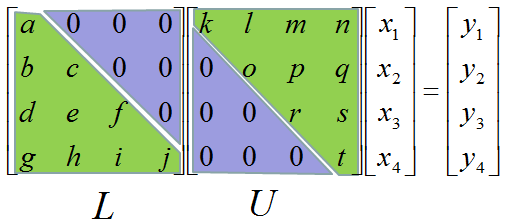

Don't look into each elements yet. Just get around 100 steps back away from this and look at the overall pattern of the matrix. Can you see a pattern as shown below ? You see two area marked as triangles. Green triangle shows all the elements from diagonal line and upper diagonal part. The elements in this triangle is non-zero values. Violet triangle shows the elements of lower diagonal part which is all zero.

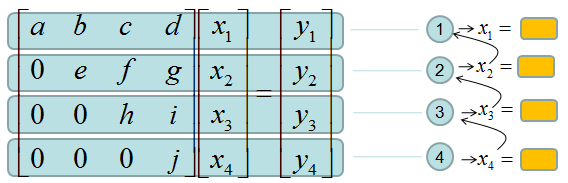

This form of matrix is called 'U' form matrix, meaning 'Upper diagonal matrix'. Why this is so special ? It is special because it is so easy to solve the equation. Now let's think about how we can solve this equation. Let's look the last row (4th row in this case). How can I figure out the value for x4 ? You would get it right away because there is only x4 term and j and y4 are known values. Once you get x4 and plug in the x4 value into row 3, then you would get x3 value. Once you get x3 and plug in the x3 value into row 2, then you would get x2 value. Once you get x2 and plug in the x2 value into row 1, then you would get x1 value.

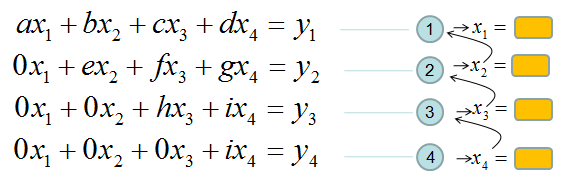

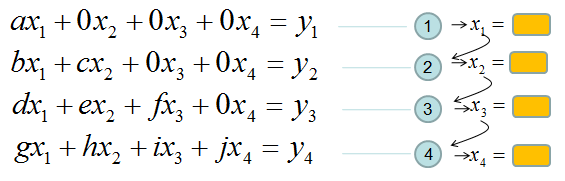

If it is not clear with you, it would be a little clearer if you convert the matrix equation into simultaneous equation as shown below. Start from the last equation and get x4 first and repeat the process described above.

If this process is still unclear,just play with real numbers. Put any numbers for a,b,c,d,e,f,g,h,i,y1,y2,y3,y4 and try to get x1,x2,x3,x4 as explained above.

If you tried this as explained, one thing you would notice would be "It is so simple to find the solution x1,x2,x3,x4". Just compare this process with what you experienced with general matrix equation solving process you did in your high school math or linear algebra class.

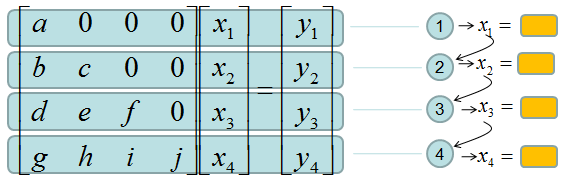

Now let's look into another example of matrix equation as shown below.

Now you would know what I will say. In this case, all the elements along the diagonal line and Lower part of diagonal line is non-zero values. All the values above the diagonal line is all zero. This form is called 'L' form meaning 'Lower diagonal matrix'.

Why this is so special ?

It is special because it is so easy to solve the equation. Now let's think about how we can solve this equation. Let's look the first row (the first row in this case). How can I figure out the value for x1 ? You would get it right away because there is only x1 term and a and y1 are known values. Once you get x1 and plug in the x1 value into row 2, then you would get x2 value. Once you get x2 and plug in the x3 value into row 3, then you would get x3 value. Once you get x3 and plug in the x2 value into row 4, then you would get x4 value.

If it is not clear with you, it would be a little clearer if you convert the matrix equation into simultaneous equation as shown below. Start from the first equation and get x1 first and repeat the process described above.

If this process is still unclear,just play with real numbers. Put any numbers for a,b,c,d,e,f,g,h,i,y1,y2,y3,y4 and try to get x1,x2,x3,x4 as explained above.

If you tried this as explained, one thing you would notice would be "It is so simple to find the solution x1,x2,x3,x4". Just compare this process with what you experienced with general matrix equation solving process you did in your high school math or linear algebra class.

Now let's suppose we have a matrix as shown below. Here all the elements in the matrix is non-zero values.

Now let's assume we can convert this matrix equation into following form. (Don't worry about HOW, just assume you can do this somehow). You already know that this form would make it easier to solve the equation.

Try this example that I found from web. (If the link is missing, try here).

Now you may say.. "You always say 'don't worry about how to solve. just use computer software'". If I use the computer software, why do I have to worry about this kind of conversion. Computer software would not have any problem to solve the equation even with the original form without LU decomposition. You are right, the computer program would not have any problem with original form. But the amount of time for the calculation is much shorter with LU decomposed form than doing the same thing in the original form. When the size of matrix is small, this time difference would be negligiable, but if the size is very huge (e.g, 10000 x 10000) the time difference would be very huge. If you've done a computer science, you would be familiar with Big O notation to evaluate the computation time. Try compare the computation time for the original matrix form and LU decomposed form. If you are not familiar with this notation, just trust me -:). Following two YouTube tutorial would give you some insight of LU decomposition including computation time.

Application of LU Decomposition

LU decomposition has several applications in various fields, such as engineering, computer science, physics, and economics. Some common applications include:

Solving systems of linear equations:

A system of linear equations can be represented as Ax = b, where A is a matrix, x is a column vector of unknowns, and b is a column vector of constants. LU decomposition can simplify solving such systems by breaking down matrix A into L and U. Once we have the LU decomposition, we can solve the system in two steps: a) Forward substitution: Solve Ly = b for y. b) Back substitution: Solve Ux = y for x.

The advantage of this approach is that triangular matrices are much easier to work with when solving linear systems compared to the original matrix A.

Computing determinants:

Finding the determinant of a matrix is an important operation in linear algebra. Once a matrix A is decomposed into L and U, the determinant of A can be found as the product of the diagonal elements of L and U. This is because the determinant of a triangular matrix is equal to the product of its diagonal elements, and det(A) = det(L) * det(U).

Finding matrix inverses:

The inverse of a matrix A, denoted A^(-1), is used to solve systems of linear equations and perform various calculations. Using LU decomposition, we can efficiently compute the inverse of A. If A = LU, then A^(-1) = U^(-1) * L^(-1). We can find the inverse of L and U separately using forward and back substitution and then multiply them to obtain A^(-1).

Numerical stability and efficiency:

In certain cases, the LU decomposition with partial pivoting can help improve the numerical stability of calculations. Partial pivoting involves reordering the rows of the matrix A to ensure that the largest element (in absolute value) in each column is on the diagonal. This can help reduce the effects of round-off errors and improve the accuracy of calculations.

Eigenvalue and eigenvector computations:

In some algorithms for finding eigenvalues and eigenvectors of a matrix, LU decomposition is used as an intermediate step. These algorithms, such as the QR algorithm, can benefit from the efficiency and numerical stability provided by LU decomposition.

I understand that it would be beneficial to use in various way once we have L and U components of a matrix. But doesn't it take time and effort to decompose a matrix into L and U components ? How would you compare the benefit with overhead ?

Good point. There is a computational cost associated with decomposing a matrix into its L and U components. The process of LU decomposition generally involves Gaussian elimination or similar algorithms, which have a time complexity of O(n^3) for an n x n matrix. This means that as the size of the matrix grows, the time required to perform the decomposition increases significantly.

The benefits of using LU decomposition depend on the specific problem and the size of the matrices involved. For small matrices or one-time calculations, the overhead of LU decomposition might not be justifiable. However, for larger matrices or cases where the decomposition can be reused for multiple calculations, the benefits can outweigh the overhead, making LU decomposition a valuable tool in linear algebra.

However, the benefits of LU decomposition often outweigh the overhead, especially when solving large systems of linear equations, calculating determinants, or finding matrix inverses. Here's why:

Reusability: Once a matrix A is decomposed into L and U, the decomposition can be reused for solving multiple linear systems with the same matrix A but different right-hand side vectors (b). In such cases, the time spent on LU decomposition is a one-time cost, and subsequent linear system solutions become much faster using forward and back substitution.

Efficiency: When solving a linear system with LU decomposition, triangular matrices (L and U) are significantly easier to work with than the original matrix A. Forward and back substitution methods used for solving triangular systems have a time complexity of O(n^2), which is more efficient than solving the original system directly using methods like Gaussian elimination.

Numerical stability: In certain cases, LU decomposition with partial pivoting can help improve the numerical stability of calculations, reducing the effects of round-off errors and improving the accuracy of results.

Is there any rule of thumb in terms of a threshold of a matrix size with the cost outweighing the benefit ?

Good Question. There isn't a universal rule of thumb for a threshold matrix size where the benefits of LU decomposition consistently outweigh the costs, as the trade-offs depend on the specific problem and computational resources available. However, some general guidelines can be helpful when deciding whether to use LU decomposition:

Small matrices: For very small matrices (e.g., 2x2 or 3x3), the overhead of LU decomposition might not provide significant benefits, as direct methods like Gaussian elimination or Cramer's rule can efficiently solve linear systems or calculate determinants and inverses.

Reusability: If you need to solve multiple linear systems with the same matrix A but different right-hand side vectors (b), LU decomposition becomes more advantageous. The decomposition is a one-time cost, and solving subsequent linear systems using forward and back substitution is faster. In such cases, even for moderately sized matrices (e.g., 10x10), LU decomposition can be beneficial.

Large matrices: As the size of the matrix grows, the computational cost of LU decomposition and direct methods like Gaussian elimination both increase. However, the benefits of using LU decomposition may become more pronounced, particularly for solving linear systems, due to the efficiency of forward and back substitution with triangular matrices. For matrices larger than 100x100, iterative methods like the Conjugate Gradient method or GMRES may be more suitable, depending on the properties of the matrix.

Numerical stability: If the problem you're working on requires high numerical stability or involves ill-conditioned matrices, LU decomposition with partial pivoting can be a better option than direct methods, even for moderate-sized matrices.

Computational resources: The available computational resources (e.g., memory, processing power) can also influence the decision to use LU decomposition. For limited-resource environments, the benefits of LU decomposition might be more pronounced due to its efficient handling of large matrices.

There are some limitations and restrictions associated with LU decomposition:

Square matrices: LU decomposition is applicable only to square matrices (i.e., matrices with an equal number of rows and columns).

Existence of LU decomposition: Not all square matrices have an LU decomposition. A matrix must be invertible and not have any zero elements along its main diagonal for LU decomposition to exist without pivoting. When a matrix has zero elements along its main diagonal or is singular (non-invertible), partial or complete pivoting is required to make LU decomposition possible.

Partial and complete pivoting: To handle situations where a matrix has zero elements along its main diagonal or is singular, partial or complete pivoting is used. In partial pivoting, rows are interchanged to ensure that the largest element in the current column is on the main diagonal. In complete pivoting, both rows and columns are interchanged to achieve the same goal. Pivoting can introduce additional computational complexity and can sometimes lead to less accurate results due to rounding errors in numerical calculations.

Numerical stability: LU decomposition can be numerically unstable for ill-conditioned matrices (matrices with a large condition number). In such cases, small errors in the input data can lead to large errors in the output. Alternative methods like QR decomposition or using iterative techniques may be more suitable for solving linear systems with ill-conditioned matrices.

Sparse matrices: LU decomposition may not be the most efficient method for sparse matrices (matrices with a majority of zero elements) since it can lead to fill-in, where many of the zero elements become non-zero during the decomposition process. This can result in increased memory requirements and computation time. For sparse matrices, specialized decomposition techniques like Cholesky decomposition (for positive definite matrices) or iterative methods may be more efficient.

Parallelization and scalability: LU decomposition can be computationally expensive for large matrices. While there are parallel algorithms for LU decomposition, achieving high performance and good scalability on parallel architectures can be challenging due to the dependencies between various steps in the algorithm.

Alternative methods and advantage over LU decomposition

There are alternative decomposition methods that can help overcome some of the limitations associated with LU decomposition. The choice of a suitable decomposition method depends on the specific problem and the properties of the matrix involved and not easy to have single and clear cut criteria. But we may try to come up with rule of thumb based comparitive table as below.

|

|||||||||||||||