
Infrastructure for AI/ML?

Today's world is rapidly changing because of Artificial Intelligence (AI) and Machine Learning (ML). But what makes these technologies work so well? It's not just smart computer programs or huge amounts of data. It's also the powerful infrastructure set up to run them: specialized computers, data storage, and networks that connect everything and let it work together. In this blog, we'll explore the essential parts that make AI and ML possible and look at how they work together to turn ideas into real tools that can do amazing things. Whether you're new to AI and ML or just curious about how they work, this note will help you understand the setup that makes it all happen.

NOTE: This note focuses on large-scale AI/ML systems such as AI/ML data centers, not on small or personal setups running on a few PCs and GPU cards.

Components of the Infrastructure

Let's look at the important parts of a big AI data center, like the ones Big Tech companies use for their AI work. These data centers need many different pieces to work well and keep everything running without problems. In this part, we will talk about the main pieces that make up these big data centers: the computers that do the work, how data is stored, how everything is kept cool, and how it all stays connected. The goal is to make it easy to understand how these parts work together to help AI and ML technologies do amazing things.

The following is a short list of components that most of these data centers have:

- Server Racks and Enclosures - Houses and organizes servers efficiently.

- Security Systems - Surveillance cameras, biometric access, and secured entry points.

- Fire Suppression Systems - Detects and extinguishes fires without damaging equipment.

- Cabling Infrastructure - Organized cabling for power and network connectivity.

- Servers - Core processing units for AI and computing tasks.

    - CPU-Intensive Servers - Handle general computing and processing workloads.

    - GPU Servers - Optimized for AI/ML computations and deep learning.

    - Storage Servers - Dedicated to managing and storing data.

- Storage Systems - Manages vast amounts of data.

    - SSDs & HDDs - Solid-state and hard disk drives for storage needs.

    - Data Storage Solutions - Includes SAN (Storage Area Network) and NAS (Network Attached Storage).

- Networking Equipment - Manages data flow and connectivity.

    - Routers - Direct data packets between networks.

    - Switches - Connect devices within the data center.

    - Firewalls - Protect the network from unauthorized access.

    - Load Balancers - Distribute traffic to optimize performance.

- Cooling Systems - Regulates temperature and prevents overheating.

    - In-Row Cooling - Cooling units placed between server racks in a row, close to the heat source.

    - Liquid Cooling - Uses liquid (for example, direct-to-chip cold plates or immersion) to carry heat away.

    - Traditional HVAC - Air conditioning for temperature regulation.

- Power Supply Systems - Ensures continuous power availability.

    - Uninterruptible Power Supplies (UPS) - Provide short-term backup power during outages.

    - Backup Generators - Long-term power support in case of extended outages.

- Management Software - Monitors and controls data center operations.

    - Performance Monitoring - Tracks server and network performance.

    - Power & Environmental Tracking - Monitors energy usage and climate conditions.

    - Data Center Infrastructure Management (DCIM) - Optimizes IT and facility operations.

- Cloud Integration - Connects with cloud services for scalable computing.

- Environmental Controls - Monitors data center conditions.

    - Temperature - Ensures servers remain within their optimal operating range.

    - Humidity - Prevents excess moisture or dryness.

    - Airflow - Maintains adequate ventilation.

- Energy Efficiency Solutions - Reduce power consumption.

    - Energy-Efficient Lighting - Uses LED and automated lighting systems.

    - Power Usage Effectiveness (PUE) Optimization - Lowers PUE, the ratio of total facility energy to IT equipment energy (1.0 would mean zero overhead).

    - Renewable Energy - Integrates solar or wind power sources.

- Disaster Recovery Systems - Ensures business continuity during failures.

    - Data Backup - Regular backups to prevent data loss.

    - Recovery Strategies - Plans for restoring operations in case of a disaster.
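PUE, listed under energy efficiency above, is simple arithmetic: total facility energy divided by the energy that actually reaches the IT equipment. Below is a minimal Python sketch of that ratio. The function names and the monthly readings are hypothetical illustration values, not figures from any real facility.

```python
# Minimal sketch: Power Usage Effectiveness (PUE) from metered energy readings.
# PUE = total facility energy / IT equipment energy (1.0 would mean zero overhead).
# The readings in the example are hypothetical, not real measurements.


def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Return PUE for one metering period (both inputs in kWh)."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    if total_facility_kwh < it_equipment_kwh:
        raise ValueError("total facility energy cannot be below IT energy")
    return total_facility_kwh / it_equipment_kwh


def overhead_kwh(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Energy spent on non-IT load such as cooling, power conversion losses, lighting."""
    return total_facility_kwh - it_equipment_kwh


if __name__ == "__main__":
    # Hypothetical month: 1,500 MWh drawn by the site, 1,200 MWh reaching the IT gear.
    total, it = 1_500_000.0, 1_200_000.0
    print(f"PUE = {pue(total, it):.2f}")                    # PUE = 1.25
    print(f"Overhead = {overhead_kwh(total, it):,.0f} kWh")  # Overhead = 300,000 kWh
```

Every point of PUE above 1.0 is energy that goes to cooling, power conversion and lighting instead of computation, which is why cooling and power design show up so prominently in the list.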

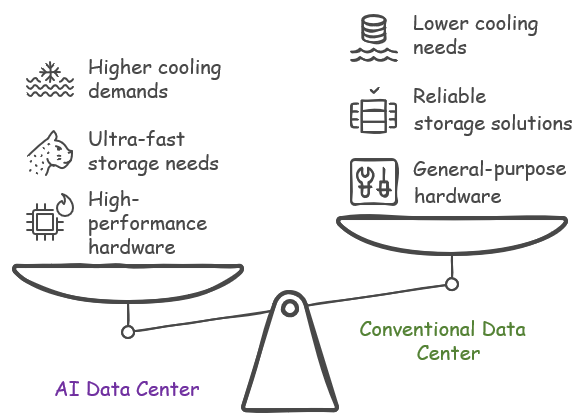

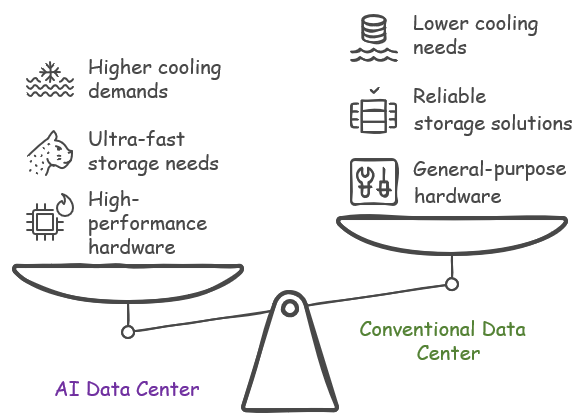
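The two power items in the list (UPS for short outages, backup generators for long ones) raise a common sizing question: can the UPS carry the load until the generators take over? The Python sketch below gives a rough, linear answer. The battery size, load, inverter efficiency, generator start time and safety factor are all hypothetical example values; real batteries deliver less energy at high discharge rates, so actual sizing relies on vendor runtime curves.

```python
# Rough, linear estimate of UPS ride-through time and whether it bridges
# the gap until backup generators pick up the load.
# Assumptions (hypothetical): constant load, a single inverter efficiency
# factor, and an example generator start time. Real battery capacity drops
# at high discharge rates, so this overestimates runtime for heavy loads.


def ups_runtime_minutes(battery_wh: float, load_w: float, inverter_efficiency: float = 0.9) -> float:
    """Minutes the UPS can carry `load_w` from `battery_wh` of usable stored energy."""
    if load_w <= 0:
        raise ValueError("load must be positive")
    if not 0 < inverter_efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    return battery_wh * inverter_efficiency / load_w * 60.0


def bridges_to_generator(runtime_min: float, generator_start_min: float, safety_factor: float = 2.0) -> bool:
    """True if UPS runtime covers the generator start-up time with a safety factor."""
    return runtime_min >= generator_start_min * safety_factor


if __name__ == "__main__":
    # Hypothetical: 50 kWh usable battery, 200 kW IT load, generators ready within 1 minute.
    runtime = ups_runtime_minutes(50_000.0, 200_000.0)
    print(f"UPS runtime = {runtime:.1f} min")
    print("Bridges to generator:", bridges_to_generator(runtime, 1.0))
```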

Main differences between AI data centers and conventional data centers

The main differences between an AI data center and a conventional data center lie in their design, hardware specifications, power and cooling requirements, and overall purpose. These differences stem from the unique demands of AI and ML workloads compared to traditional data processing tasks.

In short, AI data centers are specialized facilities designed to meet the high-performance computing demands of AI and ML workloads, featuring advanced hardware, high power and cooling requirements, and specialized software ecosystems. In contrast, conventional data centers cater to a broader range of IT needs, with a focus on reliability, capacity, and supporting general-purpose computing tasks.

Here’s a closer look:

- Hardware Specifications:

    - AI Data Center: Equipped with high-performance GPUs and CPUs to handle the complex computations and parallel processing required for machine learning and deep learning tasks. These centers may also use specialized AI accelerators, such as Google's TPUs (Tensor Processing Units).

    - Conventional Data Center: Primarily relies on CPUs for computing needs, focusing on handling a broad range of IT workloads such as database management, application hosting, and web services without the need for specialized AI processors.

- Storage and Networking:

    - AI Data Center: Requires ultra-fast storage solutions (like NVMe SSDs) and high-bandwidth networking to manage the vast data flows involved in training AI models. High data throughput and low latency are critical for performance.

    - Conventional Data Center: Uses a variety of storage solutions, including HDDs and SSDs, with networking tailored to the expected traffic and data management needs, focusing on capacity and reliability rather than extreme speed.

- Power and Cooling Requirements:

    - AI Data Center: Has significantly higher power and cooling requirements due to the intense workload of AI computations. These facilities often incorporate advanced cooling technologies, such as liquid cooling, to manage the heat generated by GPUs and other high-performance components.

    - Conventional Data Center: While still requiring effective power and cooling solutions, the demand is generally lower compared to AI data centers. Traditional cooling methods are often sufficient.

- Scalability and Flexibility:

    - AI Data Center: Designed to scale quickly and flexibly as the AI landscape evolves, adding resources on demand to meet the needs of AI model training and inference.

    - Conventional Data Center: While scalability is also important, the focus is more on maximizing uptime and reliability for a wide range of IT services with predictable scaling patterns.

- Software and Ecosystem:

    - AI Data Center: Uses a stack of AI-specific software and frameworks (such as TensorFlow and PyTorch) that depend on accelerator-specific drivers and libraries, such as NVIDIA CUDA for GPUs. Integration with cloud services and APIs for AI model training and deployment is also more pronounced.

    - Conventional Data Center: Employs a broader range of standard IT management and virtualization software, focusing on general-purpose computing tasks and traditional web services.

- Purpose and Workloads:

    - AI Data Center: Specifically optimized for AI and ML workloads, which involve processing and analyzing large datasets, training AI models, and performing complex simulations.

    - Conventional Data Center: Supports a wide variety of enterprise IT functions, including hosting websites, running business applications, and storing data.
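The power and cooling difference can be made concrete with a back-of-the-envelope calculation. Since nearly all the electricity a rack draws ends up as heat, its power draw sets its cooling load (1 W is about 3.412 BTU/h, and 1 ton of refrigeration is 12,000 BTU/h). The Python sketch below compares two racks; the server counts and per-server wattages are hypothetical, chosen only to illustrate the gap, not specifications of any real product.

```python
# Back-of-the-envelope heat load for one rack.
# Assumption: practically all electrical power drawn by IT equipment ends up as heat.
# Server counts and wattages in the example are hypothetical illustration values.

W_TO_BTU_PER_HR = 3.412        # 1 W is about 3.412 BTU/h
BTU_PER_HR_PER_TON = 12_000.0  # 1 ton of refrigeration = 12,000 BTU/h


def rack_heat_load(servers_per_rack: int, watts_per_server: float) -> dict[str, float]:
    """Return rack power (kW), heat output (BTU/h) and cooling need (refrigeration tons)."""
    watts = servers_per_rack * watts_per_server
    btu_per_hr = watts * W_TO_BTU_PER_HR
    return {
        "kW": watts / 1000.0,
        "BTU/h": btu_per_hr,
        "tons": btu_per_hr / BTU_PER_HR_PER_TON,
    }


if __name__ == "__main__":
    racks = {
        "conventional (20 x 500 W CPU servers)": rack_heat_load(20, 500.0),
        "AI (4 x 10 kW GPU servers)": rack_heat_load(4, 10_000.0),
    }
    for name, load in racks.items():
        print(f"{name}: {load['kW']:.1f} kW, {load['BTU/h']:,.0f} BTU/h, {load['tons']:.1f} tons")
```

With these assumed numbers the AI rack draws, and must shed, about four times the power of the conventional rack in the same footprint, which is one reason liquid cooling appears in AI facilities.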

