
K Cluster

In this note, I want to try an approach that is completely different from the one in my other notes: instead of writing the Python code myself, I asked chatGPT to create it for me.

NOTE : Refer to this note for my personal experience with chatGPT coding and the advantages & limitations of the tool. In general, I got a very positive impression of using chatGPT for coding.

This code was first created by chatGPT on Feb 03 2023 (i.e., using chatGPT 3.5) and then modified a little by me. The initial request that I put into chatGPT is as follows :

NOTE : It is not guaranteed that you will get the same code as I did. chatGPT produces different answers depending on the context, and it may give a different answer every time you ask, even when the question is exactly the same.

NOTE : In this code, the requirement in step 2) was also generated by chatGPT, based on my question : "give me the list of parameters for K clustering".

NOTE : If you don't have your own idea for the request, copy my request, paste it into chatGPT, and then add further requests based on the output of the previous one. I would suggest starting a new thread in chatGPT, entering my request there, and then continuing with your own requests.

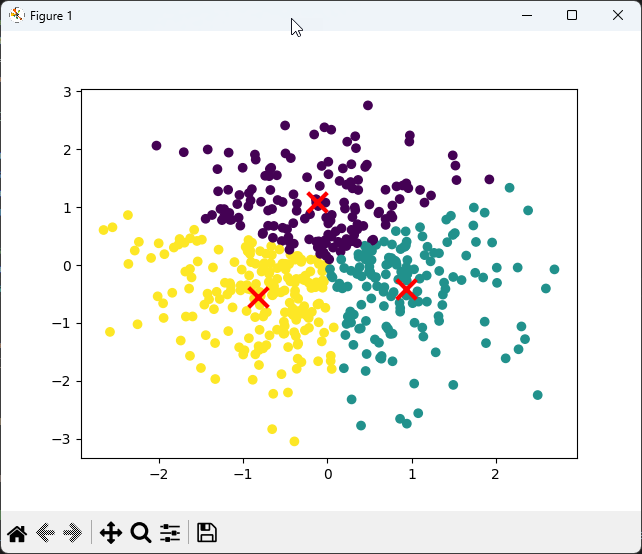

The result from this code is as follows :

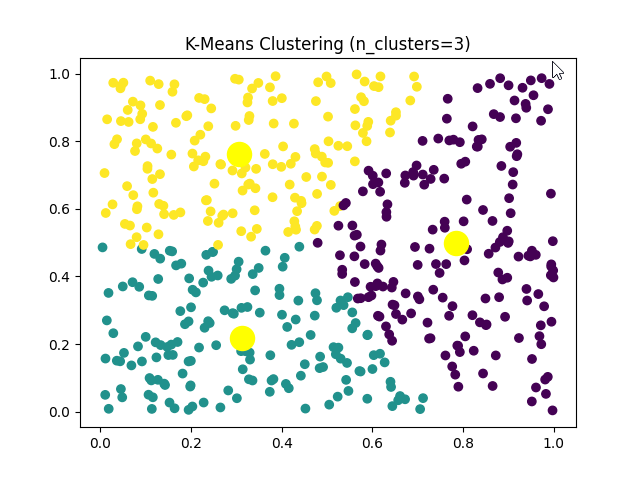
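The generated code is not reproduced as text in this note. For anyone who wants something to experiment with, the following is a minimal from-scratch sketch of K-means (Lloyd's algorithm) in plain Python, using only the standard library (Python 3.8+ for `math.dist`). It is not the code chatGPT produced. The function names `assign` and `kmeans`, their parameters (`k`, `max_iter`, `tol`, `seed`), and the two-blob test data are my own illustrative assumptions.

```python
import math
import random


def assign(points, centroids):
    """Return the index of the nearest centroid for every point."""
    return [
        min(range(len(centroids)), key=lambda j: math.dist(p, centroids[j]))
        for p in points
    ]


def kmeans(points, k, max_iter=100, tol=1e-6, seed=0):
    """Cluster points (tuples of floats) into k groups with Lloyd's algorithm.

    Returns (centroids, labels). Parameter names are illustrative only,
    not taken from the original chatGPT output.
    """
    rng = random.Random(seed)
    # Initialise by picking k distinct input points as starting centroids.
    centroids = rng.sample(points, k)
    for _ in range(max_iter):
        # Assignment step: attach each point to its nearest centroid.
        labels = assign(points, centroids)
        # Update step: move each centroid to the mean of its member points.
        new_centroids = []
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:
                new_centroids.append(tuple(sum(c) / len(members) for c in zip(*members)))
            else:
                new_centroids.append(centroids[j])  # keep an empty cluster in place
        # Stop once no centroid moved by more than tol.
        shift = max(math.dist(a, b) for a, b in zip(centroids, new_centroids))
        centroids = new_centroids
        if shift < tol:
            break
    # Recompute labels so they match the returned centroids.
    return centroids, assign(points, centroids)


# Usage (hypothetical test data): two well-separated 2-D blobs around (0, 0) and (5, 5).
rng = random.Random(42)
blob_a = [(rng.gauss(0, 0.5), rng.gauss(0, 0.5)) for _ in range(50)]
blob_b = [(rng.gauss(5, 0.5), rng.gauss(5, 0.5)) for _ in range(50)]
data = blob_a + blob_b
centroids, labels = kmeans(data, k=2)
print(centroids)
```

The two alternating steps, assigning points to their nearest centroid and then moving each centroid to the mean of its points, are the whole algorithm. A "package" version mainly hides these steps and adds smarter initialisation.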

As a next step, I asked chatGPT to simplify the code by using any package that can provide the same implementation and test routine more simply. The requested code and the result are as follows.

The result of the code is shown below.
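The note does not name the package chatGPT chose in text form. Assuming scikit-learn, a common choice for this task, a comparable package-based sketch could look like the following. It is not chatGPT's actual output. The `make_blobs` test data and all parameter values are illustrative assumptions. The keyword arguments do map onto the usual K-means parameters (number of clusters, initialisation, number of restarts, iteration limit, tolerance, random seed).

```python
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs

# Synthetic test data (assumed setup): 300 2-D points drawn around 3 centres.
X, y_true = make_blobs(n_samples=300, centers=3, cluster_std=0.6, random_state=0)

# The main K-means parameters exposed by scikit-learn's KMeans.
model = KMeans(
    n_clusters=3,       # number of clusters k
    init="k-means++",   # centroid initialisation strategy
    n_init=10,          # independent runs; the run with the lowest inertia is kept
    max_iter=300,       # maximum Lloyd iterations per run
    tol=1e-4,           # relative tolerance on centre movement to declare convergence
    random_state=0,     # reproducible initialisation
)
labels = model.fit_predict(X)  # fit the model and return a cluster index per point

print(model.cluster_centers_)  # one row per cluster centre
print(model.inertia_)          # sum of squared distances to the nearest centre
```

Compared with the from-scratch version, the package handles initialisation, restarts, and convergence checks internally. What is left is mostly choosing parameters and preparing data.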
