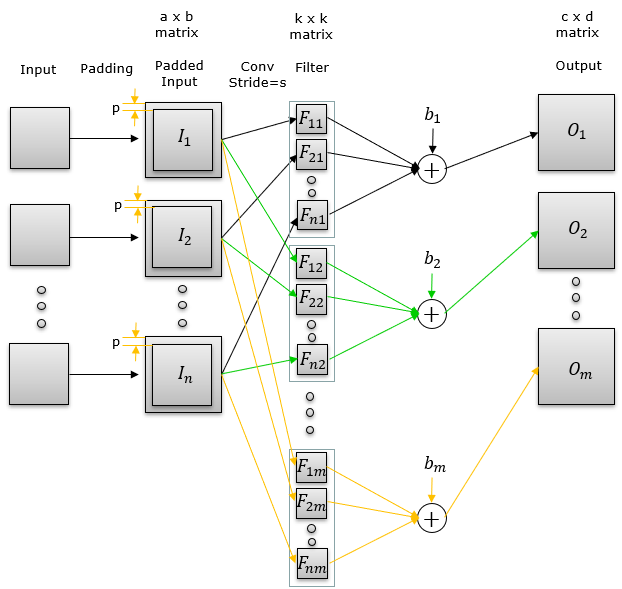

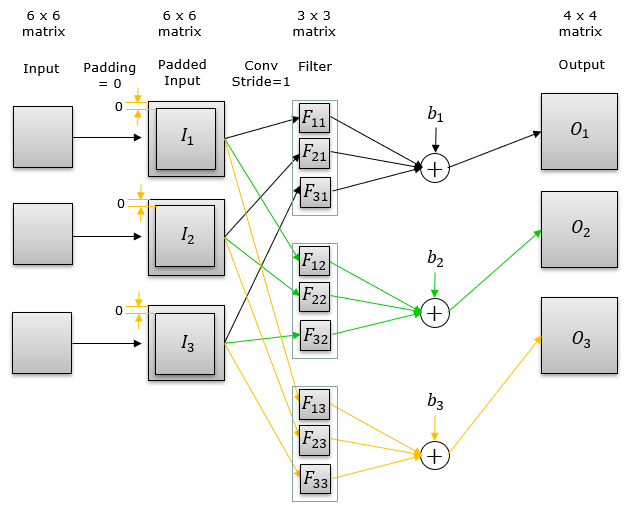

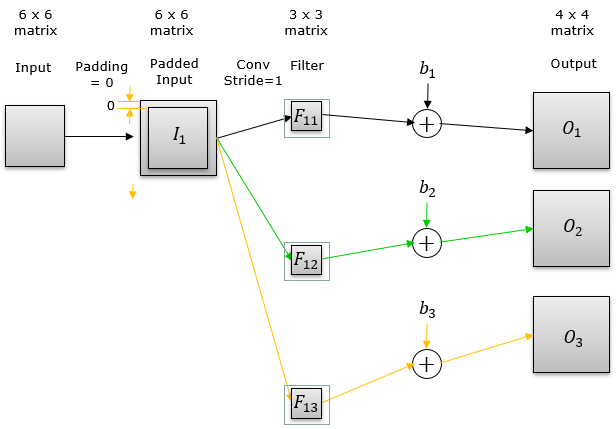

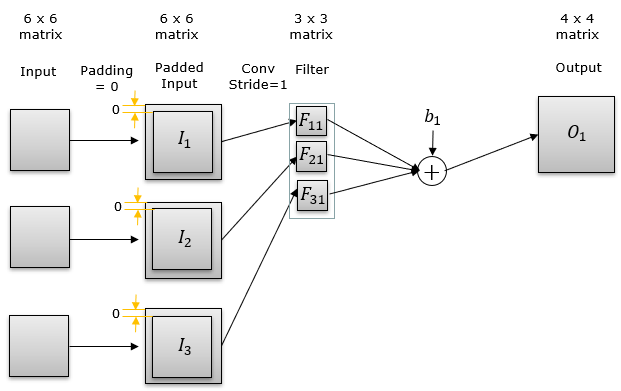

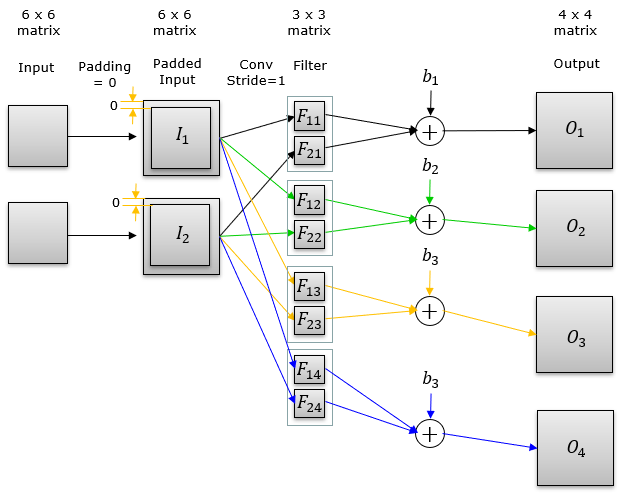

As the name implies, Conv2d is the function that performs convolution on 2D data (e.g., an image). If you are completely new to the concept of convolution and want to understand it from the very basics, I would suggest you start with 1D convolution in my note here. If you are already familiar with the basic concept of convolution, or want to jump directly into 2D convolution, which is more relevant to 2D image processing, take a look at the examples in the Conv 2D section of my visual note.

The Conv2d function can perform convolution on more complicated data structures (a set of 2D data, not only a single 2D array) and with additional options such as padding and stride. What I want you to focus on in this section is not the math of the convolution but the data structure and calculation flow performed by the Conv2d function.

The generic usage of the Conv2d() function is as follows.

torch.nn.Conv2d(in_channels = n, out_channels = m, kernel_size = k, stride = s, padding = p)

The operation performed by this function is illustrated below. In most cases, a and b are set to the same value, and c and d are usually set to the same value as well.

One important thing you might have noticed from the Conv2d() usage is that we set only the dimension of the filter (kernel); we specify neither the number of filters nor the values within them. As hinted by the diagram below, the number of filters is determined automatically by the number of input channels and the number of output channels: with the default settings there is one k x k filter for each pair of input and output channel, m x n in total. The initial values of each filter are assigned automatically by Conv2d() (random values by default).
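To make the relationship between the arguments and the data structure concrete, here is a minimal sketch. The values chosen for n, m, k, s, p and the helper name `conv_output_size` are illustrative assumptions, not part of the original note. It prints the shape of the weight tensor, which holds m x n kernels of size k x k, and compares the output size with the usual formula floor((H + 2p - k) / s) + 1 (dilation 1 assumed).

```python
import torch

def conv_output_size(size, kernel_size, stride=1, padding=0):
    """Spatial output size of a convolution along one axis (dilation 1)."""
    return (size + 2 * padding - kernel_size) // stride + 1

# Illustrative values only; any positive integers that fit the input would do.
n, m, k, s, p = 2, 4, 3, 1, 0
net = torch.nn.Conv2d(in_channels=n, out_channels=m, kernel_size=k, stride=s, padding=p)

# One k x k kernel per (output channel, input channel) pair, one bias per output channel.
weight_shape = tuple(net.weight.shape)
bias_shape = tuple(net.bias.shape)
print("weight shape =", weight_shape)
print("bias shape   =", bias_shape)

# Input layout is (batch, channels, height, width).
x = torch.ones(1, n, 6, 6)
out_shape = tuple(net(x).shape)
expected = conv_output_size(6, k, s, p)
print("output shape =", out_shape, "expected spatial size =", expected)
```

The weight tensor is indexed as (out_channels, in_channels, kernel height, kernel width), which is the layout seen in the parameter printouts of the examples.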

Now I will show you various examples of different data/calculation flows and the corresponding PyTorch source code. Going through these examples, I hope you will intuitively understand the usage of the Conv2d() function.

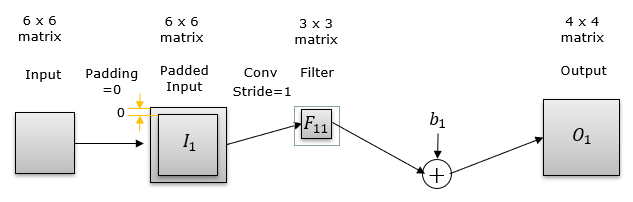

Input layer 1, output layer 1, kernel size 3x3, stride 1, padding 0

import torch

input1 = torch.ones(1,1,6,6)

print("input = ",input1)

==> input = tensor([[[[1., 1., 1., 1., 1., 1.], [1., 1., 1., 1., 1., 1.], [1., 1., 1., 1., 1., 1.], [1., 1., 1., 1., 1., 1.], [1., 1., 1., 1., 1., 1.], [1., 1., 1., 1., 1., 1.]]]])

net = torch.nn.Conv2d(in_channels = 1, out_channels = 1, kernel_size = 3)

print("net = ",net)

==> net = Conv2d(1, 1, kernel_size=(3, 3), stride=(1, 1))

print("Parameters = ",list(net.parameters()))

==> Parameters = [Parameter containing: tensor([[[[ 0.0881, -0.1189, -0.0778], [ 0.0953, 0.0934, 0.0858], [-0.2734, 0.1937, 0.0823]]]], requires_grad=True), Parameter containing: tensor([0.1680], requires_grad=True)]

print("Weight = ",net.weight)

print("bias = ",net.bias)

==> Weight = Parameter containing: tensor([[[[ 0.0881, -0.1189, -0.0778], [ 0.0953, 0.0934, 0.0858], [-0.2734, 0.1937, 0.0823]]]], requires_grad=True)

==> bias = Parameter containing: tensor([0.1680], requires_grad=True)

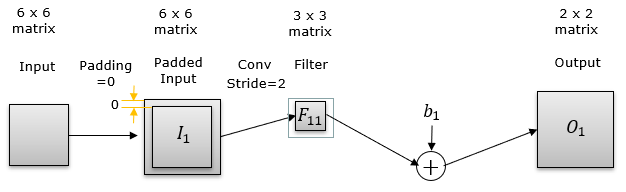
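Because every input element is 1, each output element should equal the sum of the nine kernel weights plus the bias. The sketch below checks this by hand, with the weight and bias values from the printout above copied in as constants (a freshly created Conv2d would draw different random values). `torch.nn.functional.conv2d` is used here only so that the kernel can be passed in explicitly.

```python
import torch
import torch.nn.functional as F

# Weight and bias copied from the printout above (rounded to 4 decimals).
weight = torch.tensor([[[[ 0.0881, -0.1189, -0.0778],
                         [ 0.0953,  0.0934,  0.0858],
                         [-0.2734,  0.1937,  0.0823]]]])
bias = torch.tensor([0.1680])

x = torch.ones(1, 1, 6, 6)
out = F.conv2d(x, weight, bias)  # stride 1 and padding 0 are the defaults

# With an all-ones input, every window contributes the same value.
by_hand = (weight.sum() + bias).item()
print("by hand  =", round(by_hand, 4))
print("conv out =", round(out[0, 0, 0, 0].item(), 4))
print("shape    =", tuple(out.shape))
```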

out = net(input1)

print("output = ",out)

==> output = tensor([[[[0.3365, 0.3365, 0.3365, 0.3365], [0.3365, 0.3365, 0.3365, 0.3365], [0.3365, 0.3365, 0.3365, 0.3365], [0.3365, 0.3365, 0.3365, 0.3365]]]], grad_fn=<ThnnConv2DBackward>)

Input layer 1, output layer 1, kernel size 3x3, stride 2, padding 0

import torch

input1 = torch.ones(1,1,6,6)

print("input = ",input1)

==> input = tensor([[[[1., 1., 1., 1., 1., 1.], [1., 1., 1., 1., 1., 1.], [1., 1., 1., 1., 1., 1.], [1., 1., 1., 1., 1., 1.], [1., 1., 1., 1., 1., 1.], [1., 1., 1., 1., 1., 1.]]]])

net = torch.nn.Conv2d(in_channels = 1, out_channels = 1, kernel_size = 3, stride = 2)

print("net = ",net)

==> net = Conv2d(1, 1, kernel_size=(3, 3), stride=(2, 2))

print("Parameters = ",list(net.parameters()))

==> Parameters = [Parameter containing: tensor([[[[ 0.1907, 0.2069, 0.1676], [ 0.0233, -0.2632, 0.1108], [-0.0301, 0.0857, 0.2350]]]], requires_grad=True), Parameter containing: tensor([-0.0093], requires_grad=True)]

print("Weight = ",net.weight)

print("bias = ",net.bias)

==> Weight = Parameter containing: tensor([[[[ 0.1907, 0.2069, 0.1676], [ 0.0233, -0.2632, 0.1108], [-0.0301, 0.0857, 0.2350]]]], requires_grad=True)

==> bias = Parameter containing: tensor([-0.0093], requires_grad=True)

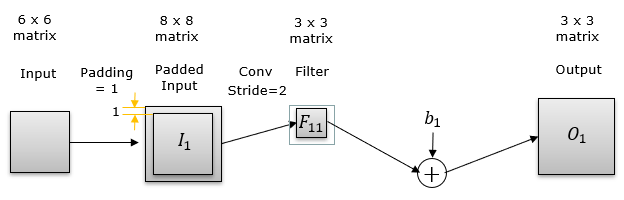
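With stride 2 the 3x3 window moves two positions at a time, so along each axis of the 6x6 input it fits at only two positions. The small sketch below, in plain Python, lists the top-left offsets the window visits for a given stride; the helper name `window_starts` is hypothetical and only serves this illustration.

```python
def window_starts(size, kernel_size, stride):
    """Offsets at which a kernel fits along one axis when no padding is used."""
    return list(range(0, size - kernel_size + 1, stride))

stride1 = window_starts(6, 3, 1)
stride2 = window_starts(6, 3, 2)
print("stride 1:", stride1)  # 4 positions per axis, so a 4x4 output
print("stride 2:", stride2)  # 2 positions per axis, so a 2x2 output
```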

out = net(input1)

print("output = ",out)

==> output = tensor([[[[0.7175, 0.7175], [0.7175, 0.7175]]]], grad_fn=<ThnnConv2DBackward>)

Input layer 1, output layer 1, kernel size 3x3, stride 2, padding 1

import torch

input1 = torch.ones(1,1,6,6)

print("input = ",input1)

==> input = tensor([[[[1., 1., 1., 1., 1., 1.], [1., 1., 1., 1., 1., 1.], [1., 1., 1., 1., 1., 1.], [1., 1., 1., 1., 1., 1.], [1., 1., 1., 1., 1., 1.], [1., 1., 1., 1., 1., 1.]]]])

net = torch.nn.Conv2d(in_channels = 1, out_channels = 1, kernel_size = 3, stride = 2, padding = 1)

print("net = ",net)

==> net = Conv2d(1, 1, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1))

print("Parameters = ",list(net.parameters()))

==> Parameters = [Parameter containing: tensor([[[[-0.2392, -0.0492, -0.0347], [-0.3049, -0.1630, 0.1242], [-0.2988, -0.3229, 0.1064]]]], requires_grad=True), Parameter containing: tensor([-0.0967], requires_grad=True)]

print("Weight = ",net.weight)

print("bias = ",net.bias)

==> Weight = Parameter containing: tensor([[[[-0.2392, -0.0492, -0.0347], [-0.3049, -0.1630, 0.1242], [-0.2988, -0.3229, 0.1064]]]], requires_grad=True)

==> bias = Parameter containing: tensor([-0.0967], requires_grad=True)
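With padding = 1 a border of zeros is added around the input, so the windows at the top and left edges cover fewer ones and the output is no longer uniform. The sketch below uses the weight and bias values printed above as constants, pads the input explicitly with `torch.nn.functional.pad`, and recomputes the corner, edge, and interior values by hand. It is an illustration of the data flow under these assumed constants, not the internal implementation of Conv2d.

```python
import torch
import torch.nn.functional as F

# Weight and bias copied from the printout above (rounded to 4 decimals).
weight = torch.tensor([[[[-0.2392, -0.0492, -0.0347],
                         [-0.3049, -0.1630,  0.1242],
                         [-0.2988, -0.3229,  0.1064]]]])
bias = torch.tensor([-0.0967])

x = torch.ones(1, 1, 6, 6)
padded = F.pad(x, (1, 1, 1, 1))            # zero border: 6x6 becomes 8x8
out = F.conv2d(padded, weight, bias, stride=2)
same = F.conv2d(x, weight, bias, stride=2, padding=1)

k = weight[0, 0]
corner = (k[1:, 1:].sum() + bias).item()   # top row and left column of the window hit zeros
top_edge = (k[1:, :].sum() + bias).item()  # only the top row of the window hits zeros
interior = (k.sum() + bias).item()         # window lies fully inside the image

print("padded shape =", tuple(padded.shape))
print("corner, top edge, interior =", corner, top_edge, interior)
print(out[0, 0])
```

Only the top and left borders are affected in this configuration: with stride 2 the last window along each axis starts at padded offset 4 and ends at offset 6, so it never reaches the zero border on the bottom or right.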

out = net(input1)

print("output = ",out)

==> output = tensor([[[[-0.3520, -0.9556, -0.9556], [-0.4359, -1.2787, -1.2787], [-0.4359, -1.2787, -1.2787]]]], grad_fn=<ThnnConv2DBackward>)

Input layer 3, output layer 3, kernel size 3x3, stride 1, padding 0

import torch

input1 = torch.ones(1,3,6,6)

print("input = ",input1)

==> input = tensor([[[[1., 1., 1., 1., 1., 1.], [1., 1., 1., 1., 1., 1.], [1., 1., 1., 1., 1., 1.], [1., 1., 1., 1., 1., 1.], [1., 1., 1., 1., 1., 1.], [1., 1., 1., 1., 1., 1.]],

[[1., 1., 1., 1., 1., 1.], [1., 1., 1., 1., 1., 1.], [1., 1., 1., 1., 1., 1.], [1., 1., 1., 1., 1., 1.], [1., 1., 1., 1., 1., 1.], [1., 1., 1., 1., 1., 1.]],

[[1., 1., 1., 1., 1., 1.], [1., 1., 1., 1., 1., 1.], [1., 1., 1., 1., 1., 1.], [1., 1., 1., 1., 1., 1.], [1., 1., 1., 1., 1., 1.], [1., 1., 1., 1., 1., 1.]]]])

net = torch.nn.Conv2d(in_channels = 3, out_channels = 3, kernel_size = 3, stride = 1)

print("net = ",net)

==> net = Conv2d(3, 3, kernel_size=(3, 3), stride=(1, 1))

print("Parameters = ",list(net.parameters()))

==> Parameters = [Parameter containing: tensor([[[[-0.0803, -0.1713, 0.1441], [-0.1072, -0.1571, -0.0254], [-0.1230, -0.0584, 0.0903]],

[[-0.1486, 0.1374, -0.1192], [ 0.0228, 0.0543, -0.1124], [-0.1045, 0.1804, -0.0721]],

[[-0.0944, 0.1255, 0.0371], [-0.0887, -0.1317, 0.1474], [ 0.0237, 0.1048, 0.0840]]],

[[[-0.1206, -0.1360, 0.0185], [-0.0684, 0.1522, 0.1608], [ 0.0973, 0.0807, 0.1193]],

[[ 0.1036, -0.0177, 0.1745], [-0.1605, 0.0437, -0.1423], [-0.0322, 0.0826, -0.1443]],

[[ 0.1145, -0.1378, -0.1148], [-0.0828, -0.1226, -0.0900], [ 0.1138, 0.1260, 0.0788]]],

[[[-0.1372, -0.0510, 0.1307], [ 0.1600, 0.0902, 0.0489], [-0.0889, 0.1738, -0.0099]],

[[-0.0494, -0.0856, 0.1392], [-0.1584, 0.0696, -0.1846], [-0.1266, -0.1801, 0.0202]],

[[ 0.0151, 0.1716, -0.1645], [-0.0296, 0.1748, -0.0985], [-0.1260, -0.1463, 0.0970]]]], requires_grad=True), Parameter containing: tensor([0.0566, 0.0760, 0.0430], requires_grad=True)]

print("Weight = ",net.weight)

print("bias = ",net.bias)

==> Weight = Parameter containing: tensor([[[[-0.0803, -0.1713, 0.1441], [-0.1072, -0.1571, -0.0254], [-0.1230, -0.0584, 0.0903]],

[[-0.1486, 0.1374, -0.1192], [ 0.0228, 0.0543, -0.1124], [-0.1045, 0.1804, -0.0721]],

[[-0.0944, 0.1255, 0.0371], [-0.0887, -0.1317, 0.1474], [ 0.0237, 0.1048, 0.0840]]],

[[[-0.1206, -0.1360, 0.0185], [-0.0684, 0.1522, 0.1608], [ 0.0973, 0.0807, 0.1193]],

[[ 0.1036, -0.0177, 0.1745], [-0.1605, 0.0437, -0.1423], [-0.0322, 0.0826, -0.1443]],

[[ 0.1145, -0.1378, -0.1148], [-0.0828, -0.1226, -0.0900], [ 0.1138, 0.1260, 0.0788]]],

[[[-0.1372, -0.0510, 0.1307], [ 0.1600, 0.0902, 0.0489], [-0.0889, 0.1738, -0.0099]],

[[-0.0494, -0.0856, 0.1392], [-0.1584, 0.0696, -0.1846], [-0.1266, -0.1801, 0.0202]],

[[ 0.0151, 0.1716, -0.1645], [-0.0296, 0.1748, -0.0985], [-0.1260, -0.1463, 0.0970]]]], requires_grad=True)

==> bias = Parameter containing: tensor([0.0566, 0.0760, 0.0430], requires_grad=True)
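With several input channels, each output channel has its own stack of kernels, one per input channel; the per-channel results are summed and the bias is added. The following is a minimal sketch that recomputes the layer output with an explicit loop over channels and compares it with the result of the layer itself. The seed and the random input are arbitrary choices for illustration.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)  # arbitrary seed, only to make the run repeatable
net = torch.nn.Conv2d(in_channels=3, out_channels=3, kernel_size=3, stride=1)
x = torch.rand(1, 3, 6, 6)

with torch.no_grad():
    reference = net(x)
    manual = torch.zeros_like(reference)
    for j in range(net.out_channels):        # one output channel at a time
        for i in range(net.in_channels):     # add the contribution of each input channel
            kernel = net.weight[j:j + 1, i:i + 1]   # shape (1, 1, 3, 3)
            manual[:, j:j + 1] += F.conv2d(x[:, i:i + 1], kernel)
        manual[:, j] += net.bias[j]          # one bias value per output channel

max_diff = (manual - reference).abs().max().item()
print("max difference =", max_diff)
```

The loop is far slower than the built-in layer; it is meant only to show which kernel touches which channel.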

out = net(input1)

print("output = ",out)

==> output = tensor([[[[-0.3861, -0.3861, -0.3861, -0.3861], [-0.3861, -0.3861, -0.3861, -0.3861], [-0.3861, -0.3861, -0.3861, -0.3861], [-0.3861, -0.3861, -0.3861, -0.3861]],

[[ 0.1724, 0.1724, 0.1724, 0.1724], [ 0.1724, 0.1724, 0.1724, 0.1724], [ 0.1724, 0.1724, 0.1724, 0.1724], [ 0.1724, 0.1724, 0.1724, 0.1724]],

[[-0.3028, -0.3028, -0.3028, -0.3028], [-0.3028, -0.3028, -0.3028, -0.3028], [-0.3028, -0.3028, -0.3028, -0.3028], [-0.3028, -0.3028, -0.3028, -0.3028]]]], grad_fn=<ThnnConv2DBackward>)

Input layer 1, output layer 3, kernel size 3x3, stride 1, padding 0

import torch

input1 = torch.ones(1,1,6,6)

print("input = ",input1)

==> input = tensor([[[[1., 1., 1., 1., 1., 1.], [1., 1., 1., 1., 1., 1.], [1., 1., 1., 1., 1., 1.], [1., 1., 1., 1., 1., 1.], [1., 1., 1., 1., 1., 1.], [1., 1., 1., 1., 1., 1.]]]])

net = torch.nn.Conv2d(in_channels = 1, out_channels = 3, kernel_size = 3, stride = 1)

print("net = ",net)

==> net = Conv2d(1, 3, kernel_size=(3, 3), stride=(1, 1))

print("Parameters = ",list(net.parameters()))

==> Parameters = [Parameter containing: tensor([[[[-0.2515, -0.1372, 0.3204], [-0.0997, 0.3118, 0.2565], [ 0.0022, 0.0423, -0.0538]]],

[[[-0.2974, 0.3017, -0.0427], [-0.0471, 0.0506, 0.2380], [-0.0384, -0.1994, -0.0341]]],

[[[-0.1916, -0.1838, 0.1763], [-0.3117, 0.1773, -0.0955], [-0.1647, 0.1660, 0.1878]]]], requires_grad=True), Parameter containing: tensor([-0.0194, 0.2818, -0.1526], requires_grad=True)]

print("Weight = ",net.weight)

print("bias = ",net.bias)

==> Weight = Parameter containing: tensor([[[[-0.2515, -0.1372, 0.3204], [-0.0997, 0.3118, 0.2565], [ 0.0022, 0.0423, -0.0538]]],

[[[-0.2974, 0.3017, -0.0427], [-0.0471, 0.0506, 0.2380], [-0.0384, -0.1994, -0.0341]]],

[[[-0.1916, -0.1838, 0.1763], [-0.3117, 0.1773, -0.0955], [-0.1647, 0.1660, 0.1878]]]], requires_grad=True)

==> bias = Parameter containing: tensor([-0.0194, 0.2818, -0.1526], requires_grad=True)
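The size of the printed parameter list follows directly from the channel counts: the weight tensor has out_channels x in_channels x k x k entries and the bias has out_channels entries. A short sketch, assuming the same layer configuration as in this example, counts the parameters and compares the count with that formula.

```python
import torch

net = torch.nn.Conv2d(in_channels=1, out_channels=3, kernel_size=3, stride=1)

# Count every trainable value held by the layer (weights plus biases).
counted = sum(p.numel() for p in net.parameters())

# kernel_size is stored as a (height, width) tuple even when given as a single int.
k_h, k_w = net.kernel_size
formula = net.out_channels * net.in_channels * k_h * k_w + net.out_channels

print("weight shape =", tuple(net.weight.shape))
print("counted =", counted, "formula =", formula)
```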

out = net(input1)

print("output = ",out)

==> output = tensor([[[[ 0.3715, 0.3715, 0.3715, 0.3715], [ 0.3715, 0.3715, 0.3715, 0.3715], [ 0.3715, 0.3715, 0.3715, 0.3715], [ 0.3715, 0.3715, 0.3715, 0.3715]],

[[ 0.2131, 0.2131, 0.2131, 0.2131], [ 0.2131, 0.2131, 0.2131, 0.2131], [ 0.2131, 0.2131, 0.2131, 0.2131], [ 0.2131, 0.2131, 0.2131, 0.2131]],

[[-0.3923, -0.3923, -0.3923, -0.3923], [-0.3923, -0.3923, -0.3923, -0.3923], [-0.3923, -0.3923, -0.3923, -0.3923], [-0.3923, -0.3923, -0.3923, -0.3923]]]], grad_fn=<ThnnConv2DBackward>)

Input layer 3, output layer 1, kernel size 3x3, stride 1, padding 0

import torch

input1 = torch.ones(1,3,6,6)

print("input = ",input1)

==> input = tensor([[[[1., 1., 1., 1., 1., 1.], [1., 1., 1., 1., 1., 1.], [1., 1., 1., 1., 1., 1.], [1., 1., 1., 1., 1., 1.], [1., 1., 1., 1., 1., 1.], [1., 1., 1., 1., 1., 1.]],

[[1., 1., 1., 1., 1., 1.], [1., 1., 1., 1., 1., 1.], [1., 1., 1., 1., 1., 1.], [1., 1., 1., 1., 1., 1.], [1., 1., 1., 1., 1., 1.], [1., 1., 1., 1., 1., 1.]],

[[1., 1., 1., 1., 1., 1.], [1., 1., 1., 1., 1., 1.], [1., 1., 1., 1., 1., 1.], [1., 1., 1., 1., 1., 1.], [1., 1., 1., 1., 1., 1.], [1., 1., 1., 1., 1., 1.]]]])

net = torch.nn.Conv2d(in_channels = 3, out_channels = 1, kernel_size = 3, stride = 1)

print("net = ",net)

==> net = Conv2d(3, 1, kernel_size=(3, 3), stride=(1, 1))

print("Parameters = ",list(net.parameters()))

==> Parameters = [Parameter containing: tensor([[[[-0.0912, -0.1064, -0.1396], [ 0.0072, -0.1196, 0.1672], [-0.0177, -0.0890, 0.0466]],

[[ 0.0092, 0.1629, -0.1772], [-0.1293, -0.1035, 0.0919], [-0.1625, -0.0141, 0.0412]],

[[ 0.0712, -0.1737, 0.0552], [ 0.0434, 0.1417, -0.1580], [ 0.1569, 0.1290, 0.1705]]]], requires_grad=True), Parameter containing: tensor([-0.0269], requires_grad=True)]

print("Weight = ",net.weight)

print("bias = ",net.bias)

==> Weight = Parameter containing: tensor([[[[-0.0912, -0.1064, -0.1396], [ 0.0072, -0.1196, 0.1672], [-0.0177, -0.0890, 0.0466]],

[[ 0.0092, 0.1629, -0.1772], [-0.1293, -0.1035, 0.0919], [-0.1625, -0.0141, 0.0412]],

[[ 0.0712, -0.1737, 0.0552], [ 0.0434, 0.1417, -0.1580], [ 0.1569, 0.1290, 0.1705]]]], requires_grad=True)

==> bias = Parameter containing: tensor([-0.0269], requires_grad=True)
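The random initial values make the outputs hard to predict at a glance. For experiments it can help to overwrite them with known values. The sketch below is an illustration, not part of the original example: it fills every weight with 1 and the bias with 0, so that with an all-ones input each output element is simply the number of input values under the window, 3 channels x 3 x 3 = 27.

```python
import torch

net = torch.nn.Conv2d(in_channels=3, out_channels=1, kernel_size=3, stride=1)

# Overwrite the random initial values in place; no_grad keeps autograd out of it.
with torch.no_grad():
    net.weight.fill_(1.0)
    net.bias.fill_(0.0)

x = torch.ones(1, 3, 6, 6)
out = net(x)
print("output shape =", tuple(out.shape))
print("first value  =", out[0, 0, 0, 0].item())
```

Seeing 27 rather than 9 confirms that the three per-channel results are summed into the single output channel.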

out = net(input1)

print("output = ",out)

==> output = tensor([[[[-0.2145, -0.2145, -0.2145, -0.2145], [-0.2145, -0.2145, -0.2145, -0.2145], [-0.2145, -0.2145, -0.2145, -0.2145], [-0.2145, -0.2145, -0.2145, -0.2145]]]], grad_fn=<ThnnConv2DBackward>)

Input layer 2, output layer 4, kernel size 3x3, stride 1, padding 0

import torch

input1 = torch.ones(1,2,6,6)

print("input = ",input1)

==> input = tensor([[[[1., 1., 1., 1., 1., 1.], [1., 1., 1., 1., 1., 1.], [1., 1., 1., 1., 1., 1.], [1., 1., 1., 1., 1., 1.], [1., 1., 1., 1., 1., 1.], [1., 1., 1., 1., 1., 1.]],

[[1., 1., 1., 1., 1., 1.], [1., 1., 1., 1., 1., 1.], [1., 1., 1., 1., 1., 1.], [1., 1., 1., 1., 1., 1.], [1., 1., 1., 1., 1., 1.], [1., 1., 1., 1., 1., 1.]]]])

net = torch.nn.Conv2d(in_channels = 2, out_channels = 4, kernel_size = 3, stride = 1)

print("net = ",net)

==> net = Conv2d(2, 4, kernel_size=(3, 3), stride=(1, 1))

print("Parameters = ",list(net.parameters()))

==> Parameters = [Parameter containing: tensor([[[[ 0.2179, -0.1332, 0.0899], [-0.0964, -0.1653, 0.1551], [-0.1422, -0.2231, 0.1075]],

[[-0.1066, -0.1183, 0.0421], [ 0.1305, 0.2184, -0.1633], [-0.1271, 0.2284, 0.2235]]],

[[[ 0.1970, -0.0710, -0.0590], [-0.1749, 0.0487, 0.1591], [-0.1202, 0.0690, 0.1691]],

[[ 0.0198, -0.0896, 0.2124], [-0.0867, -0.0135, -0.1714], [-0.0533, 0.1503, -0.2194]]],

[[[-0.1208, -0.1256, -0.0556], [ 0.0442, 0.2287, -0.2230], [-0.0903, 0.1225, -0.1689]],

[[-0.1276, -0.2175, 0.1301], [-0.0630, -0.0887, 0.0780], [ 0.0101, 0.1145, 0.0791]]],

[[[ 0.0135, 0.0196, 0.1861], [ 0.1848, 0.2056, -0.1715], [ 0.1584, -0.2033, 0.0102]],

[[ 0.2010, 0.1128, -0.0148], [ 0.2009, 0.2132, 0.0760], [ 0.1043, 0.1950, 0.1885]]]], requires_grad=True), Parameter containing: tensor([-0.2167, -0.0877, 0.1046, 0.1399], requires_grad=True)]

print("Weight = ",net.weight)

print("bias = ",net.bias)

==> Weight = Parameter containing: tensor([[[[ 0.2179, -0.1332, 0.0899], [-0.0964, -0.1653, 0.1551], [-0.1422, -0.2231, 0.1075]],

[[-0.1066, -0.1183, 0.0421], [ 0.1305, 0.2184, -0.1633], [-0.1271, 0.2284, 0.2235]]],

[[[ 0.1970, -0.0710, -0.0590], [-0.1749, 0.0487, 0.1591], [-0.1202, 0.0690, 0.1691]],

[[ 0.0198, -0.0896, 0.2124], [-0.0867, -0.0135, -0.1714], [-0.0533, 0.1503, -0.2194]]],

[[[-0.1208, -0.1256, -0.0556], [ 0.0442, 0.2287, -0.2230], [-0.0903, 0.1225, -0.1689]],

[[-0.1276, -0.2175, 0.1301], [-0.0630, -0.0887, 0.0780], [ 0.0101, 0.1145, 0.0791]]],

[[[ 0.0135, 0.0196, 0.1861], [ 0.1848, 0.2056, -0.1715], [ 0.1584, -0.2033, 0.0102]],

[[ 0.2010, 0.1128, -0.0148], [ 0.2009, 0.2132, 0.0760], [ 0.1043, 0.1950, 0.1885]]]], requires_grad=True)

==> bias = Parameter containing: tensor([-0.2167, -0.0877, 0.1046, 0.1399], requires_grad=True)

out = net(input1)

print("output = ",out)

==> output = tensor([[[[-0.0788, -0.0788, -0.0788, -0.0788], [-0.0788, -0.0788, -0.0788, -0.0788], [-0.0788, -0.0788, -0.0788, -0.0788], [-0.0788, -0.0788, -0.0788, -0.0788]],

[[-0.1214, -0.1214, -0.1214, -0.1214], [-0.1214, -0.1214, -0.1214, -0.1214], [-0.1214, -0.1214, -0.1214, -0.1214], [-0.1214, -0.1214, -0.1214, -0.1214]],

[[-0.3692, -0.3692, -0.3692, -0.3692], [-0.3692, -0.3692, -0.3692, -0.3692], [-0.3692, -0.3692, -0.3692, -0.3692], [-0.3692, -0.3692, -0.3692, -0.3692]],

[[ 1.8202, 1.8202, 1.8202, 1.8202], [ 1.8202, 1.8202, 1.8202, 1.8202], [ 1.8202, 1.8202, 1.8202, 1.8202], [ 1.8202, 1.8202, 1.8202, 1.8202]]]], grad_fn=<ThnnConv2DBackward>)

Input layer 3, output layer 1, kernel size 3x3, stride 1, padding 0 with input image

import torch

import torchvision.transforms.functional as TF

import PIL

from PIL import Image

from matplotlib import pyplot

from numpy import asarray

img = Image.open('temp/digit/0/digit0.png')

input = TF.to_tensor(img)

input = input.unsqueeze_(0)

print("input.shape = ",input.shape)

==> input.shape = torch.Size([1, 3, 10, 10])
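`TF.to_tensor` returns the image in (channels, height, width) layout, and `unsqueeze_(0)` adds the leading batch dimension so that the input has the same 4D layout as in the earlier examples. Since the image file used here is not available to the reader, the sketch below substitutes a random tensor of the same shape (an assumption made purely for illustration) to show the shapes before and after the layer.

```python
import torch

# Stand-in for TF.to_tensor(img): a 3-channel 10x10 image in (C, H, W) layout.
image = torch.rand(3, 10, 10)
batch = image.unsqueeze(0)          # add the batch dimension: (1, 3, 10, 10)

net = torch.nn.Conv2d(in_channels=3, out_channels=1, kernel_size=3)
out = net(batch)

print("image shape  =", tuple(image.shape))
print("batch shape  =", tuple(batch.shape))
print("output shape =", tuple(out.shape))   # 10 - 3 + 1 = 8 along each axis
```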

net = torch.nn.Conv2d(in_channels = 3, out_channels = 1, kernel_size = 3)

print("net = ",net)

==> net = Conv2d(3, 1, kernel_size=(3, 3), stride=(1, 1))

print("Weight = ",net.weight)

print("bias = ",net.bias)

==> Weight = Parameter containing: tensor([[[[-0.0482, 0.1148, -0.1228], [-0.1687, 0.0758, 0.0497], [ 0.0313, -0.1646, -0.1486]],

[[ 0.0562, 0.1516, 0.1300], [ 0.0879, -0.0339, -0.1876], [-0.0335, 0.1665, 0.0831]],

[[-0.0947, -0.1731, -0.0320], [ 0.0810, 0.0433, -0.1137], [-0.0629, -0.1213, -0.0357]]]], requires_grad=True)

==> bias = Parameter containing: tensor([0.1899], requires_grad=True)

out = net(input)

print("output = ",out)

==> output = tensor([[[[-0.2825, 0.0074, 0.0993, -0.1404, -0.2211, -0.2096, -0.0612, -0.2893], [-0.1458, -0.0563, -0.2432, -0.2315, 0.0343, -0.2591, -0.2193, -0.2048], [-0.1072, -0.0550, -0.3664, -0.1790, -0.1037, -0.0855, -0.3280, -0.1874], [-0.0702, -0.1030, -0.3483, -0.2005, -0.1603, -0.0146, -0.3481, -0.1741], [-0.1308, -0.0748, -0.2734, -0.1411, -0.0867, -0.0083, -0.3704, -0.1826], [-0.1727, -0.0352, -0.3164, 0.0981, 0.2282, -0.0866, -0.4669, -0.1779], [-0.2981, 0.0287, -0.1716, -0.1647, -0.2899, -0.2744, -0.2395, -0.2673], [-0.2801, -0.2910, -0.2061, -0.2739, -0.2440, -0.3237, -0.2540, -0.2801]]]], grad_fn=<ThnnConv2DBackward>)

Reference :

[1] Convolutional Neural Networks (CNNs / ConvNets)

[2] CONVOLUTIONAL NEURAL NETWORKS IN PYTORCH
