As the name implies, URLLC is meant to guarantee traffic with low latency and very high reliability at the same time. It has to satisfy two of the most challenging requirements (latency and reliability) simultaneously.

How Low and How Reliable Should It Be?

According to RP-191584 and TR 38.913 (clause 7.9), this requirement is specified as follows. (I think this is for a single hop, e.g. between UE and gNB, probably at the MAC/RLC layer.)

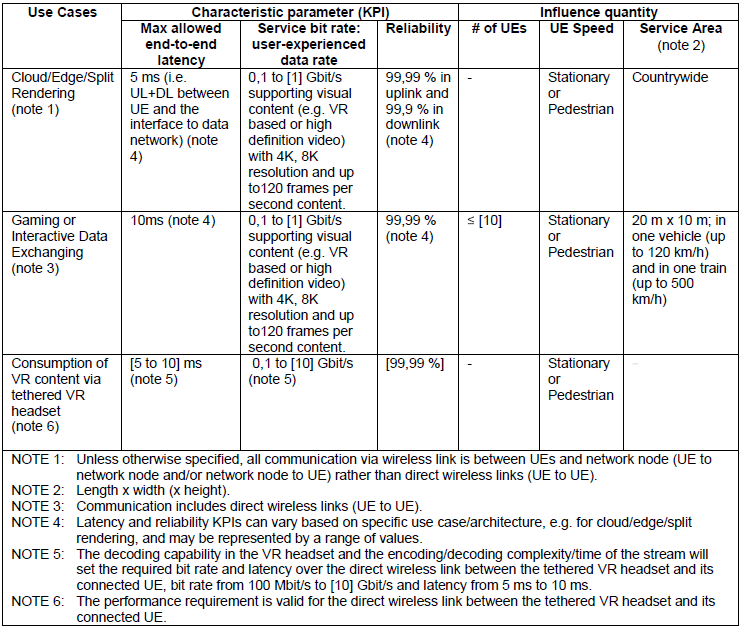

In reality, I think the more important performance indicator is the end-to-end latency for each application and use case. The following tables summarize the latency requirements for each application/use case, which I collected from the various references listed in the reference section.

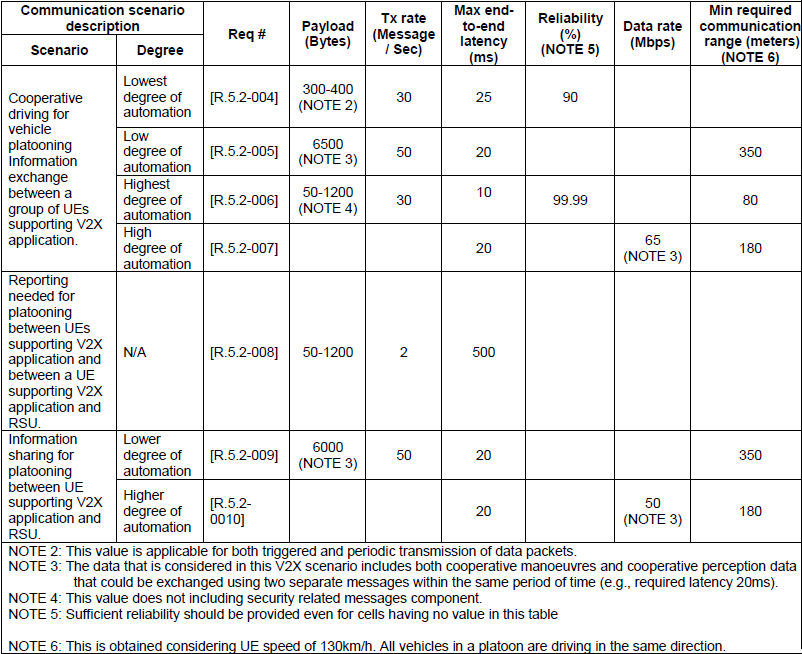

< TS 22.186 - Table 5.2-1 Performance requirements for Vehicles Platooning >

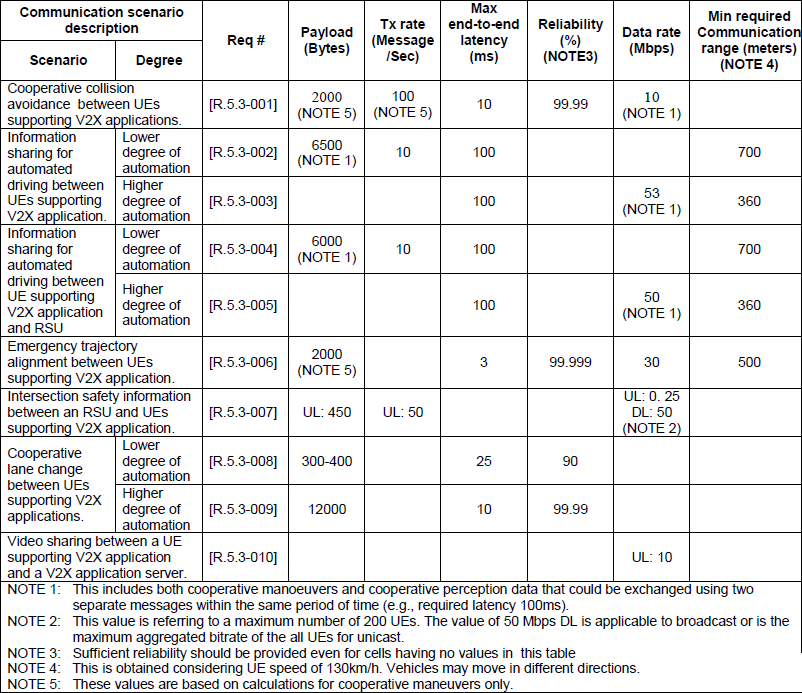

< TS 22.186 - Table 5.3-1 Performance requirements for advanced driving >

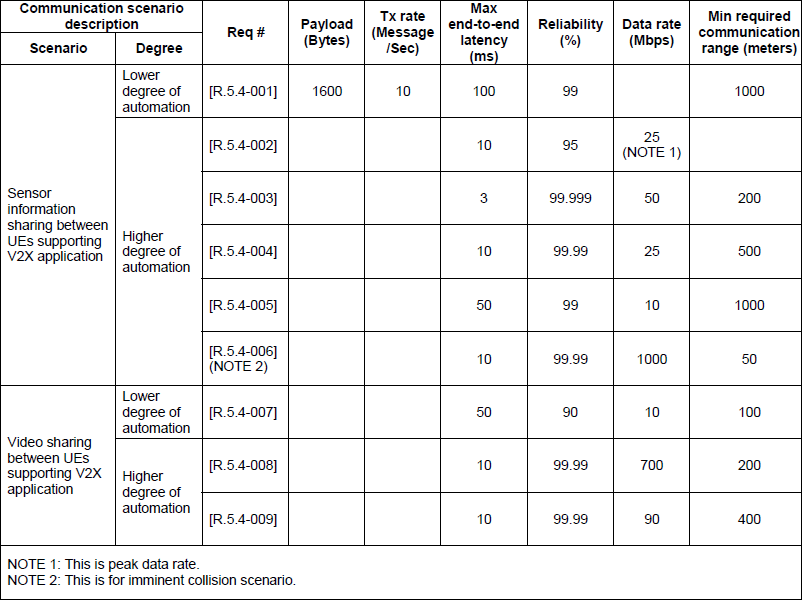

< TS 22.186 - Table 5.4-1 Performance requirements for extended sensors >

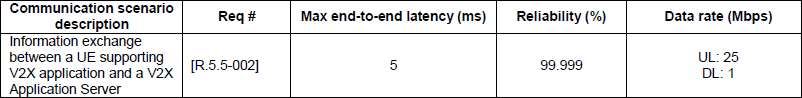

< TS 22.186 - Table 5.5-1 Performance requirements for remote driving >

< TS 22.261 v17.11 - Table 7.6.1-1 KPI Table for additional high data rate and low latency service >

What Is It For?

Why do we need this kind of challenging feature? It seems that they had use cases like the following in mind when they investigated this. The following are the use cases specified in RP-191584.

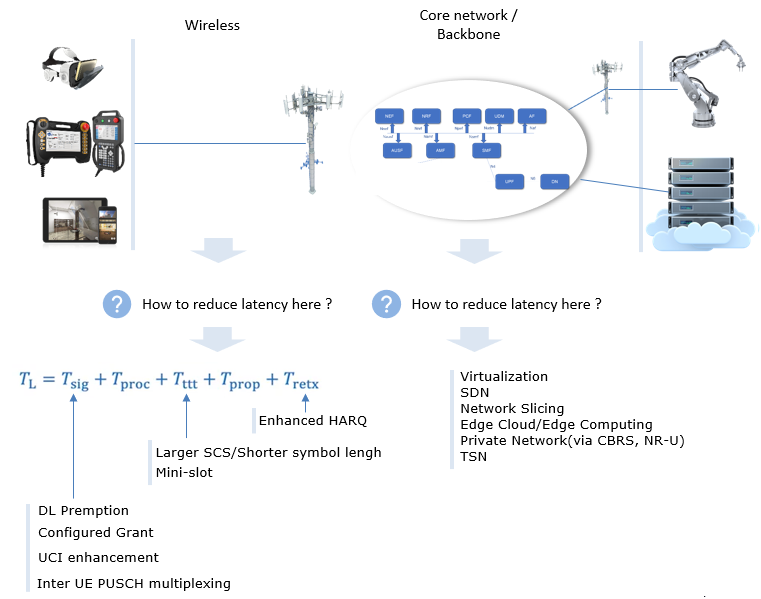

How to Reduce Latency?

To reduce the end-to-end latency, we need to reduce the latency on both the RAN side and the core network side. Let's briefly think about how we can reduce the latency on each side. Some common technologies for reducing latency on the RAN and core network sides are summarized in the illustration below. RAN side latency reduction is relatively well described in the 3GPP specifications (mostly in Release 16), but the core network side technology seems to be left more to implementation. Personally, I think core network latency will play a larger role in end-to-end latency, and it will take a while and a huge investment to restructure and optimize the overall architecture of the core network.

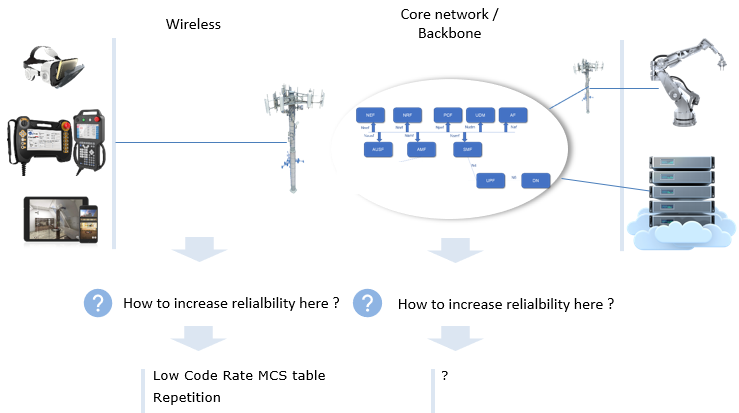
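To make the RAN side vs core network side split a little more concrete, here is a minimal sketch of an end-to-end latency budget. The segment names and all the millisecond values are hypothetical placeholders that I made up for illustration; they are not taken from any specification or measurement.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Segment:
    name: str
    side: str          # "RAN" or "Core" (a simplified two-way split)
    latency_ms: float  # one-way latency contribution, hypothetical value


# Hypothetical path, for illustration only.
EXAMPLE_PATH = [
    Segment("air interface", "RAN", 1.0),
    Segment("gNB processing", "RAN", 1.0),
    Segment("transport to core", "Core", 3.0),
    Segment("UPF and data network", "Core", 5.0),
]


def total_ms(segments):
    """End-to-end latency is the sum of all segment latencies."""
    return sum(s.latency_ms for s in segments)


def share_by_side(segments):
    """Fraction of the end-to-end latency spent on each side."""
    total = total_ms(segments)
    shares = {}
    for s in segments:
        shares[s.side] = shares.get(s.side, 0.0) + s.latency_ms / total
    return shares


if __name__ == "__main__":
    print(f"end-to-end: {total_ms(EXAMPLE_PATH):.1f} ms")
    for side, share in share_by_side(EXAMPLE_PATH).items():
        print(f"{side}: {share:.0%} of the budget")
```

With placeholder numbers like these, shaving the air interface alone does little for the total, which is the point made above about the core network side.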

As the name implies, URLLC is not only about latency reduction. Reliability improvement is also important to meet the very high standards of URLLC. I think this part would be even more challenging than reducing the latency. A common trick to increase the reliability on the RAN side is to use an MCS table with low code rates and repeated data transmission. Unfortunately this results in reduced throughput, but it is not easy to think of any other means of increasing reliability without sacrificing some throughput. In addition, we would need some solutions to increase the reliability on the core side (i.e., reliability over the core side data path), but as of now (Mar 2021) I don't have any specific knowledge on how to improve core side reliability. I will update this as I learn further.
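The trade-off between repetition and throughput can be sketched with a very simple model. This is only a sketch under strong assumptions: each repetition fails independently with the same block error rate (`bler`), and sending a packet `k` times costs `k` times the radio resource. Real channels are correlated and real receivers combine repetitions, so treat the numbers as illustrative only. The function names are mine, not from any specification.

```python
def residual_error(bler: float, repetitions: int) -> float:
    """Probability that every one of `repetitions` copies is lost,
    assuming independent losses with the same per-copy error rate."""
    if not 0.0 <= bler < 1.0:
        raise ValueError("bler must be in [0, 1)")
    if repetitions < 1:
        raise ValueError("repetitions must be >= 1")
    return bler ** repetitions


def min_repetitions(bler: float, target_error: float) -> int:
    """Smallest repetition count whose residual error meets the target."""
    if not 0.0 < target_error < 1.0:
        raise ValueError("target_error must be in (0, 1)")
    k = 1
    while residual_error(bler, k) > target_error:
        k += 1
    return k


if __name__ == "__main__":
    target = 1e-5  # i.e. 99.999% reliability
    for bler in (0.2, 0.05, 0.01):
        k = min_repetitions(bler, target)
        # Crude cost model: k copies of every packet -> 1/k of the throughput.
        print(f"BLER {bler}: {k} repetitions, relative throughput {1 / k:.2f}")
```

Even in this idealized model, reaching a 1e-5 error target from a few percent per-transmission error rate costs a large fraction of the throughput.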

What Causes the Delay?

As the term implies, a critical component of URLLC (Ultra-Reliable Low Latency Communication) is delay; more strictly, it is all about reducing delay. To reduce delay, we need to understand the source of the delay in the first place.

Why is minimizing latency so crucial? In many next-generation applications, even milliseconds matter. Think of remote surgery, where a surgeon needs real-time feedback from robotic instruments, or autonomous vehicles, where split-second decisions can be the difference between safety and danger. In these scenarios, traditional network communication is not sufficient. URLLC aims to tackle these delays with a range of techniques that provide very fast and highly reliable communication.

In this note, we will look into some of the major sources of delay. The initial list came from Ultra-Reliable Low-Latency in 5G: A Close Reality or a Distant Goal? (Arman Maghsoudnia), and further details will be added as I learn more.

Sources of the Delay

There are different sources of latency that hinder the achievement of URLLC in 5G. Each source contributes uniquely to the overall latency, and their interplay is critical in determining system performance. URLLC is a key feature introduced in 5G to support mission-critical applications such as autonomous vehicles, remote surgery, industrial automation, and augmented reality, which demand extremely low latency (as low as 0.5 ms) and high reliability (99.999%). Despite years of theoretical research and advancements, practical implementations of URLLC often fall short of these stringent requirements due to bottlenecks in system design, protocol inefficiencies, and hardware/software limitations.

Deterministic vs Non-deterministic Latencies

Latency in 5G systems can be broadly divided into deterministic and non-deterministic latencies, according to how predictable the delays are during data transmission and processing. Deterministic latency refers to delays that are predictable and consistent. These latencies occur in processes or components where the timing behavior is predefined and not subject to significant variation.

Non-deterministic latency, on the other hand, arises from processes or components where delays are variable and unpredictable. These latencies are often caused by dynamic factors, such as system load, shared resources, or environmental conditions.
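The difference matters because a URLLC target is about the tail of the latency distribution, not the average. The sketch below models each packet's latency as a fixed (deterministic) part plus a random (non-deterministic) part. The exponential distribution for the random part and all the numeric values are assumptions chosen only for illustration, not a model taken from the referenced paper.

```python
import math
import random


def simulate_latency_ms(n, fixed_ms, mean_jitter_ms, seed=0):
    """Latency samples: a constant part plus an exponentially distributed
    random part (the distribution is an illustrative assumption)."""
    rng = random.Random(seed)
    return [fixed_ms + rng.expovariate(1.0 / mean_jitter_ms) for _ in range(n)]


def percentile(samples, q):
    """Nearest-rank percentile for q in (0, 100]."""
    ordered = sorted(samples)
    rank = math.ceil(q / 100.0 * len(ordered))
    rank = min(len(ordered), max(1, rank))
    return ordered[rank - 1]


def miss_ratio(samples, budget_ms):
    """Fraction of packets that exceed the latency budget."""
    return sum(1 for s in samples if s > budget_ms) / len(samples)


if __name__ == "__main__":
    # Hypothetical numbers: 0.5 ms fixed delay, 0.1 ms mean random delay.
    samples = simulate_latency_ms(n=200_000, fixed_ms=0.5, mean_jitter_ms=0.1)
    for q in (50, 99, 99.999):
        print(f"p{q}: {percentile(samples, q):.3f} ms")
    print(f"fraction above 1 ms: {miss_ratio(samples, 1.0):.5f}")
```

In this toy model the median stays close to the deterministic part, while the 99.999th percentile is dominated by the non-deterministic part, which is why the unpredictable components are the harder problem for URLLC.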

PHY/MAC Enhancement

Even though I haven't seen much real implementation (UE and network) or real network deployment in Release 15 as of now (Jan 2021), there are features in Release 15 that were introduced with URLLC in mind. The list of these features is well summarized in the 5G Americas white paper as follows:

As you may guess, we would need a lot of PHY/MAC enhancements to make this possible. Those enhancements are described in RP-191584 and TR 38.824 V16.0.0 (2019-03). As you can see, they require enhancements across all physical channels and MAC scheduling.
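As one concrete illustration of how a PHY-level choice affects latency, the sketch below uses the NR scalable numerology (subcarrier spacing of 15 kHz x 2^mu, slot duration of 1 ms / 2^mu, 14 OFDM symbols per slot with normal cyclic prefix) and short transmissions of 2, 4 or 7 symbols. Picking numerology and short transmissions as the example is my own choice, not something listed in the text above, and the duration formula treats all symbols as equally long, which is an approximation.

```python
SYMBOLS_PER_SLOT = 14  # normal cyclic prefix


def subcarrier_spacing_khz(mu: int) -> int:
    """NR subcarrier spacing for numerology index mu."""
    return 15 * 2 ** mu


def slot_duration_ms(mu: int) -> float:
    """NR slot duration for numerology index mu."""
    return 1.0 / 2 ** mu


def transmission_duration_ms(mu: int, n_symbols: int) -> float:
    """Approximate airtime of an n-symbol transmission (equal-length
    symbols assumed; the slightly longer first-symbol CP is ignored)."""
    if not 1 <= n_symbols <= SYMBOLS_PER_SLOT:
        raise ValueError("n_symbols must be between 1 and 14")
    return slot_duration_ms(mu) * n_symbols / SYMBOLS_PER_SLOT


if __name__ == "__main__":
    for mu in range(4):  # mu = 0..3, i.e. 15 kHz to 120 kHz
        row = ", ".join(
            f"{n} sym: {transmission_duration_ms(mu, n):.4f} ms"
            for n in (2, 4, 7, 14)
        )
        print(f"mu={mu} ({subcarrier_spacing_khz(mu)} kHz): {row}")
```

This covers only the airtime of a single transmission; scheduling, processing and HARQ delays come on top of it.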

Presentations on YouTube

[1] URLLC Standardization and Use Cases | ITN WindMill Project
[2] Can todays Internet protocols deliver URLLC?
[3] Teaser: 5G Remote Surgery - Fact or Fiction?
[4] The Okay, But How? Show, Episode 2: 5G and low latency
[5] Wireless Access for URLLC | ITN WindMill Project
[6] neXt Curve Webcast: URLLC Myths and Realities
[7] Deterministic Networking for Real-Time Systems (Using TSN) - Henrik Austad, Cisco Systems
[8] AWS Wavelength - Edge Computing for 5G Networks
[9] What is Edge Computing and its Impact on 5G?
[10] Keynote: Network of Tomorrow - 5G and Edge - Rajesh Gadiyar, Intel
[11] 5G Connected Edge Cloud - Keynote by Andre Fuetsch, AT&T
[12] Aether - Managed 5G Edge Cloud Platform
[13] 5G network slicing: automation, assurance and optimization of 5G transport slices (Nokia)
[14] AREA Webinar | The Challenges of Enterprise AR Implementation
[15] Technical Challenges of Effective VR 360 Delivery
[16] B5: Overcoming the Technical Challenges of Delivering VR Video
[17] Why Virtual Reality Is STRUGGLING
[18] AR VR Women - Silicon Valley @ Nvidia - Technical Challenges for VR and AR - 2016 06 15
[19] R&S Thirty-Five: C-V2X and end-to-end application testing
[20] Intermediate: Vehicle to Everything (V2X) Introduction
[21] V2X Technology and Deployment : As of Sep. 2020
[22] What is V2X - V2X Communciation Explained Part 1
[23] DSRC or 5G?
[24] How Can C-V2X Create an Environment that Improves Quality of Life for Everyone? (GSMA)
[25] Dr. Javier Gozalvez - V2X Networks for Connected and Automated Driving - IEEE LCN 2019 Keynote (IEEE)
[26] What is TSN Part 1: Where We Are Today
[27] What is TSN Part 2: What is Time-Sensitive Networking?
[28] What is TSN Part 3: Why is Time-Sensitive Networking Important for Automation?
[29] 5G and the Future of Connected Cars

Video Demo

[1] Taking factory automation to the next level with Audi through URLLC
[2] Field Trials on 5G mMTC and uRLLC in Japan
[4] Nokia 5G Demonstration Video 5G: driving the automation of everything
[5] Part 10: 5G Use cases - 5G for Absolute Beginners
[6] Powering safer vehicle production with 5G
[7] 5G for Robotics: Ultra-Low Latency Control of Distributed Robotic Systems
[8] Low-latency communication for Industrial IoT Demo
[9] Inside Ericsson King's College London 5G Tactile Internet Showroom
[10] Achieving industrial automation protocols with 5G URLLC (Ericsson)
[11] 5G: A Game Changer for Augmented Reality, Virtual Reality and Mixed Reality (Verizon)
[12] 5G enabled VR GLASSES are COMING in 2021 | HAND TRACKING SUPPORT! | HP REVERB G2 images 'Leaked'
[13] NTT DoCoMo demos VR control of robots over 5G
[14] Best THEATER Apps For The Oculus Quest
[15] SKTelecom 5G]SK Telecom Uses 5G AR to Bring Fire-Breathing Dragon to Baseball Park (SKTelecom)
[16] IMR Wireless VR streaming over 5G
[17] Oculus Quest XR2 - 5G, 8K Resolution, and SEVEN Cameras!?
[18] XR - The Merging of Augmented Reality AR, Virtual Reality VR and Mixed Reality in 2020
[19] Why Microsoft Uses Virtual Reality Headsets To Train Workers
[20] Inside Imogen Heaps cutting-edge VR concert | The Future of Music with Dani Deahl
[21] Wave | Virtual Concerts (Mixed Reality (XR))
[22] Experience VR concerts and move around freely! - NOYS VR gameplay
[23] CES 2021 brings INSANE new VR Technology
[24] HoloLens 2 AR Headset: On Stage Live Demonstration
[25] HoloLens 2: inside Microsoft's new headset
[27] Demo: Network Slicing with Blue Planet 5G Automation
[28] Ericsson showed live end to end 5G network slicing at Mobile World Congress LA
[29] ZTE 5G End to End Network Slicing Demo
[30] Oculus Quest 2 Review - 100 Hours Later
[31] The Evolution of Virtual Reality by 2025
[32] V2X Technology and Deployment : As of Sep. 2020
[33] 5G C-V2X Technology Evolution Simulation (Qualcomm)
[34] Introducing Cellular V2X (Qualcomm)
[35] Honda V2X Communications and automated driving (Honda)
[36] Cellular V2X paving the path to 5G (Qualcomm)
[37] 5G and the Future of Connected Cars
[38] Autotalks in a major live C-V2X testing on the streets of Shanghai
[39] Boneworks - Next Gen VR Gameplay!
[40] Is Your Internet FAST Enough?
[41] What Is the Best Internet Speed for Gaming? [Simple Guide]
[42] Testing Google Stadia at 3 Different Connection Speeds!
[43] Oculus Quest Virtual Desktop Ultimate Low Latency Setup - Best Hardware For 20ms Latency
[44] Virtual Desktop Settings: Ultimate Performance, Graphics & Low Latency: Streamer App & Streaming Tab
[45] Wireless VR Sim Racing is here! (90hz low latency step by step guide for Oculus Quest 2)
[46] Qualcomm Technologies and Siemens Drive the Future of Industrial Manufacturing with 5G (Qualcomm)
[47] Qualcomm Snapdragon XR2 5G Platform turns the ordinary into extraordinary XR (Qualcomm)
[48] Qualcomm 5G NR Industrial IoT Demo (Qualcomm)
[49] Qualcomm, Bosch highlight 5G as an industrial enabler (Qualcomm)

Papers/Whitepaper
