
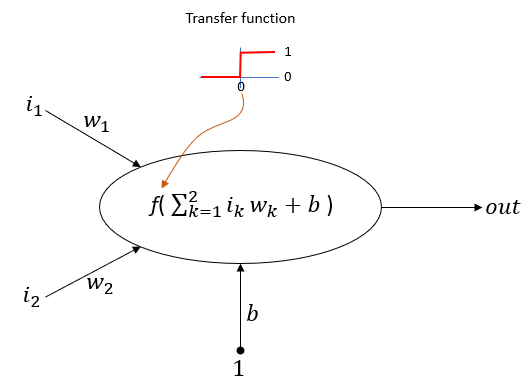

As you may know (or sense), the name 'Neural Network' suggests a kind of algorithm that tries to mimic the nervous system of a biological organism (like the human brain). As you may also know, almost every biological organism is made up of a basic unit called a 'cell' (I used to be a biologist a few decades ago, and as a biologist many things pop up in my mind that contradict this statement, but let's not get too deep into that). Anyway, we (especially scientists and engineers) like to analyze a system as a combination of simple basic units. By the same logic, the nervous system is also made up of a basic unit, and this basic unit is called a 'neuron'. If we compare a neural network with the biological nervous system, you can easily guess that there must be some basic unit that makes up the neural network. That unit is called a Perceptron. The original concept of the Perceptron comes from this paper (Ref [1]), but you do not need to read the paper unless you are seriously interested in the origin of things.

In this note, I will talk about the simplest neural network and how it works. If you are seriously interested in neural networks, you should understand this structure completely and be able to trace the whole learning process by hand. If you just read the text, you may think you understand it while reading, but you will get confused 10 minutes after you close the book.

There are a few basic things that you MUST understand very clearly. Here is the list; these are the main things that you will learn from this page by working through them by hand.

Now let's go through how this network works, step by step. I strongly suggest that you draw this network and the learning process with pen and paper. Each step has its own mathematical background that sounds scary, like the matrix inner product, gradient descent, etc. But before diving into the mathematics, just follow these steps blindly and let your brain form a clear picture.

The objective is to minimize the error function (NOTE: the 1/2 in the following equation is there purely for mathematical convenience, because it cancels the factor of 2 that appears when we take the derivative during gradient descent):

We update weights using the gradient of the error:

For a particular weight

Putting it all together:
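As a sketch of the four steps above in one place, assuming the usual squared-error notation: $t_k$ is the desired output for training sample $k$, $y_k$ is the network output, $x_{k,i}$ is the $i$-th input of that sample, $w_i$ is the corresponding weight, $b$ is the bias, and $\eta$ is the learning rate. These symbols are assumptions chosen for illustration and may differ from the ones used in the figures. The derivative is taken with a linear output; the hard-limit function used in the example later is not differentiable, so the rule there has the same form but uses the thresholded output.

```latex
\begin{aligned}
E &= \frac{1}{2}\sum_{k}\left(t_k - y_k\right)^2
  && \text{(error function)} \\
w_i &\leftarrow w_i - \eta\,\frac{\partial E}{\partial w_i}
  && \text{(gradient descent update)} \\
\frac{\partial E}{\partial w_i} &= -\sum_{k}\left(t_k - y_k\right)x_{k,i},
  \quad y_k = \sum_{i} w_i\,x_{k,i} + b
  && \text{(gradient for a particular weight)} \\
w_i &\leftarrow w_i + \eta\sum_{k}\left(t_k - y_k\right)x_{k,i}
  && \text{(putting it all together)}
\end{aligned}
```

Note how the 1/2 disappears in the third line: differentiating the square brings down a factor of 2 that cancels it.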

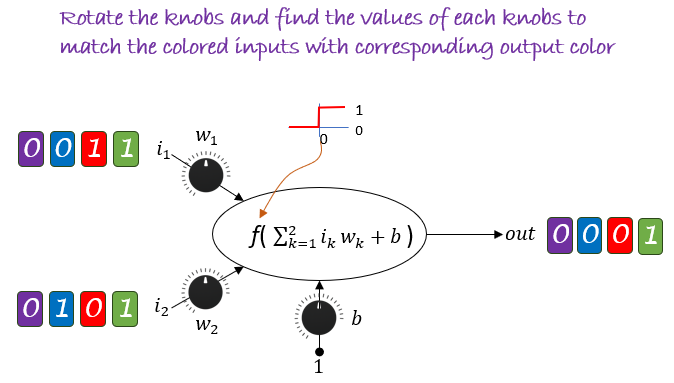

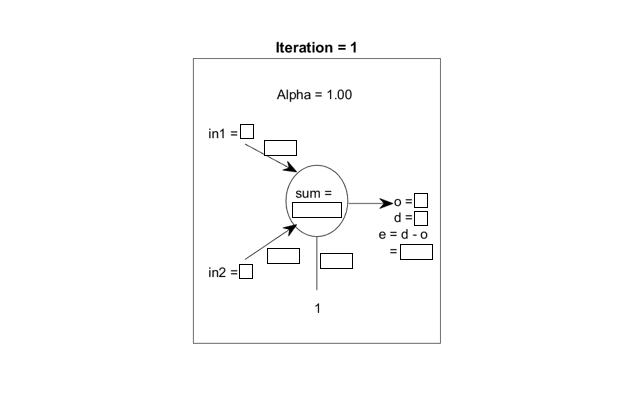

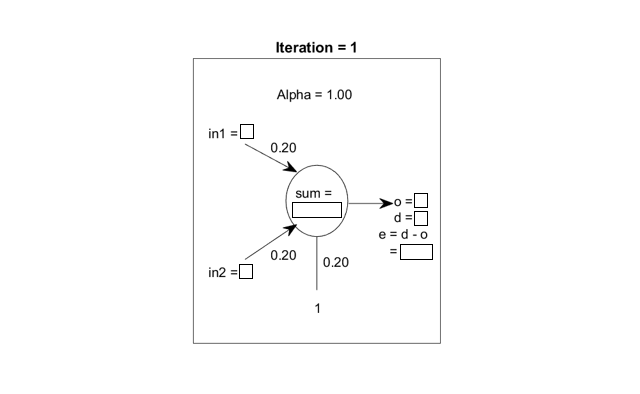

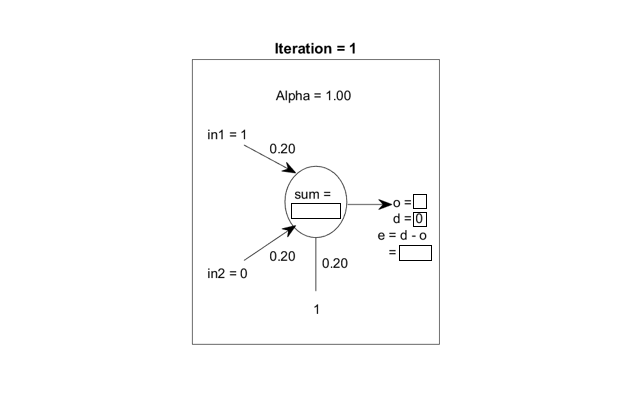

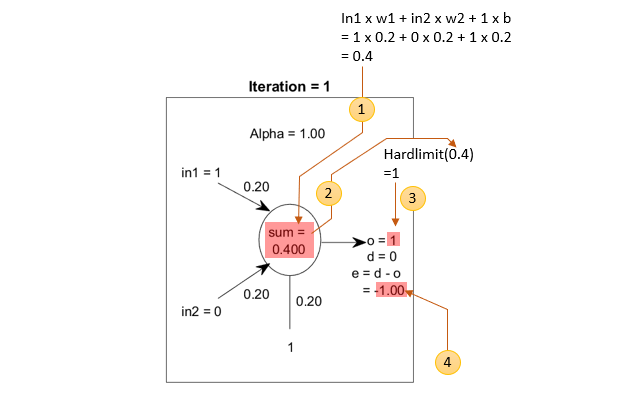

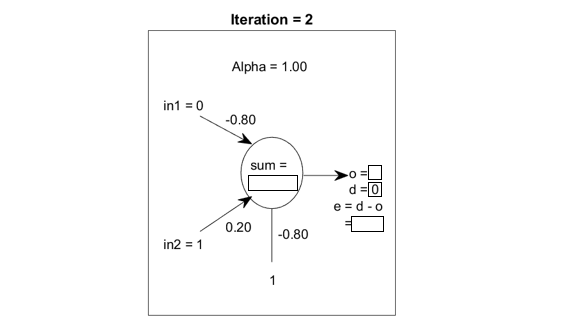

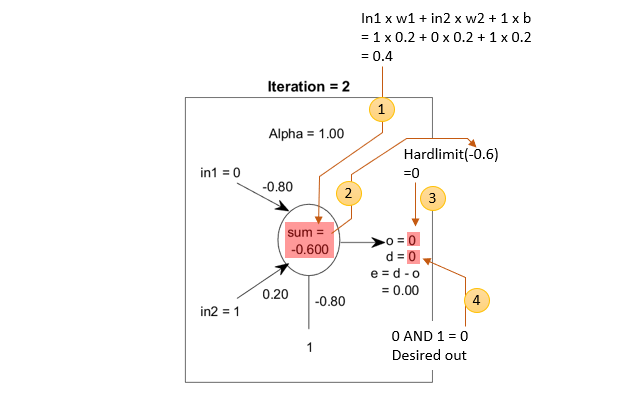

Example : Perceptron for AND logic

In this tutorial, I will go through the process of a perceptron learning the AND gate logic. This is one of the most common examples for explaining how the perceptron works. In this example, I will show only the first cycles of the learning process. In a real implementation, you would need to repeat the same process many times until the learning reaches the correct state. I strongly, strongly, strongly recommend that you get some sheets of paper and a pen, and go through each and every calculation on your own. Even better, repeat this cycle several more times and see how each iteration gets closer to the final learned state. You can get an example showing 50 iterations of the process from my visual note linked here. Going through at least a few iterations with pen and paper on your own will give you a much better understanding than just reading tens of documents. After the learning process is complete, the network should be able to implement the following truth table (the output is 1 only when both inputs are 1, and 0 otherwise).

i) Calculate the sum of the products of the inputs and weights, plus the bias value, as in step (1).

ii) Put the sum into the transfer function of the perceptron. In this case, Hardlimit() is used as the transfer function of the cell (perceptron). This is step (2).

iii) The result of the transfer function becomes the output value of the cell (perceptron). This is step (3).

iv) Calculate the error value. Since we know the output value and the desired value, we can calculate the error as in step (4).
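Steps (1) to (4) above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names `hardlimit` and `forward` are made up for this sketch, the hard limit is assumed to return 1 when its input is greater than or equal to 0, the error is assumed to be (desired value minus output), and the weights and bias in the usage line are placeholder values, not the ones from the figures.

```python
def hardlimit(n):
    """Hard-limit transfer function (assumed convention: 1 if n >= 0, else 0)."""
    return 1 if n >= 0 else 0


def forward(inputs, weights, bias, desired):
    """Run one input pattern through a single perceptron."""
    # Step (1): sum of (input * weight) over all inputs, plus the bias.
    n = sum(x * w for x, w in zip(inputs, weights)) + bias
    # Steps (2) and (3): the transfer function result is the cell's output.
    output = hardlimit(n)
    # Step (4): error, assumed here to be desired value minus output.
    error = desired - output
    return n, output, error


# Placeholder values: input (0, 1), zero weights, zero bias, desired AND output 0.
n, output, error = forward([0, 1], [0.0, 0.0], 0.0, 0)
print(n, output, error)
```

A nonzero error means this input pattern is currently misclassified, and that is what drives the weight and bias updates.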

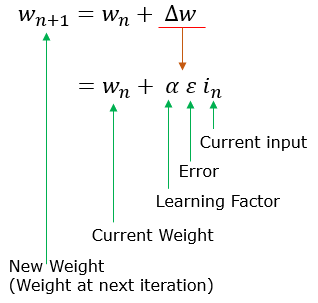

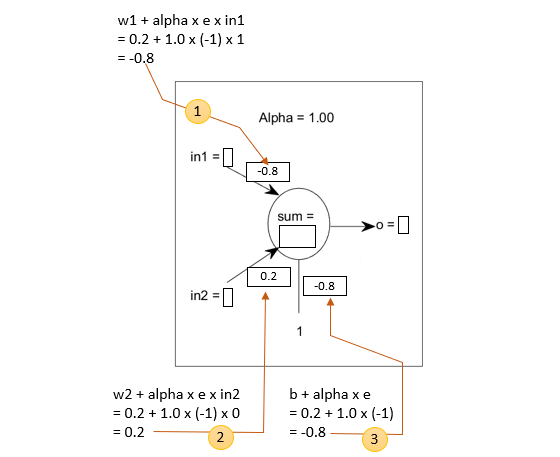

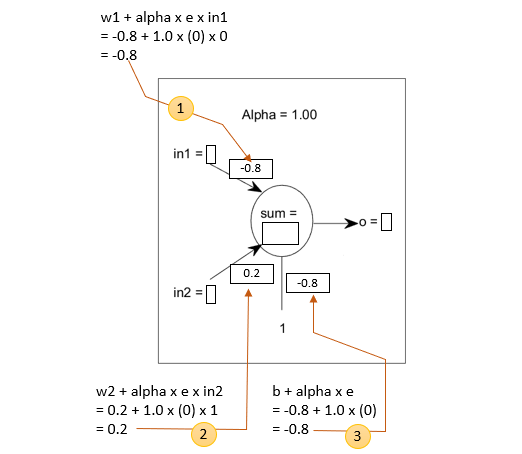

The following shows how the weight value gets updated.

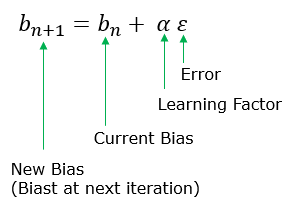

The following shows how the bias value gets updated.

You can apply this rule to our example and get the updated values as shown below.
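To see the updates repeated until the network gets every row of the AND table right, here is a minimal Python sketch. It assumes the standard perceptron learning rule (new weight = old weight + learning rate × error × input, new bias = old bias + learning rate × error). The learning rate of 1.0, the zero initial weights and bias, and the names `hardlimit` and `train_and_gate` are assumptions for illustration and are not taken from the figures.

```python
def hardlimit(n):
    """Hard-limit transfer function (assumed convention: 1 if n >= 0, else 0)."""
    return 1 if n >= 0 else 0


def train_and_gate(learning_rate=1.0, max_epochs=100):
    """Train a single perceptron on the AND truth table."""
    # (inputs, desired output) for each row of the AND truth table.
    samples = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
    weights = [0.0, 0.0]  # assumed starting point
    bias = 0.0            # assumed starting point

    for epoch in range(1, max_epochs + 1):
        mistakes = 0
        for inputs, desired in samples:
            n = sum(x * w for x, w in zip(inputs, weights)) + bias
            error = desired - hardlimit(n)
            if error != 0:
                mistakes += 1
                # Weight update: w_new = w_old + learning_rate * error * input
                weights = [w + learning_rate * error * x
                           for w, x in zip(weights, inputs)]
                # Bias update: b_new = b_old + learning_rate * error
                bias += learning_rate * error
        if mistakes == 0:
            # A full pass with no errors: every row is classified correctly.
            return weights, bias, epoch
    raise RuntimeError("no error-free pass within max_epochs")


weights, bias, epochs = train_and_gate()
print("weights:", weights, "bias:", bias, "epochs:", epochs)
```

Printing the weights and bias after each update inside the loop is a handy way to check your pen-and-paper calculations against the program.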

I have just gone through each and every step of the operation of a single perceptron. If you go through this procedure over and over, the network will eventually learn to produce the proper output for every input in the AND truth table.

Reference

[1] THE PERCEPTRON: A PROBABILISTIC MODEL FOR INFORMATION STORAGE AND ORGANIZATION IN THE BRAIN
