
Transformer

Imagine a helpful AI that can understand and generate human-like text, translate languages, or even write different kinds of creative content. A key technology that makes this possible is called the "Transformer" model. Think of it like a really smart way for a computer to pay attention to different parts of a sentence or piece of information all at once, instead of just reading word by word. This allows it to understand context and relationships between words much better than older methods, leading to more accurate and nuanced results in many AI applications.

Unlike older methods that read text word by word, a transformer looks at entire sentences or even whole paragraphs at once. This approach allows the model to pick out the most important words and understand how they relate to each other. Because of this, transformer models are very effective at tasks like translating languages, summarizing text, and answering questions. In simple terms, they help computers process language in a smarter and faster way.

It's true that there were other AI models before Transformers, so why did this new model become so popular?

Transformers became the dominant model in AI because they solve key problems that older models struggled with. Traditional models like recurrent neural networks (RNNs) and long short-term memory (LSTM) networks process language sequentially, reading text one word at a time. Because each step depends on the result of the previous one, the work cannot be spread across the whole sequence at once, which makes these models slow to train, especially on long sentences or documents. Transformers, on the other hand, use a mechanism called self-attention, which allows them to look at all the words in a sentence at once and determine which ones are most relevant to each other. This makes them much faster to train and better at understanding context.

Another major advantage is that transformers can handle long-range dependencies more effectively. In older models, if a word at the beginning of a sentence was related to a word at the end, the connection could get lost as the model processed the sentence step by step. Transformers solve this problem by allowing all words to influence each other directly, making them much better at tasks like translation, summarization, and generating human-like text.

Finally, transformers are highly scalable. Their design makes it possible to train them on huge datasets using powerful hardware, leading to breakthroughs like GPT and BERT, which power many modern AI applications. Because of these advantages—speed, accuracy, and scalability—transformers have replaced most other models in natural language processing and are now expanding into fields like computer vision and drug discovery.

- Better Context Understanding – Transformers can see the entire sentence or paragraph at once, capturing relationships between words no matter how far apart they are.

- Example: Imagine reading a sentence like "The bank is on the river bank." Older models struggled with this because they processed words one by one. Transformers, however, can look at all the words at once and understand the relationships between them. This helps them grasp the true meaning, like knowing whether "bank" refers to a financial institution or the side of a river.

- Example: In "The cat that chased the mouse was black," a transformer knows that "black" describes "cat," even though "mouse" is in between.

- Parallel Processing for Speed – Older models, like RNNs and LSTMs, were like reading a sentence word by word, taking their time. Transformers, on the other hand, are like speed readers. Because self-attention has no step-by-step recurrence, they can process every word of a training sentence at the same time. This parallel processing makes training on huge amounts of text dramatically faster on modern GPUs. (When a model like GPT generates text, it still produces one token at a time, but each step is fast enough that a long essay can appear in seconds.)

- Example: Because an entire training sequence can be processed in one pass, GPT-style models can be trained on far more text than was practical for RNNs.

- Long-Range Dependencies – Older models tend to forget important details when sentences get too long, while transformers keep track of distant words effectively. Imagine trying to remember the beginning of a long story. Older AI models were like that: they'd often lose key details from earlier in a sentence or paragraph, especially if it was really long. Transformers are much better at this. They handle "long-range dependencies" well, meaning they can keep track of information even when it is separated by many words.

- Example: When translating a paragraph, a Transformer can easily remember the subject of a sentence, even if it's way back at the beginning, and make sure the verb agrees with it correctly. It's like they have a super-powered ability to connect the dots across long stretches of text.

- Scalability with Big Data – Transformers are built for the age of big data. They scale well with massive datasets and powerful hardware (GPUs), which means they can be trained on enormous amounts of text and code, and that training has led to huge improvements in their abilities.

- Example: Think of models like GPT-4 and BERT: they've been trained on truly gigantic collections of information, and that's a big part of why they're so good at understanding language, generating text, and even writing code. In general, more data and larger models have led to better results, and Transformers are designed to handle that scale.

- Expanding Beyond Language – While Transformers first made a splash in the world of language, their impact has gone way beyond just text. Turns out, the core ideas behind Transformers are incredibly versatile. Researchers are now using them in all sorts of fields, from computer vision (helping computers "see" and understand images) to drug discovery and even the incredibly complex world of protein folding.

- Example: A prime example of this is AlphaFold 2, developed by DeepMind. It uses an attention-based, Transformer-style architecture to predict how proteins fold, a problem that scientists had been working on for decades. This has had a major impact on biology and opened up new possibilities in medicine and other areas. So, while they started with language, Transformers are now reshaping many different areas of science and technology.

Understanding how Transformers work requires grasping some key terminology and concepts. This isn't just about knowing the buzzwords; it's about building a solid foundation to truly appreciate the power and potential of this technology. This overview will break down the essential building blocks of Transformers, from the fundamental idea of attention to more nuanced concepts like positional encoding and multi-head attention. By familiarizing yourself with these terms, you'll be well-equipped to delve deeper into the world of Transformers and understand how they're revolutionizing artificial intelligence.

Each of these items would take hours of reading and practice to understand in detail. I list them here only to make readers familiar with the basic terms, even if they are not fully understood yet. Whenever you have the time and interest, pick one of these items, find a tutorial (YouTube has many), and work through it. Repeat the process and you will gradually become comfortable with these concepts.

- Self-Attention – The core mechanism of transformers that allows the model to weigh the importance of different words in a sentence, regardless of their position.

- Example: In “She gave the book to John because he loves reading,” self-attention helps the model recognize that “he” refers to “John.”

- Attention Mechanism – A method that enables the model to focus on the most relevant words when making predictions.

- Example: When translating “The sky is blue” into French, attention ensures that “sky” is properly linked to “ciel.”

- Positional Encoding – Since transformers process words simultaneously (instead of sequentially like RNNs), positional encoding helps the model understand word order.

- Example: Without positional encoding, “The cat chased the dog” and “The dog chased the cat” might seem identical to the model.

- Multi-Head Attention – Instead of using a single attention mechanism, transformers run several attention "heads" in parallel, each with its own learned parameters, to capture different relationships between words.

- Example: While one head might focus on grammatical structure, another might look at meaning and context.

- Feedforward Neural Network – A small two-layer fully connected network applied to each word position independently after attention, helping refine the model's understanding of that word.

- Example: This step transforms each word's attention output into a richer representation, which helps later layers make better predictions in tasks like text generation.

- Transformer Block – The fundamental unit of a transformer model, consisting of self-attention and feedforward layers, stacked multiple times for deeper learning.

- Example: The original Transformer stacked 6 blocks in its encoder and 6 in its decoder; large models stack many more (the largest GPT-3 model uses 96).

- Encoder-Decoder Architecture – A structure where the encoder processes input text, and the decoder generates output, commonly used in translation models.

- Example: The original Transformer used this structure for machine translation, such as converting English sentences into French.

- Pretraining and Fine-Tuning – Transformers are first trained on vast amounts of text (pretraining) and then adjusted for specific tasks (fine-tuning).

- Example: BERT was pretrained on English Wikipedia and the BooksCorpus, and later fine-tuned for tasks like sentiment analysis.

- Tokenization – The process of breaking text into smaller units (tokens) that the model can understand.

- Example: “I’m happy” might be split into tokens: [“I”, “’m”, “happy”].

- Masking (for Training) – A technique where certain words in a sentence are hidden so the model learns to predict missing words.

- Example: In BERT, a sentence like “The [MASK] is blue” forces the model to learn that the missing word is “sky.”
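The feedforward step from the list above can be sketched in plain Python. This is a minimal illustration, assuming the two-layer form used in the original Transformer, FFN(x) = max(0, xW1 + b1)W2 + b2. The weights below are tiny hand-picked stand-ins for learned parameters (the original model used a model size of 512 and an inner size of 2048), and the helper names are hypothetical.

```python
def linear(x, W, b):
    """Multiply row vector x (length d_in) by W (d_in rows x d_out cols) and add bias b."""
    return [sum(x[i] * W[i][j] for i in range(len(x))) + b[j] for j in range(len(b))]


def relu(v):
    """Element-wise max(0, v)."""
    return [max(0.0, a) for a in v]


def feed_forward(x, W1, b1, W2, b2):
    """Two-layer position-wise network: expand, apply ReLU, project back."""
    return linear(relu(linear(x, W1, b1)), W2, b2)


def ffn_over_sequence(X, W1, b1, W2, b2):
    # The same weights are applied to every token position independently.
    return [feed_forward(x, W1, b1, W2, b2) for x in X]


# Toy sizes: model size = 2, inner size = 3 (hand-picked values, not learned).
W1 = [[1.0, 0.0, -1.0],
      [0.0, 1.0, 1.0]]
b1 = [0.0, 0.0, 0.0]
W2 = [[1.0, 0.0],
      [0.0, 1.0],
      [1.0, 1.0]]
b2 = [0.0, 0.0]

X = [[1.0, 2.0], [2.0, -1.0]]  # two token vectors
print(ffn_over_sequence(X, W1, b1, W2, b2))  # [[2.0, 3.0], [2.0, 0.0]]
```

Because the same function runs on each position separately, this step has no interaction between words; all mixing across words happens in the attention layers.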

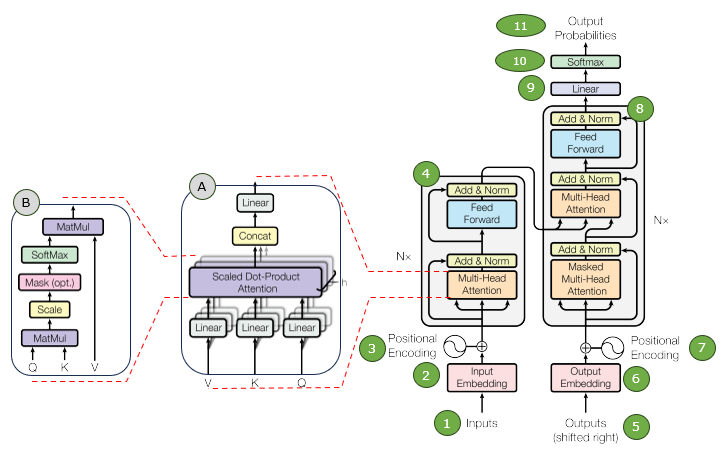

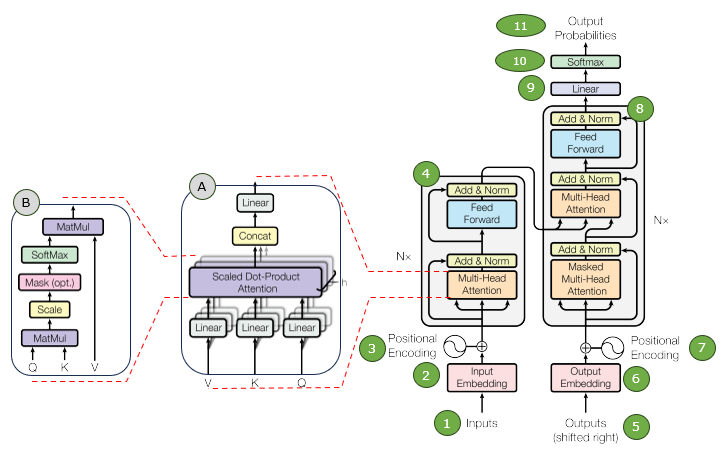

The Transformer architecture diagram from the paper Attention Is All You Need gives a high-level view of the model. Many different versions of this diagram exist, and the architecture itself has many variations, but this is the most widely known form.

Image source: Attention Is All You Need

Below is a breakdown of this diagram with short descriptions of each labeled block. (Unless you already have good prior knowledge of the Transformer, some of these descriptions may not be entirely clear yet, so don't be discouraged if they don't make full sense for now.)

(1). Inputs (Encoder):

This is the initial input to the encoder, the sequence you want to process (e.g., a sentence in English for translation).

The input and output of this stage are:

- Input: A sequence of tokens (words, sub-words, or characters), e.g., "The cat sat on the mat."

- Output: The same sequence of tokens, typically represented as integer IDs from the model's vocabulary, which the embedding step (explained next) turns into numerical vectors.

(2). Input Embedding (Encoder):

Converts the input tokens (words) into dense, continuous vector representations called embeddings that the Transformer can process. These vectors capture semantic meaning and relationships between words.

The input and output of this stage are:

- Input: A sequence of tokens (as integer IDs), e.g., "The cat sat on the mat."

- Output: A sequence of word embeddings (vectors), e.g., the word "The" becomes a vector like [0.2, 0.8, 0.5, ...]. Words with similar meanings end up with similar vectors.
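Stages (1) and (2) can be sketched together: each token is mapped to an integer ID, and each ID selects one row of an embedding table. This is a minimal sketch under simplifying assumptions: whitespace splitting stands in for a real sub-word tokenizer, the vocabulary is built from the sentence itself, and the table holds seeded random numbers where a trained model would hold learned values. The names `build_vocab`, `make_embedding_table`, and `embed` are hypothetical.

```python
import random


def build_vocab(tokens):
    """Assign each distinct token an integer ID in order of first appearance."""
    vocab = {}
    for tok in tokens:
        if tok not in vocab:
            vocab[tok] = len(vocab)
    return vocab


def make_embedding_table(vocab_size, d_model, seed=0):
    """One row per vocabulary entry; random placeholders for learned values."""
    rng = random.Random(seed)
    return [[rng.uniform(-1.0, 1.0) for _ in range(d_model)] for _ in range(vocab_size)]


def embed(tokens, vocab, table):
    """Look up the ID of each token, then the embedding row for each ID."""
    ids = [vocab[tok] for tok in tokens]
    return ids, [table[i] for i in ids]


tokens = "the cat sat on the mat".split()  # lowercased, whitespace-split (assumption)
vocab = build_vocab(tokens)
table = make_embedding_table(len(vocab), d_model=4)
ids, vectors = embed(tokens, vocab, table)
print(ids)                       # [0, 1, 2, 3, 0, 4]
print(vectors[0] == vectors[4])  # True: both positions hold the token "the"
```

Because the lookup ignores position, both occurrences of "the" get identical vectors; positional encoding (the next stage) is what tells them apart.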

(3). Positional Encoding (Encoder):

Adds information about the position of each word in the sequence to its embedding. Unlike RNNs, Transformers have no built-in awareness of word order, so this step is crucial for providing sequence information.

The input and output of this stage are:

- Input: Word embeddings (from Step 2).

- Output: Word embeddings with positional information added.
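One common choice, and the one used in Attention Is All You Need, is the sinusoidal encoding PE(pos, 2i) = sin(pos / 10000^(2i/d_model)) and PE(pos, 2i+1) = cos(pos / 10000^(2i/d_model)). The sketch below computes it in plain Python and adds it to a list of embeddings. Many later models learn position embeddings instead, so treat this as one illustrative option; the function names and the toy embedding values are hypothetical.

```python
import math


def positional_encoding(seq_len, d_model):
    """Sinusoidal position vectors: even dimensions use sin, odd dimensions use cos."""
    pe = []
    for pos in range(seq_len):
        row = []
        for i in range(d_model):
            # Dimensions 2k and 2k+1 share the frequency 1 / 10000^(2k / d_model).
            angle = pos / (10000 ** ((i - i % 2) / d_model))
            row.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
        pe.append(row)
    return pe


def add_positional_encoding(embeddings):
    """Element-wise sum of each embedding with the encoding for its position."""
    pe = positional_encoding(len(embeddings), len(embeddings[0]))
    return [[e + p for e, p in zip(emb, pos_vec)] for emb, pos_vec in zip(embeddings, pe)]


# Two identical word embeddings (e.g., "the" appearing twice) at positions 0 and 1.
same_word = [0.5, -0.2, 0.1, 0.3]
encoded = add_positional_encoding([same_word, same_word])
print(positional_encoding(1, 4)[0])  # [0.0, 1.0, 0.0, 1.0]
print(encoded[0] != encoded[1])      # True: position makes them different
```

The encoding is added rather than concatenated, so the vectors keep the same size as the embeddings.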

(4). Multi-Head Attention (Encoder):

Calculates the relationships between all words in the input sequence, determining which words are most relevant to each other. Each word "attends" to the other words in the sentence to build a representation of its contextual meaning. "Multi-head" means this is done several times in parallel, each time with a different set of learned parameters (an attention head).

The input and output of this stage are:

- Input: Word embeddings with positional encoding.

- Output: A set of attention-weighted embeddings, e.g., in "The cat sat on the mat," the model may learn that "cat" is more closely related to "sat" than to "mat."
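The attention computation in this block can be sketched for a single head in plain Python. This is a minimal sketch that assumes Q = K = V = the input vectors themselves; in a real Transformer, each is first produced by a separate learned projection. Each row of the returned weights shows how much one word attends to every word, and each row sums to 1. The toy vectors are hand-picked, not learned.

```python
import math


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(Q, K, V):
    """Q, K, V are lists of row vectors, one per token."""
    d_k = len(K[0])
    outputs, weights = [], []
    for q in Q:
        # Similarity of this query with every key, scaled by sqrt(d_k).
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in K]
        w = softmax(scores)
        weights.append(w)
        # Output = attention-weighted sum of the value vectors.
        outputs.append([sum(w[j] * V[j][c] for j in range(len(V))) for c in range(len(V[0]))])
    return outputs, weights


# Toy 2-dimensional vectors for three tokens (hand-picked, not learned).
X = [[1.0, 0.0],   # token 0
     [0.0, 1.0],   # token 1
     [1.0, 1.0]]   # token 2
out, attn = scaled_dot_product_attention(X, X, X)
for row in attn:
    print([round(w, 3) for w in row])
```

Token 0 gives equal weight to itself and to token 2 (both share its first dimension) and less to token 1, which is the "which words are relevant to each other" behavior described above.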

Since this block is made up of multiple components, let me break it down further:

Function of Multi-Head Attention

- Purpose: Captures dependencies between words in a sentence.

- Mechanism: Uses multiple attention heads to extract different aspects of meaning.

- Example: In the sentence "The cat sat on the mat.", one attention head might focus on syntactic structure (e.g., subject-verb agreement: "cat" → "sat"), while another head might focus on semantic meaning (e.g., "mat" relates to "on").

Step-by-Step Breakdown

Step 1: Compute Query (Q), Key (K), and Value (V)

- Instead of a single attention mechanism, multiple heads process attention in parallel.

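- Each head has its own learned projection matrices $W_i^Q$, $W_i^K$, and $W_i^V$, which turn the input into that head's queries, keys, and values:

$$\text{head}_i = \text{Attention}(QW_i^Q,\; KW_i^K,\; VW_i^V)$$

- The outputs of all $h$ heads are concatenated and transformed using a final weight matrix $W^O$:

$$\text{MultiHead}(Q, K, V) = \text{Concat}(\text{head}_1, \dots, \text{head}_h)\,W^O$$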
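The Attention function applied inside each head is scaled dot-product attention, defined in Attention Is All You Need as follows. Here $d_k$ is the dimension of the key vectors; dividing by $\sqrt{d_k}$ keeps the dot products from growing too large before the softmax.

$$\text{Attention}(Q, K, V) = \operatorname{softmax}\!\left(\frac{QK^{\top}}{\sqrt{d_k}}\right)V$$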

Input and Output of Multi-Head Attention

- Input: Word embeddings + positional encoding

- Processing: Compute Q, K, V → Apply scaled dot-product attention → Combine multi-head outputs

- Output: Context-aware representations that improve downstream tasks
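Putting the pieces together, multi-head attention can be sketched as: project the input separately for each head, run scaled dot-product attention in each head, concatenate the head outputs, and apply the output matrix W^O. This is an illustration under stated assumptions: the projection matrices are seeded random stand-ins for learned weights, Q, K, and V all come from the same input X (self-attention), and the model size must be divisible by the number of heads (the original Transformer used 8 heads with a model size of 512). Helper names such as `multi_head_attention` are hypothetical.

```python
import math
import random


def matmul(A, B):
    """Multiply matrix A (n x m) by matrix B (m x p), both as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def transpose(M):
    return [list(col) for col in zip(*M)]


def softmax(v):
    m = max(v)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in v]
    total = sum(exps)
    return [e / total for e in exps]


def attention(Q, K, V):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(K[0])
    scores = matmul(Q, transpose(K))
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, V)


def random_matrix(rows, cols, rng):
    # Stand-in for a learned weight matrix.
    return [[rng.gauss(0.0, 0.5) for _ in range(cols)] for _ in range(rows)]


def multi_head_attention(X, num_heads, rng):
    """Self-attention with num_heads heads; X is a list of token vectors."""
    d_model = len(X[0])
    assert d_model % num_heads == 0, "d_model must be divisible by num_heads"
    d_head = d_model // num_heads
    head_outputs = []
    for _ in range(num_heads):
        # Each head gets its own projections W_i^Q, W_i^K, W_i^V.
        W_q, W_k, W_v = (random_matrix(d_model, d_head, rng) for _ in range(3))
        head_outputs.append(attention(matmul(X, W_q), matmul(X, W_k), matmul(X, W_v)))
    # Concatenate the heads' outputs for each token, then project with W^O.
    concat = [sum((head[t] for head in head_outputs), []) for t in range(len(X))]
    W_o = random_matrix(num_heads * d_head, d_model, rng)
    return matmul(concat, W_o)


X = [[1.0, 0.0, 1.0, 0.0],
     [0.0, 2.0, 0.0, 2.0],
     [1.0, 1.0, 1.0, 1.0]]  # 3 tokens, d_model = 4
Y = multi_head_attention(X, num_heads=2, rng=random.Random(42))
print(len(Y), len(Y[0]))  # 3 4
```

Splitting the model size across heads keeps the total cost close to that of one full-size head while letting each head learn a different pattern.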

Why is Multi-Head Attention Important?

- Handles long-range dependencies: Words in a sentence can relate even if they are far apart.

- Extracts multiple features: Each head learns different aspects of language (e.g., syntax, semantics).

- Improves translation & text generation: Ensures accurate word alignment in machine translation.

(5). Output (Shifted Right)

During training, the decoder’s input is shifted by one position to predict the next token.

The input and output of this stage are:

- Input: Previous output sequence.

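- Output: The sequence shifted one position to the right, with a start token in front, so the model learns next-word prediction. e.g., if the expected output is "I love AI", the decoder receives ["<start>", "I", "love"] and is trained to predict ["I", "love", "AI"]; at the last position it has seen "I love" and predicts "AI".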
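The shift can be sketched in a few lines of Python. This is a minimal illustration, assuming a hypothetical start token `<start>`; real tokenizers define their own special tokens. During training, the decoder input and the targets are built like this, and the decoder's causal mask ensures each position only sees the tokens before it.

```python
def shift_right(target_tokens, start_token="<start>"):
    """Decoder input = start token + target without its last token; labels = target."""
    decoder_input = [start_token] + target_tokens[:-1]
    return decoder_input, list(target_tokens)


dec_in, labels = shift_right(["I", "love", "AI"])
for t, label in enumerate(labels):
    # At position t the decoder may use dec_in[0..t] to predict labels[t].
    print(dec_in[: t + 1], "->", label)
```

This prints `['<start>'] -> I`, then `['<start>', 'I'] -> love`, then `['<start>', 'I', 'love'] -> AI`.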

(6). Output Embedding

Similar to input embedding, converts output text into vector representations.

The input and output of this stage are:

- Input: Tokenized output sequence.

- Output: Dense vector representation of words, e.g., "AI" → [0.45, -0.23, 0.89, ...]

(7). Positional Encoding (Decoder)

Adds positional information to the decoder input, just like in the encoder.

The input and output of this stage are:

- Input: Output embeddings.

- Output: Position-aware embeddings, e.g., they help distinguish between "I love AI" and "AI love I".

(8). Multi-Head Attention (Decoder)

Computes attention for previously generated words (autoregressive decoding).

The input and output of this stage are:

- Input: Decoder’s word embeddings.

- Output: Context-aware word representations, e.g., when generating "The cat," the model helps ensure that "cat" is correctly predicted given "The."

Since this block is made up of multiple components, let me break it down further:

Block 8 represents the Multi-Head Attention mechanism in the Transformer Decoder. It differs from Block 4 in the Encoder because the decoder must generate text sequentially, ensuring that future words are not visible before they are predicted.

Key Differences Between Block 8 (Decoder) and Block 4 (Encoder)

- Block 4 (Encoder): Uses one self-attention layer to capture word relationships in the input sentence.

- Block 8 (Decoder): Uses two attention layers:

- Masked Multi-Head Attention: Prevents the model from seeing future words.

- Encoder-Decoder Attention: Helps the decoder align its output with the input sentence.

Breakdown of Block 8 (Decoder Multi-Head Attention Mechanism)

Masked Multi-Head Self-Attention

- Function: Ensures the model only attends to previous words during text generation.

- Why is masking needed? Prevents the model from "cheating" by seeing future words before predicting them.

- Example: If the expected output is "I love AI", the model:

- At step 1, sees only "I" and predicts "love".

- At step 2, sees "I love" and predicts "AI".

- Masking blocks it from seeing "AI" when predicting "love".
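The masking rule above can be sketched as an additive mask: scores for future positions are set to negative infinity before the softmax, so their attention weights become exactly zero. This is a minimal plain-Python illustration; the score values are made up for demonstration, and the helper names are hypothetical.

```python
import math


def causal_mask(n):
    """mask[i][j] is 0.0 if position i may see position j (j <= i), else -inf."""
    return [[0.0 if j <= i else float("-inf") for j in range(n)] for i in range(n)]


def masked_softmax(scores):
    """Apply the causal mask to a square score matrix, then softmax each row."""
    mask = causal_mask(len(scores))
    weights = []
    for row, mask_row in zip(scores, mask):
        vals = [s + m for s, m in zip(row, mask_row)]
        top = max(vals)  # always finite: position i can always see itself
        exps = [math.exp(v - top) for v in vals]  # exp(-inf) == 0.0
        total = sum(exps)
        weights.append([e / total for e in exps])
    return weights


tokens = ["<start>", "I", "love"]
scores = [[1.0, 2.0, 3.0] for _ in tokens]  # made-up raw scores
for tok, row in zip(tokens, masked_softmax(scores)):
    print(tok, [round(w, 3) for w in row])
```

The first row puts all its weight on position 0, and every entry above the diagonal is zero, so no position can "peek" at a later word even though all positions are computed at once.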

Encoder-Decoder (Cross) Attention

- Function: Aligns the decoder output with relevant parts of the input sentence.

- Key Difference from Block 4: Instead of attending only to its own words, the decoder uses its current representations as queries and the encoder's outputs as keys and values.

- Example (Machine Translation):

- Input sentence: "Le chat dort." (French: "The cat sleeps.")

- Generated output: "The cat is sleeping."

- Cross-attention lets the decoder align "chat" with "cat" and "dort" with "is sleeping".
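Encoder-decoder attention uses the same attention formula, but the queries come from the decoder while the keys and values come from the encoder's outputs. The sketch below is a toy illustration under strong assumptions: the encoder outputs for "Le chat dort" and the decoder state for "cat" are hand-picked vectors (not produced by a trained model), and keys and values are used without learned projections.

```python
import math


def softmax(v):
    m = max(v)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in v]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(decoder_states, encoder_outputs):
    """Queries from the decoder; keys and values from the encoder."""
    d_k = len(encoder_outputs[0])
    results = []
    for q in decoder_states:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in encoder_outputs]
        w = softmax(scores)
        # Context vector: encoder outputs weighted by how relevant each one is.
        context = [sum(w[j] * encoder_outputs[j][c] for j in range(len(encoder_outputs)))
                   for c in range(d_k)]
        results.append((w, context))
    return results


source = ["Le", "chat", "dort"]
encoder_outputs = [[1.0, 0.0, 0.0],   # "Le"   (hand-picked)
                   [0.0, 1.0, 0.0],   # "chat"
                   [0.0, 0.0, 1.0]]   # "dort"
decoder_state_cat = [0.1, 2.0, 0.2]   # hypothetical decoder state while producing "cat"

weights, context = cross_attention([decoder_state_cat], encoder_outputs)[0]
best = max(range(len(source)), key=lambda j: weights[j])
print(source[best])  # chat
```

In a trained model these alignments are learned rather than built into the vectors, but the mechanism, with decoder queries scoring encoder keys, is the same.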

Feed-Forward Network

- Function: As in the encoder, a position-wise feed-forward network applies a non-linear transformation to each word's representation.

Summary of Differences from Block 4

- Block 4 (Encoder):

- Uses Multi-Head Self-Attention.

- No masking (sees full input sentence).

- Processes the entire input at once.

- Block 8 (Decoder):

- Uses Masked Multi-Head Attention + Encoder-Decoder Attention.

- Masking prevents future word visibility.

- Each word is predicted step-by-step.

Why This Matters

- Masking lets the model train on every position of a sentence in parallel while each prediction still sees only the words before it.

- Cross-Attention aligns generated words with relevant parts of the input.

- Improves performance in tasks like translation, summarization, and text generation.

(9). Linear (Final Fully Connected Layer)

Converts processed word representations into logits (raw prediction scores).

The input and output of this stage are:

- Input: Processed vector outputs from decoder.

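- Output: One raw score (logit) per word in the vocabulary, e.g., after "The", the scores might be [0.1, 0.9, 0.05, ...], where the highest score (0.9) belongs to "cat", the most likely next word. The softmax step that follows turns these scores into probabilities.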
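The final linear layer is a single matrix multiplication from the decoder's output vector to one score per vocabulary word. This is a minimal sketch with a made-up three-word vocabulary and hand-picked weights; in a real model the weight matrix has shape (model size × vocabulary size) and is learned (the original Transformer shared these weights with its embedding layers). The function name is hypothetical.

```python
def linear_to_logits(h, W, b):
    """Map hidden vector h (length d_model) to one logit per vocabulary word."""
    return [sum(h[i] * W[i][j] for i in range(len(h))) + b[j] for j in range(len(b))]


vocab = ["the", "cat", "mat"]   # made-up vocabulary
h = [0.5, -1.0]                 # hypothetical decoder output for the position after "The"
W = [[0.2, 1.0, 0.1],           # d_model = 2 rows, vocabulary size = 3 columns
     [-0.3, -2.0, 0.4]]
b = [0.0, 0.0, 0.0]

logits = linear_to_logits(h, W, b)
best = max(range(len(vocab)), key=lambda j: logits[j])
print(vocab[best])  # cat
```

The logits here are roughly [0.4, 2.5, -0.35]: unbounded scores, not probabilities, which is why a softmax follows.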

(10). Softmax (Decoder):

Converts the logits into probabilities by applying the softmax function. This gives a probability distribution over the vocabulary.

- Input: Logits from the Linear layer

- Output: Probability distribution over the vocabulary, e.g., for the next word in a translation, the softmax might output a probability of 0.8 for "the", 0.1 for "a", 0.05 for "it", etc.

This is a very simple step, but softmax is used so widely across deep learning models that it is worth looking at a little further.

The Softmax function is used in the final stage of the Transformer decoder to convert raw numerical scores (logits) into probabilities. This ensures that the model outputs a probability distribution over the vocabulary, helping it select the most likely next word.

What is Softmax?

- The softmax function takes a list of raw scores (logits) and normalizes them into a probability distribution.

- It ensures that the sum of all output probabilities is exactly 1.

- With greedy decoding, the word with the highest probability is selected as the predicted next word (other decoding strategies sample from the distribution instead).

Softmax Formula

Given a set of logits $z_1, z_2, \dots, z_n$, the softmax function is calculated as:

$$\text{Softmax}(z_i) = \frac{e^{z_i}}{\sum_{j=1}^{n} e^{z_j}}$$

Step-by-Step Example

Suppose the Transformer model generates the following logits for the next word in a sentence:

- Logits: "the" = 2.5, "a" = 1.2, "it" = 0.5

Step 1: Compute the exponential of each logit

- $e^{2.5} \approx 12.18$

- $e^{1.2} \approx 3.32$

- $e^{0.5} \approx 1.65$

Step 2: Compute the sum of exponentials

Sum = 12.18 + 3.32 + 1.65 = 17.15

Step 3: Divide each exponential by the sum

- P("the") = 12.18 / 17.15 ≈ 0.71

- P("a") = 3.32 / 17.15 ≈ 0.19

- P("it") = 1.65 / 17.15 ≈ 0.10

Final Output: The model assigns the highest probability (0.71) to "the", so it predicts "the" as the next word.
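The worked example can be checked with a short Python function. Subtracting the largest logit before exponentiating is a standard numerical-stability trick; it does not change the result, because the common factor cancels in the division. The final `max` is greedy selection, one of several possible decoding strategies.

```python
import math


def softmax(logits):
    m = max(logits)  # shift by the max so math.exp never overflows
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


words = ["the", "a", "it"]
probs = softmax([2.5, 1.2, 0.5])
for word, p in zip(words, probs):
    print(f"{word}: {p:.2f}")   # the: 0.71, a: 0.19, it: 0.10

prediction = words[max(range(len(words)), key=lambda j: probs[j])]
print(prediction)  # the
```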

Why is Softmax Important at this step?

- Converts logits into interpretable probabilities.

- Lets the model rank every candidate word, so the most likely next word can be selected.

- Produces valid probabilities (all positive and summing to 1) even when logits are negative or very large.

Summary

- Input: Raw logits from the linear layer.

- Processing: Softmax normalizes logits into probabilities.

- Output: Probability distribution over vocabulary (sum = 1).

- Prediction: With greedy decoding, the word with the highest probability is chosen.

Transformers are increasingly being adopted in the telecom industry, particularly in two key areas: Radio Access Network (RAN) and Core/OAM (Operations, Administration, and Maintenance). Their ability to process large-scale sequential data efficiently makes them well-suited for tasks such as network optimization, anomaly detection, and predictive maintenance.

Transformers are revolutionizing telecom by enhancing network efficiency, optimizing resources, and improving service quality across both RAN and Core/OAM. Their ability to process large-scale sequential data and learn complex patterns makes them a key enabler for AI-driven telecom networks, particularly in 5G and future 6G systems.

In the RAN, Transformers act like super-smart assistants: they predict network conditions to optimize resource allocation, help prevent equipment failures before they happen, and can even help design better networks in the first place. They also make networks more efficient by compressing crucial information and intelligently managing how signals are directed.

- Channel State Information (CSI) Prediction & Compression

- Transformers can predict and compress CSI feedback more efficiently than traditional methods, reducing overhead while maintaining accuracy. Imagine the network constantly trying to understand the radio environment – that's essentially what Channel State Information (CSI) is. It's crucial for good communication, but all that information takes up valuable bandwidth. Transformers are like CSI whisperers; they can predict what's going to happen with the radio signals, allowing them to compress the CSI feedback much more effectively than older methods. This means less data needs to be sent, freeing up space and boosting the overall efficiency of the network, which is especially important in advanced systems like massive MIMO. Think of it as the network getting a much clearer picture of the radio environment without having to shout every single detail.

- Example: Using a transformer-based model, CSI reports can be compressed while preserving quality, improving spectral efficiency in massive MIMO systems.

- Beam Management and Optimization

- In 5G and 6G networks, beamforming plays a crucial role in improving signal quality. Transformers can predict optimal beam directions based on historical patterns and real-time data. Think of beamforming as the network shining a spotlight on each user to give them the best possible signal. In 5G and 6G, this is super important, but figuring out where to aim that spotlight is tricky, especially when people are moving around. Transformers are like expert spotlight operators. They learn from past patterns and combine that with real-time information to predict the perfect beam direction. This AI-powered beam selection is a game-changer, especially in situations where users are moving quickly, like on a high-speed train. It means fewer dropped connections and a much smoother experience.

- Example: AI-driven beam selection using transformers reduces handover failures and improves user throughput in high-mobility scenarios like high-speed trains.

- Interference Management & Scheduling

- Transformers enhance dynamic resource allocation by analyzing interference patterns across multiple cells and adjusting power levels accordingly. Imagine a crowded room where everyone's trying to talk at the same time – that's what interference is like in a cellular network. Transformers act like air traffic controllers for radio waves. They analyze how signals are interfering with each other across different cells and dynamically adjust power levels to minimize the chaos. This is incredibly important in ultra-dense networks where lots of cell towers are packed closely together. By predicting this inter-cell interference, Transformers can optimize power control strategies, ensuring that everyone gets a clear signal and that the network doesn't become a noisy mess.

- Example: In ultra-dense networks, transformer models can predict inter-cell interference and optimize power control strategies to minimize signal degradation.

- Intelligent Handover Decisions

- Unlike traditional rule-based handover algorithms, transformers can analyze multi-dimensional mobility patterns to improve handover success rates. When you're on your phone and move from one cell tower's coverage to another, a "handover" needs to happen seamlessly so you don't lose your connection. Traditional methods for doing this are kind of clunky, like having a fixed rule for when to switch. Transformers, on the other hand, are much smarter. They can analyze all sorts of information – your speed, how busy the network is, what kind of service you're using – to make much better handover decisions. So, instead of just relying on signal strength, a transformer model can dynamically adjust when to hand over, making the whole process much smoother and leading to fewer dropped calls or interrupted data. It's like having a personalized handover expert managing your connection.

- Example: Instead of a fixed RSRP threshold-based handover, a transformer model can dynamically adjust handover triggers based on user speed, network load, and QoS demands.

Transformers are bringing a new level of intelligence to the core of telecom networks as well. They're enabling proactive management by predicting potential problems before they disrupt service, from network congestion to security threats. They're also optimizing traffic flow in real-time, ensuring that network resources are used efficiently and that users get the best possible experience. Furthermore, Transformers are streamlining operations by automating complex configuration tasks and even helping engineers make sense of all the technical data they have to deal with. In essence, they're empowering telecom operators to build more resilient, responsive, and efficient core networks that can handle the growing demands of our connected world. They are moving the industry towards a more automated and intelligent future, where networks can practically manage themselves.

- Anomaly Detection & Fault Prediction

- Core networks generate massive logs and performance metrics. Transformers help in detecting anomalies and predicting failures before they cause network outages. Core networks are constantly generating a huge amount of data – logs, performance metrics, you name it. It's like trying to find a needle in a haystack to spot potential problems. Transformers are like super-sleuths for network issues. They can sift through all that data, learn what's "normal," and quickly identify anything that looks out of place – anomalies that might signal trouble. Even better, they can predict failures before they cause major outages.

- Example: A transformer-based system analyzing call logs and KPI trends can predict a core network congestion event before it impacts users.

- Intelligent Traffic Prediction & Load Balancing

- Core networks must handle unpredictable traffic patterns. Transformers can analyze historical and real-time data to predict network congestion and distribute traffic efficiently. Network traffic can be a wild beast – sometimes it's calm, and other times it surges unexpectedly. Core networks need to be able to handle these unpredictable patterns. Transformers are like traffic forecasters for the network. They analyze past trends and combine that with what's happening right now to predict where congestion might occur. This allows them to distribute traffic more efficiently, like rerouting cars around a traffic jam. In a 5G core network, this AI-driven load balancing can shift traffic between different "slices" of the network, ensuring that everything runs smoothly and that latency stays low, improving reliability for all users.

- Example: AI-driven load balancing in 5G core can shift traffic between network slices to maintain low latency and improve reliability.

- Security & Threat Detection

- Transformers improve intrusion detection by identifying unusual traffic patterns, signaling anomalies, and security threats in real time. Protecting a core network from cyberattacks is a constant battle. Transformers are like highly sensitive security guards. They're excellent at spotting unusual activity – anything from suspicious traffic patterns to strange signaling anomalies – that could indicate a security threat. They can do this in real-time, giving operators a crucial edge in defending against attacks. For example, a transformer-based system could analyze control plane messages in a 5G core network to quickly detect DDoS attacks or signaling storms, allowing security teams to respond immediately and prevent widespread disruption.

- Example: Detecting DDoS attacks or signaling storms in the 5G core network by analyzing control plane messages with transformers.

- Automated Network Configuration & Optimization

- Transformers assist in self-optimizing networks (SON) by analyzing performance trends and suggesting parameter adjustments. Managing a complex network involves a lot of fine-tuning, adjusting countless parameters to keep things running smoothly. Traditionally, this has been a manual and time-consuming process. Transformers are changing that. They're helping to create self-optimizing networks (SON) by analyzing performance trends and suggesting the best parameter adjustments. Instead of network engineers spending hours tweaking settings, a transformer model can recommend optimal values for things like how often a user's device should be "paged," how to prioritize different types of traffic (QoS), or even how to configure network slices. This automation frees up valuable time and allows the network to adapt and optimize itself much more efficiently.

- Example: Instead of manual parameter tuning, a transformer model can suggest optimal settings for UE paging timers, QoS parameters, or network slicing policies.

- Natural Language Processing (NLP) for OAM

- Telecom operators use vast amounts of technical documentation and trouble tickets. Transformers help process, summarize, and extract actionable insights. Telecom operators are drowning in technical documentation, trouble tickets, and log files. It's a huge challenge to sift through all this information and find the crucial details needed to keep the network running smoothly. Transformers, with their natural language processing (NLP) skills, can come to the rescue. They can process and summarize this information, extracting the key insights that humans might miss. Imagine AI-powered assistants using transformer models to interpret complex log files, suggest troubleshooting steps, and even automate the resolution of common issues. This not only saves time and effort but also helps to identify and fix problems much faster. It's like having a team of expert analysts constantly monitoring and interpreting all the network data.

- Example: AI-powered assistants using transformer models can interpret log files, suggest troubleshooting steps, and automate issue resolution.

While transformers have revolutionized AI, including applications in telecom, they are not without challenges. Addressing these challenges is an active area of research, and ongoing work is focused on making Transformers more efficient, interpretable, robust, and ethical.

- High Computational and Energy Costs

- Expensive to Train & Deploy – Transformers, especially large models like GPT-4, require massive amounts of computational power and energy, making them costly to train and deploy. Training and deploying really powerful Transformers is like running a small city – it takes a lot of energy and resources. These models, especially the cutting-edge ones like GPT-4, are incredibly hungry for computing power, meaning you need a whole lot of expensive hardware and a hefty electricity bill. We're talking potentially millions of dollars just to train one of these state-of-the-art models. It's not something just anyone can do, which creates a barrier to entry and concentrates the power in the hands of those with deep pockets.

- Example: Training a state-of-the-art transformer can cost millions of dollars in cloud resources.

- Inefficient for Real-Time Processing – In latency-sensitive environments like 5G RAN scheduling, transformers may be too slow compared to traditional ML models. Imagine trying to make split-second decisions in a fast-paced environment. That's the challenge for real-time processing in areas like 5G RAN scheduling. While Transformers are incredibly powerful, they can sometimes be a bit slow compared to simpler, more traditional machine learning models. When you need to decide how to allocate network resources within a single scheduling slot (1 ms at 15 kHz subcarrier spacing in 5G NR, and shorter at wider subcarrier spacings), waiting for a large Transformer model to crunch the numbers might simply take too long. Think of it like trying to use a supercomputer to decide which lane to take in traffic – by the time the computer figures it out, you've already missed your turn. In these kinds of time-sensitive situations, simpler, faster models are often still the better choice.

- Example: Real-time packet scheduling in a base station cannot afford high inference times from a transformer model.

- Poor Interpretability & Debugging Complexity

- “Black Box” Nature – Unlike rule-based systems, transformers lack explainability, making it hard to understand why a model made a certain decision. In other words, you put data in, and you get a result out, but it can be really hard to understand why the model made that specific decision. This is very different from older, rule-based systems where you can clearly see the logic behind each step. When a Transformer makes a mistake, like misclassifying an anomaly in network logs, engineers can have a tough time figuring out what went wrong. It's like trying to fix a complex machine without knowing how all the parts work together – you can see the problem, but you're not sure what's causing it. This lack of explainability can make it difficult to debug issues and improve the model's performance.

- Example: If a transformer misclassifies an anomaly in network logs, engineers may struggle to debug why it failed.

- Difficult to Tune for Telecom-Specific Problems – Unlike NLP, telecom tasks involve highly structured protocol data, which transformers are not inherently designed for. Transformers have proven incredibly effective in areas like natural language processing, but applying them to telecom-specific problems can be a bit more challenging. Telecom data, especially things like protocol information, is highly structured and follows strict rules and standards (such as the 3GPP specifications). Transformers, in their basic form, aren't necessarily designed to handle this kind of highly structured data as efficiently as they handle free-flowing text. So, if you're trying to use a Transformer to, say, optimize how devices hand over between cells (RRC handover parameters), you'll likely need to do a lot of customization and adaptation to make sure the model aligns with those specific telecom standards. It's not a plug-and-play situation; it requires significant engineering effort to bridge the gap between the general capabilities of a Transformer and the very particular requirements of the telecom world.

- Example: Optimizing RRC handover parameters using transformers may require heavy customization to align with 3GPP standards.

- Scalability Challenges in Telecom

- Limited Small-Scale Effectiveness – Many telecom applications require low-complexity AI models due to hardware constraints in base stations and IoT devices. While Transformers are powerful, their size and complexity can be a real problem when you're trying to deploy AI in the real world of telecom. Many telecom applications, especially those running on base stations or tiny IoT devices, have strict limitations on how much memory and processing power they can use. These devices often need small, lightweight AI models that can run efficiently on limited hardware. Trying to squeeze a large Transformer model into a small cell for something like beam prediction might simply be impractical. It's like trying to fit a giant truck into a tiny parking spot – it's just not going to work. So, while Transformers are great for some tasks, they're not always the best fit for situations where resources are constrained.

- Example: Deploying a transformer for beam prediction in a small cell may be impractical due to memory and processing limitations.

- Training Data Limitations in Telco – Unlike NLP models trained on vast web text, telecom data is private, fragmented, and harder to label, limiting effective model training. One of the reasons Transformers have been so successful in areas like natural language processing is the sheer amount of data they can be trained on. NLP models often learn from massive datasets scraped from the web. In the telecom world, things are very different. Telecom data, like 5G network logs, is often highly sensitive and private, belonging to specific operators. This makes it much harder to create the kind of massive, public datasets that Transformers thrive on. The data is also often fragmented, spread across different systems, and can be difficult to label consistently. This data scarcity and the challenges of labeling it make it much harder to train effective Transformer models for telecom applications. It's like trying to build a comprehensive map of a city when you only have access to a few scattered street maps.

- Example: 5G network logs contain sensitive operator data, making it hard to create large-scale public datasets for transformer training.

- Overfitting & Generalization Issues

- Transformers May Memorize Rather Than Generalize – When trained on limited datasets, transformers may overfit and fail to generalize well in dynamic network conditions. Transformers, like any machine learning model, can sometimes struggle with the difference between memorizing and truly understanding. When trained on a limited dataset, they might overfit, essentially memorizing the specific examples they've seen rather than learning the underlying patterns. This means they might perform brilliantly on the data they were trained on but fail miserably when faced with new, unseen situations. In the context of telecom, this can be a real problem. For example, a Transformer model trained on one network operator's data might perform really well in that specific network. But if you try to deploy that same model in another region with different traffic patterns, network configurations, or user behavior, it might fall apart because it hasn't truly learned to generalize – it's just memorized the quirks of the first network. It's like learning a specific route to a store by heart but then getting lost when you try to find a different store in the same city.

- Example: A model trained on one network operator’s dataset may perform poorly when deployed in another region with different traffic patterns.

- Struggle with Noisy and Imbalanced Data – Telecom data often contains imbalanced KPIs, sparse failures, and high noise, which transformers may misinterpret. Real-world telecom data is rarely clean and perfect. It's often messy, with imbalanced key performance indicators (KPIs), infrequent (sparse) failures, and a lot of noise. This can be a real challenge for Transformer models. They might misinterpret the noise as real signals or struggle to learn from the imbalanced data, where some events are much more common than others. For example, if you're training an anomaly detection model on simulated failures, it might become really good at detecting those specific simulated scenarios but completely miss real-world issues that occur in unpredictable ways. It's like training a security system to recognize only one type of intruder – it might be completely blind to other, more sophisticated threats. This means that models trained on imperfect telecom data need to be carefully evaluated and robustly designed to handle the inherent messiness of real-world networks.

- Example: Anomaly detection models trained on simulated failures may miss real-world issues that occur in unpredictable ways.
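The sketch below makes the imbalance problem concrete in plain Python. The KPI labels are hypothetical (990 normal windows and 10 failures), and `summarize` and `balanced_class_weights` are illustrative helpers, not part of any telecom toolkit. A "model" that never flags a failure still scores 99% accuracy while catching none of the failures. That is why recall on the rare failure class is a more honest metric here. The weights use the common inverse-frequency formula (the same one scikit-learn applies for `class_weight="balanced"`), which is one possible way to make rare failures count more during training.

```python
from collections import Counter


def summarize(y_true, y_pred, positive=1):
    """Return (accuracy, precision, recall) for the positive (failure) class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    accuracy = sum(1 for t, p in pairs if t == p) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return accuracy, precision, recall


def balanced_class_weights(labels):
    """Inverse-frequency weights: n_samples / (n_classes * count_of_class)."""
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return {label: n / (k * count) for label, count in counts.items()}


# Hypothetical KPI windows: 0 = normal, 1 = failure (a 1% failure rate).
y_true = [0] * 990 + [1] * 10
always_normal = [0] * len(y_true)  # degenerate model that never raises an alarm

print(summarize(y_true, always_normal))  # (0.99, 0.0, 0.0)
print(balanced_class_weights(y_true))    # failures weighted ~100x more than normal windows
```

For an anomaly detector, recall and precision on the failure class (or a full precision-recall curve) say far more about real-world usefulness than raw accuracy does.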

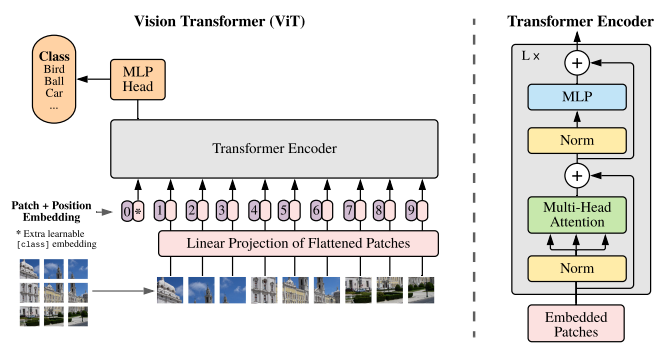

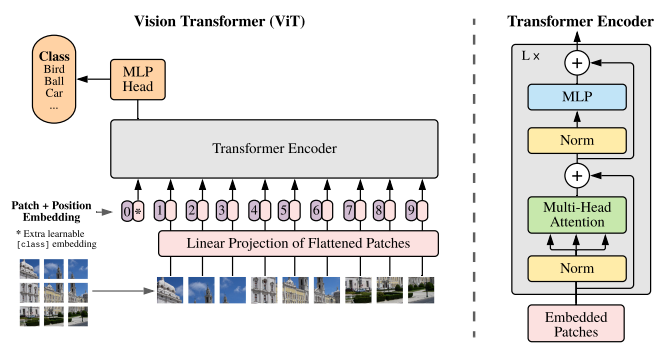

The Vision Transformer (ViT) is an approach to computer vision that applies the transformer model, originally created for processing language, to image recognition. Unlike traditional convolutional neural networks (CNNs), which analyze images by focusing on small local regions, ViT divides an image into fixed-size patches and treats them like words in a sentence. Self-attention on its own does not take the position of its inputs into account, so ViT adds positional embeddings to keep track of where each patch belongs in the image. In language processing, transformers use self-attention to understand relationships between words over long distances. In ViT, the same mechanism connects different parts of an image, but without the built-in local structure that CNNs have. Instead of detecting small local features first and building up to the whole image, ViT relies entirely on self-attention and relates every patch to every other patch from the first layer onward. When trained on very large datasets, ViT can perform just as well as, or even better than, CNNs in recognizing images.
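The "patches as words" idea can be sketched in a few lines of plain Python. `patchify` is a hypothetical helper written for illustration. It assumes the image is stored as nested lists of shape height x width x channels and that both sides are divisible by the patch size. With the 224 x 224 RGB input and 16 x 16 patches of the ViT-B/16 configuration, the image becomes a sequence of 14 x 14 = 196 "words", each a vector of 16 x 16 x 3 = 768 pixel values.

```python
def patchify(image, patch_size):
    """Hypothetical helper: split an H x W x C image (nested lists) into flattened patches.

    Patches are returned in raster order (left to right, then top to bottom),
    each flattened to a vector of patch_size * patch_size * C values.
    """
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image height and width must be divisible by patch_size")
    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            vector = []
            for row in range(top, top + patch_size):
                for col in range(left, left + patch_size):
                    vector.extend(image[row][col])  # append all channel values of this pixel
            patches.append(vector)
    return patches


# A blank 224 x 224 RGB image split into 16 x 16 patches.
image = [[[0.0, 0.0, 0.0] for _ in range(224)] for _ in range(224)]
patches = patchify(image, 16)
print(len(patches), len(patches[0]))  # 196 768
```

Because the sequence is read in raster order, patch i comes from row i // 14 and column i % 14 of the 14 x 14 patch grid. That spatial layout is the information the positional embeddings carry into the model.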

Architecture Overview

The Vision Transformer (ViT) architecture shown in the image applies the transformer model, originally developed for natural language processing (NLP), to image recognition. It differs from traditional convolutional neural networks (CNNs) by discarding the concept of convolutional layers and instead treating an image as a sequence of smaller patches, similar to words in a sentence.

Image Source: An Image Is Worth 16x16 Words: Transformers for Image Recognition at Scale

The following are some highlights of the architecture shown above:

- Patch Embedding and Positional Encoding

- The image is divided into fixed-size square patches, which are then flattened into vectors.

- Each flattened patch is linearly projected to a fixed embedding dimension (D), making the patches analogous to word embeddings in NLP transformers.

- A learnable positional embedding is added to each patch embedding. Self-attention by itself treats its inputs as an unordered set, so without this information the model could not tell where in the image each patch came from.

- Transformer Encoder (Similar to NLP Transformers)

- Like in NLP transformers (e.g., BERT or GPT), ViT uses multi-head self-attention (MSA) to process all patches at once, capturing long-range dependencies across the entire image.

- Unlike CNNs, which use local receptive fields and shared filters to gradually build up spatial hierarchies, ViT uses self-attention to directly model relationships between distant patches.

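- As in NLP transformers, each encoder block combines layer normalization, multi-head self-attention, and a feedforward network (an MLP with a GELU nonlinearity). In ViT, layer normalization is applied before each of these sub-blocks and a residual connection after each one.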

- Class Token and MLP Head (Inspired by NLP’s CLS Token)

- A special learnable class token is prepended to the sequence, just like the [CLS] token in BERT, and its output serves as the final representation for classification.

- The output of this token is fed into a Multi-Layer Perceptron (MLP) head to produce the final class prediction.
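The three stages above can be tied together in a toy forward pass. This is a minimal, untrained sketch in plain Python, not the reference ViT implementation. The weights are random, there is only a single encoder block, and biases as well as LayerNorm's learnable scale and shift are omitted. All sizes (`d_model=16`, four heads, four patches of 48 values, ten classes) and helper names such as `vit_forward` and `init_params` are hypothetical choices, kept small enough to run instantly. The structure does follow the description: a linear patch projection, a prepended class token, added positional embeddings, pre-norm multi-head self-attention and MLP sub-blocks with residual connections, and a single linear classification head on the class token's final output.

```python
import math
import random

# Toy sketch (assumptions): random weights, one encoder block, no biases,
# LayerNorm without learnable parameters. Not the reference ViT code.


def matmul(a, b):
    """Multiply an (n x k) matrix by a (k x m) matrix, both stored as nested lists."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def add(a, b):
    """Element-wise sum of two equally shaped matrices (residuals, positional embeddings)."""
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def layer_norm(x, eps=1e-6):
    """Normalize each token vector to zero mean and unit variance."""
    out = []
    for row in x:
        mean = sum(row) / len(row)
        var = sum((v - mean) ** 2 for v in row) / len(row)
        out.append([(v - mean) / math.sqrt(var + eps) for v in row])
    return out


def softmax(row):
    """Numerically stable softmax (subtracts the maximum before exponentiating)."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def gelu(v):
    """tanh approximation of the GELU nonlinearity."""
    return 0.5 * v * (1.0 + math.tanh(math.sqrt(2.0 / math.pi) * (v + 0.044715 * v ** 3)))


def multi_head_self_attention(x, p, num_heads):
    """Scaled dot-product attention in which every token attends to every token."""
    q, k, v = matmul(x, p["wq"]), matmul(x, p["wk"]), matmul(x, p["wv"])
    d_head = len(x[0]) // num_heads
    concat = [[] for _ in x]
    for h in range(num_heads):
        cols = slice(h * d_head, (h + 1) * d_head)
        qh, kh, vh = [r[cols] for r in q], [r[cols] for r in k], [r[cols] for r in v]
        scores = [[sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d_head) for kj in kh] for qi in qh]
        head_out = matmul([softmax(s) for s in scores], vh)
        for i, row in enumerate(head_out):
            concat[i].extend(row)  # concatenate the heads along the feature axis
    return matmul(concat, p["wo"])


def init_params(patch_dim, d_model, mlp_dim, num_patches, num_classes, seed=0):
    """Hypothetical random (untrained) weights; a real model learns all of these."""
    rng = random.Random(seed)

    def mat(rows, cols):
        return [[rng.gauss(0.0, 0.02) for _ in range(cols)] for _ in range(rows)]

    return {
        "w_embed": mat(patch_dim, d_model),
        "cls": mat(1, d_model),                # class token
        "pos": mat(num_patches + 1, d_model),  # one position per patch plus the class token
        "wq": mat(d_model, d_model), "wk": mat(d_model, d_model),
        "wv": mat(d_model, d_model), "wo": mat(d_model, d_model),
        "w1": mat(d_model, mlp_dim), "w2": mat(mlp_dim, d_model),
        "w_head": mat(d_model, num_classes),
    }


def vit_forward(patches, p, num_heads):
    """Flattened patches in, class logits out (a single pre-norm encoder block)."""
    tokens = p["cls"] + matmul(patches, p["w_embed"])  # prepend class token to patch embeddings
    x = add(tokens, p["pos"])                          # add positional embeddings
    x = add(x, multi_head_self_attention(layer_norm(x), p, num_heads))
    hidden = [[gelu(v) for v in row] for row in matmul(layer_norm(x), p["w1"])]
    x = add(x, matmul(hidden, p["w2"]))
    cls_out = layer_norm(x)[:1]                        # final LayerNorm, keep only the class token
    return matmul(cls_out, p["w_head"])[0]


rng = random.Random(1)
patches = [[rng.random() for _ in range(48)] for _ in range(4)]  # e.g. four 4x4 RGB patches
params = init_params(patch_dim=48, d_model=16, mlp_dim=32, num_patches=4, num_classes=10)
logits = vit_forward(patches, params, num_heads=4)
print(len(logits))  # 10
```

Only the shapes are meaningful here because the weights are untrained. For comparison, ViT-Base stacks 12 such encoder blocks with D = 768 and 12 attention heads.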

References:

- Generic / Language Model

- Vision Transformer

YouTube:

- Generic / Language Model

- Vision Transformer
