
What is Cognition / the Cognitive Process?

Cognitive processing refers to the mental activities through which we acquire, interpret, understand, and use information. It encompasses a broad range of complex functions that our brain uses to navigate and interact with the world. These functions are interrelated, often working together to facilitate our thought processes and behaviors.

In plain language, cognitive processing is all about how our brain deals with information. It's how we take in, make sense of, and use what we learn. It involves many different tasks our brain does to help us understand and interact with the world around us. These tasks are often connected and work together to support how we think and behave.

The cognitive process is a complex yet fascinating sequence of steps that allows us to perceive, understand, and interact with the world around us. It begins with the raw input of our senses, which is then filtered and transformed into meaningful information. This information is actively held in our minds for immediate use or stored away for later retrieval. Through further mental manipulation, we can integrate new information with existing knowledge, leading to learning, decision-making, and even the creation of new ideas.

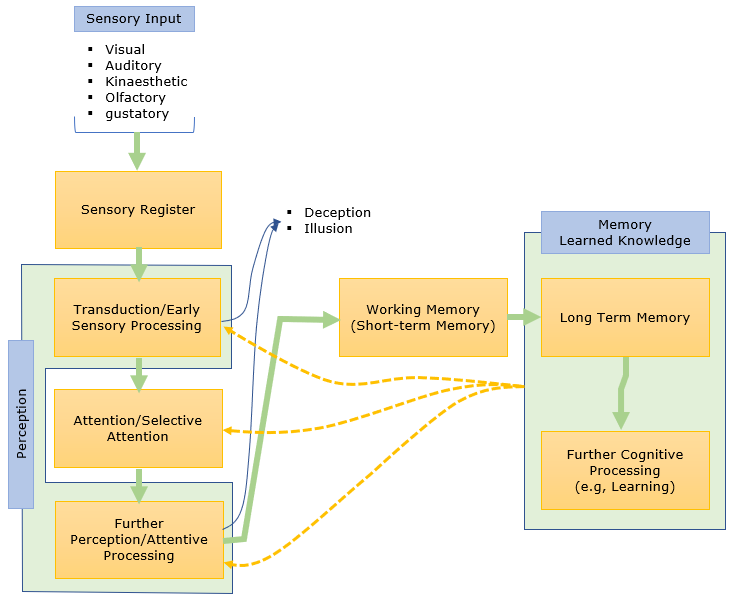

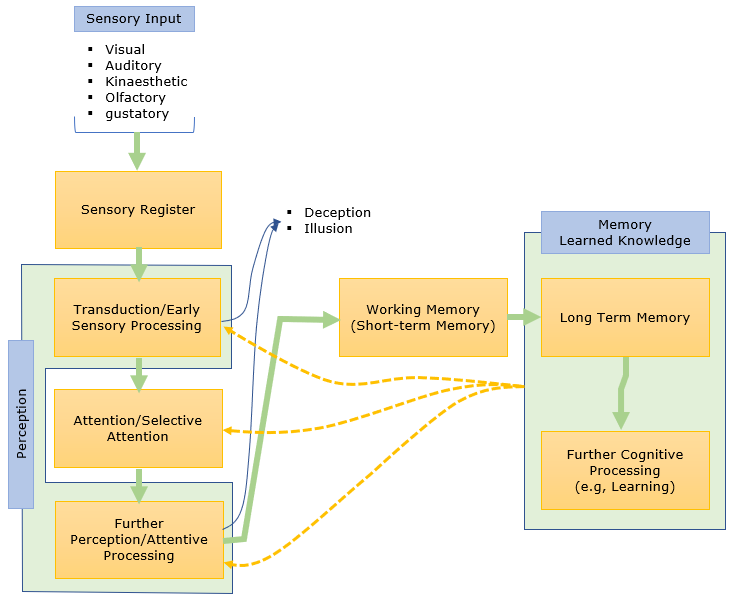

The overall cognitive process can be illustrated as shown above. Different people may draw it differently, although most versions share common elements. This is just my personal version of the cognitive process.

The following is a brief description of each component (processing step) in the illustration.

- Input/Sensory Register: Sensory information from the environment enters the system. This is the first step where our senses (like sight, hearing, touch, smell, and taste) pick up information from the world around us.

- Transduction/Early Sensory Processing: Sensory receptors convert the sensory stimuli into neural signals. Early sensory processing in the brain extracts basic features from these signals. Some sensory input may be filtered out at this stage. In other words, the information our sense organs pick up is turned into signals that our brain can understand. This process is called transduction. Our brain starts to sort out and recognize the basic features of these signals. Some less important information might be ignored at this stage.

- Early Perception/Pre-attentive Processing: Some degree of automatic perceptual processing occurs, further analyzing and organizing the sensory input. The brain automatically starts to make sense of and organize the sensory information. This happens without us needing to pay direct attention to it.

- Attention/Selective Attention: Attention filters the sensory input, directing resources toward the most relevant or salient information. Our attention acts like a spotlight, focusing on the most important or interesting parts of the sensory information. This helps us to pay attention to what is most important and ignore the rest.

- Further Perception/Attentive Processing: The selected sensory information undergoes further, more detailed perceptual processing. This may include perceptual filling-in to create a coherent perceptual experience. In other words, the information we pay attention to is processed even more by our brain. Sometimes, our brain might fill in gaps in the information to help us make sense of what we're sensing. This leads to a coherent understanding of the sensory information.

- Working Memory (Short-term Memory): The processed information is held in working memory, where it's actively manipulated and integrated with other information. This is where the brain keeps the processed information ready for us to use right away. Here, we can think about it, link it with other information, and use it to make decisions.

- Long-Term Memory: Information from working memory can be stored here, and information in long-term memory can be brought back into working memory. Some information from our working memory is stored in our long-term memory for us to remember for a long time. We can also bring back information from our long-term memory into our working memory when we need to use it again.

NOTE: This note focuses mostly on the early part of processing, before memory. Memory and further processing (like learning) will be discussed in a separate note: Memory/Learning.

The following table summarizes the cognitive process.

| Stage | Description |
|---|---|
| Input/Sensory Register | Sensory information from the environment enters the system. Our senses pick up information from the world around us. |
| Transduction/Early Sensory Processing | Sensory receptors convert stimuli into neural signals. Early sensory processing in the brain extracts basic features. Some sensory input may be filtered out. |
| Early Perception/Pre-attentive Processing | Automatic perceptual processing occurs, further analyzing and organizing the sensory input. This happens without us needing to pay direct attention to it. |
| Attention/Selective Attention | Attention filters the sensory input, directing resources toward the most relevant or salient information. Our attention acts like a spotlight, focusing on the important parts of the sensory information. |
| Further Perception/Attentive Processing | The selected sensory information undergoes further, more detailed perceptual processing. This includes perceptual filling-in to create a coherent perceptual experience. |
| Working Memory (Short-term Memory) | The processed information is held in working memory, where it's actively manipulated and integrated with other information. |
| Long-Term Memory | Information from working memory can be stored here, and information in long-term memory can be brought back into working memory. |

Sensory Input (Sensors):

- In humans: We receive information from our five senses (visual, auditory, tactile, olfactory, gustatory).

- In AI: This is analogous to input devices like cameras (for vision), microphones (for audio), and other sensors collecting data from the environment.

Sensory Register (Buffer):

- In humans: This is a temporary storage area for raw sensory data, holding it for a very brief period before it's processed further.

- In AI: Similar to a buffer in a computer, it temporarily stores the incoming raw data from sensors before it's analyzed.
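To make the buffer analogy a bit more concrete, here is a minimal Python sketch of a fixed-capacity buffer that keeps only the most recent raw samples and silently drops older ones. The class name `SensoryRegister` and the capacity of 3 are hypothetical choices for illustration; a real sensor pipeline (let alone the human sensory register) is far more involved.

```python
from collections import deque


class SensoryRegister:
    """Fixed-size buffer that keeps only the most recent raw samples.

    When the buffer is full, appending a new sample silently drops the
    oldest one, loosely mimicking how raw sensory traces fade quickly.
    """

    def __init__(self, capacity: int = 3) -> None:
        # deque(maxlen=...) evicts from the opposite end automatically.
        self._buffer = deque(maxlen=capacity)

    def register(self, sample) -> None:
        """Store one raw sample (e.g. a camera frame) without analyzing it."""
        self._buffer.append(sample)

    def snapshot(self) -> list:
        """Return the buffered raw samples, oldest first, for the next stage."""
        return list(self._buffer)


register = SensoryRegister(capacity=3)
for frame in ["frame-1", "frame-2", "frame-3", "frame-4"]:
    register.register(frame)

print(register.snapshot())  # ['frame-2', 'frame-3', 'frame-4']
```

Nothing is analyzed here: the buffer only holds raw data long enough for a later stage (feature extraction) to pick it up.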

Transduction/Early Sensory Processing (Feature Extraction):

- In humans: This stage involves converting sensory signals into a format the brain can understand and extracting basic features.

- In AI: This is like feature extraction algorithms, which identify and isolate specific patterns or characteristics in the raw data.
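As a rough illustration of feature extraction, the sketch below finds edges in a single row of pixel brightness values by looking for sharp changes between neighboring pixels (a simple finite-difference edge detector). The function name `detect_edges`, the 0.0 to 1.0 brightness scale, and the threshold of 0.5 are assumptions for illustration; practical systems use 2D filters such as Sobel kernels or learned convolutional filters.

```python
def detect_edges(row: list[float], threshold: float = 0.5) -> list[int]:
    """Return the indices where brightness jumps sharply between neighbors.

    `row` holds pixel brightness values from 0.0 (black) to 1.0 (white).
    The difference between adjacent pixels approximates the brightness
    gradient; a large absolute difference marks an edge.
    """
    edges = []
    for i in range(1, len(row)):
        if abs(row[i] - row[i - 1]) >= threshold:
            edges.append(i)
    return edges


# A dark region, then a bright region, then a dark region again.
row = [0.1, 0.1, 0.1, 0.9, 0.9, 0.9, 0.2, 0.2]
print(detect_edges(row))  # [3, 6]
```

The output is no longer raw brightness but a compact "feature": where the boundaries are. This is loosely the kind of information edge-sensitive cells in V1 respond to, which is described in the visual example further down.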

Attention/Selective Attention (Filtering and Prioritization):

- In humans: We focus on specific aspects of the sensory input, filtering out irrelevant information.

- In AI: Similar to attention mechanisms in neural networks, it determines which parts of the extracted features are most relevant and should be processed further.
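The attention mechanism usually meant here is the scaled dot-product attention used in Transformer models. Below is a minimal pure-Python sketch for a single query vector. The toy query, keys, and values are made up for illustration, and real implementations work on whole matrices with libraries such as NumPy or PyTorch.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    """Turn raw scores into positive weights that sum to 1."""
    top = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(query: list[float], keys: list[list[float]], values: list[list[float]]):
    """Scaled dot-product attention for a single query vector.

    Each key is compared with the query (dot product / sqrt(d)); the
    softmax of those scores decides how much of each value is passed on.
    """
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    # Weighted average of the value vectors, one output dimension at a time.
    output = [
        sum(w * value[j] for w, value in zip(weights, values))
        for j in range(len(values[0]))
    ]
    return weights, output


# Toy example: the query "looks for" the first feature direction.
query = [1.0, 0.0]
keys = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
weights, output = attention(query, keys, values)
print([round(w, 2) for w in weights])  # the first key gets the largest weight
```

Like the human "spotlight", nothing is discarded outright: less relevant inputs simply receive smaller weights.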

Perception/Attentive Processing (Pattern Recognition and Interpretation):

- In humans: We interpret the selected sensory information, recognizing patterns, and assigning meaning to it.

- In AI: This is similar to pattern recognition algorithms, which identify and classify objects or events based on the processed features.
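One of the simplest pattern-recognition algorithms is a nearest-centroid classifier: it stores the average feature vector (a prototype) of each class and labels a new input with the closest prototype. The sketch below uses two made-up features (`flaps_wings` and `metallic_shine`, each scaled 0 to 1) to tell a "bird" from a "plane"; the features and numbers are hypothetical.

```python
import math


def centroid(points: list[list[float]]) -> list[float]:
    """Average feature vector of a list of examples."""
    n = len(points)
    return [sum(p[i] for p in points) / n for i in range(len(points[0]))]


def train(examples: dict[str, list[list[float]]]) -> dict[str, list[float]]:
    """Store one prototype (the centroid) per class label."""
    return {label: centroid(points) for label, points in examples.items()}


def classify(model: dict[str, list[float]], features: list[float]) -> str:
    """Return the label whose prototype is closest (Euclidean distance)."""
    return min(model, key=lambda label: math.dist(model[label], features))


# Hypothetical features: [flaps_wings, metallic_shine], each scaled 0 to 1.
model = train({
    "bird": [[0.9, 0.1], [0.8, 0.0]],
    "plane": [[0.0, 0.9], [0.1, 0.8]],
})
print(classify(model, [0.7, 0.2]))  # bird
```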

Working Memory (Short-term Memory) (Temporary Storage and Manipulation):

- In humans: We hold a limited amount of information in our conscious awareness for immediate use and manipulation.

- In AI: This is analogous to RAM (Random Access Memory) in a computer, which temporarily stores data that is currently being used.

Long-Term Memory (Storage):

- In humans: We store information for longer periods, forming our knowledge base.

- In AI: This is like the hard drive or database of a computer, where information is stored for later retrieval.
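The relationship between working memory and long-term memory (items move into long-term storage and can be brought back later) can be sketched as a small capacity-limited store backed by a larger permanent one. This is only a toy model: the class name `ToyMemory`, the capacity of 2, and the least-recently-used eviction rule are hypothetical choices, not claims about how the brain (or a computer's RAM and disk) actually manages memory.

```python
from collections import OrderedDict


class ToyMemory:
    """Capacity-limited working memory backed by a long-term store."""

    def __init__(self, wm_capacity: int = 2) -> None:
        self.wm_capacity = wm_capacity
        self.working = OrderedDict()  # most recently used item is last
        self.long_term = {}

    def attend(self, key: str, value: str) -> None:
        """Bring an item into working memory, evicting the oldest if full."""
        self.working[key] = value
        self.working.move_to_end(key)
        if len(self.working) > self.wm_capacity:
            self.working.popitem(last=False)  # least recently used item is lost

    def consolidate(self, key: str) -> None:
        """Copy an item from working memory into long-term memory."""
        if key in self.working:
            self.long_term[key] = self.working[key]

    def recall(self, key: str):
        """Return an item, reloading it from long-term memory if needed."""
        if key in self.working:
            self.working.move_to_end(key)
            return self.working[key]
        if key in self.long_term:
            self.attend(key, self.long_term[key])
            return self.long_term[key]
        return None  # never stored, or lost before consolidation


memory = ToyMemory(wm_capacity=2)
memory.attend("bird", "robin")
memory.consolidate("bird")        # the striking sight is stored long-term
memory.attend("sunset", "orange")
memory.attend("phone", "camera")  # working memory is full: "bird" drops out
print(list(memory.working))       # ['sunset', 'phone']
print(memory.recall("bird"))      # robin (reloaded from long-term memory)
print(list(memory.working))       # ['phone', 'bird']
```

Note that "sunset" is now gone for good: it was pushed out of working memory without ever being consolidated.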

Further Cognitive Processing (e.g., Learning) (Analysis and Integration):

- In humans: We integrate new information with existing knowledge, make decisions, and learn.

- In AI: This is similar to machine learning algorithms, which analyze data, identify patterns, and adjust their internal models to improve performance.
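"Adjusting internal models to improve performance" can be illustrated with the simplest case: fitting a straight line by gradient descent, where the two model parameters are repeatedly nudged in the direction that reduces the prediction error. The data below is synthetic (generated from y = 2x + 1), and the learning rate and number of epochs are arbitrary choices for this sketch.

```python
def fit_line(xs: list[float], ys: list[float], lr: float = 0.05, epochs: int = 2000):
    """Fit y = w*x + b by gradient descent on the mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Prediction error for each example under the current model.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Gradients of the mean squared error with respect to w and b.
        grad_w = 2 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2 / n * sum(errors)
        # Nudge each parameter against its gradient to reduce the error.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]  # synthetic data from y = 2x + 1
w, b = fit_line(xs, ys)
print(round(w, 3), round(b, 3))  # 2.0 1.0
```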

Deception/Illusion (Errors and Biases):

- In humans: Our perception can be fooled by illusions or deceptive information.

- In AI: This is analogous to biases or errors in the training data or algorithms, leading to incorrect outputs or decisions.

As an example, let me explain how visual data is processed when you watch a bird flying across a sunset, along with the parts of the brain involved at each step. (NOTE: It would be beneficial to take a look at this note to understand the description here.)

- Input: Light from the environment enters your eyes. This light contains visual information about the bird flying against the backdrop of the sunset. This is the sensory input.

- Sensory Register: The cells in your retina (in your eyes) capture this light and convert it into electrical signals. These signals form a brief, raw image of the scene, which is temporarily held in the sensory register and sent to the brain. At this stage, the image is not yet "seen" or understood; it's simply a pattern of light and dark, color, and motion.

- Transduction/Early Sensory Processing: The electrical signals from the retina are sent to the brain via the optic nerve. In the brain, these signals first reach the lateral geniculate nucleus (LGN) in the thalamus, which is a relay station for visual (and other) information. From the LGN, the signals are sent to the primary visual cortex (V1), located in the occipital lobe at the back of the brain. In V1, specialized cells detect simple features of the image, such as lines and edges, colors, and movement.

- Attention: Among all the visual information processed, your brain then determines where to focus its attention. Perhaps the movement of the bird or the bright colors of the sunset draw your attention. The parietal lobe, specifically the posterior parietal cortex, plays a major role in directing visual attention.

- Attentive Processing/Further Perception: Once your attention is drawn, further perception takes place. Your brain now processes the attended visual information in more detail: the bird is recognized as a bird, and the background is recognized as a sunset. Your brain may also fill in gaps based on past experience and knowledge, such as identifying the type of bird or the kind of sunset. The identified features are then sent to other areas of the visual cortex for further processing. The ventral stream (also known as the "what pathway") processes information about object identity (like recognizing the bird) and is located in the temporal lobe. The dorsal stream (or "where pathway") processes spatial location and motion and extends into the parietal lobe.

- Short-Term Memory/Working Memory: The perception of the bird flying across the sunset is held in your working memory. Here, you might think about the bird, remember the last time you saw such a sunset, or decide to take a photo. The prefrontal cortex plays a critical role in working memory, holding the perceived image of the bird and sunset as you think about it, decide to take a photo, or relate it to other information.

- Long-Term Memory: If this visual experience is significant or emotional to you, it might be transferred to your long-term memory. The next time you see a similar sunset or the same type of bird, this memory might be triggered, enhancing your recognition and understanding of the scene. The hippocampus, located in the medial temporal lobe, is crucial for transferring information from working memory into long-term memory. When you later recall the image of that sunset or bird, various regions of the cortex associated with the stored information will be activated.

The following table summarizes this visual processing path and the neural regions involved.

| Stage | Description | Neural Region on the Path |
|---|---|---|
| Input | Light containing visual information from the environment enters the eyes. | Eye (Retina) |
| Sensory Register | Cells in the retina capture this light and convert it into electrical signals. These signals form a brief, raw image of the scene that is sent to the brain. | Retina |
| Transduction/Early Sensory Processing | The electrical signals from the retina are sent to the brain via the optic nerve. In the brain, these signals first reach the lateral geniculate nucleus (LGN), from where they are sent to the primary visual cortex (V1). Here, basic features are detected. | Optic Nerve, LGN in the Thalamus, V1 in the Occipital Lobe |
| Attention | Among all the visual information processed, the brain determines where to focus its attention. The movement of the bird or the bright colors of the sunset might draw attention. | Parietal Lobe (Posterior Parietal Cortex) |
| Attentive Processing/Further Perception | Once attention is drawn, further perception takes place. The brain processes the attended visual information in more detail. The bird is recognized as a bird, and the background is recognized as a sunset. | Temporal Lobe (Ventral Stream), Parietal Lobe (Dorsal Stream) |
| Short-Term Memory/Working Memory | The perception of the bird flying across the sunset is held in working memory, where it may be actively manipulated or related to other information. | Prefrontal Cortex |
| Long-Term Memory | If this visual experience is significant or emotional, it might be transferred to long-term memory. The next time you see a similar sunset or the same type of bird, this memory might be triggered. | Hippocampus, Various Regions of the Cortex |

The experiment with chimpanzees and humans at Japan's Primate Research Institute (PRI) provides an excellent example of the cognitive processes outlined in the diagram above, specifically sensory input, working memory, and further cognitive processing.

The results of the test showed that chimps, particularly Ayumu, had a significantly higher success rate in remembering and sequencing the numbers compared to humans, including well-practiced human students. This suggests differences in cognitive capabilities, particularly in working memory and processing speed, between chimps and humans, reflecting evolutionary adaptations specific to each species.

Image Source : Sequence Order in the Range 1 to 19 by Chimpanzees on a Touchscreen Task: Processing Two-Digit Arabic Numerals

We can use this experiment to illustrate the overall cognitive process as follows:

- Sensory Input: The chimps and humans are presented with a touch screen displaying numbers from one to nine randomly scattered. This stage corresponds to the 'Sensory Input' phase where visual stimuli are gathered.

- Sensory Register: As participants observe the numbers on the screen, their sensory registers briefly hold the visual information about the location and sequence of numbers.

- Transduction/Early Sensory Processing: The raw visual data of numbers and their spatial arrangement are converted into neural signals that can be processed by the brain.

- Attention/Selective Attention: Participants must focus their attention selectively on remembering the layout of the numbers. This selective attention ensures that only crucial information (the positions of numbers) is forwarded for more complex processing.

- Further Perception/Attentive Processing: The cognitive system continues to process the information with an emphasis on spatial and numerical recognition, which is essential for the task.

- Working Memory (Short-term Memory): This is the central element of the test. Once the participant touches one number, the others disappear, necessitating the use of working memory to recall and sequence the hidden numbers correctly. For chimps like Ayumu, and the human participants, this requires holding and manipulating information in their mind without visual aid.

- Long Term Memory and Further Cognitive Processing: Although not directly mentioned in the experiment, learning from repeated attempts could involve transferring some strategies or number patterns to long-term memory, improving performance over time through further cognitive processing like analysis and strategy refinement.
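The task logic described above can be sketched as follows: scatter the numerals on a grid, then score a trial by checking that the touches follow ascending order, with the first wrong touch ending the trial. The grid size (5 x 5), the function names, and the scoring rule are assumptions made for illustration; they are not taken from the PRI experimental protocol. Masking only matters to the participant, so the scorer simply compares the touches with the hidden layout.

```python
import random


def make_trial(n: int = 9, grid: int = 5, seed=None) -> dict[int, tuple[int, int]]:
    """Scatter numerals 1..n over distinct cells of a grid x grid screen."""
    rng = random.Random(seed)
    cells = rng.sample([(r, c) for r in range(grid) for c in range(grid)], n)
    return {num: cell for num, cell in zip(range(1, n + 1), cells)}


def score_trial(layout: dict[int, tuple[int, int]], touches: list[tuple[int, int]]) -> bool:
    """Return True only if every cell is touched in ascending numeral order.

    After the first touch the remaining numerals are masked, so every
    later touch has to come from working memory of the layout.
    """
    expected = [layout[num] for num in sorted(layout)]
    for step, cell in enumerate(touches):
        if step >= len(expected) or cell != expected[step]:
            return False  # the first wrong touch ends the trial
    return len(touches) == len(expected)


layout = make_trial(n=9, seed=42)
correct_order = [layout[num] for num in sorted(layout)]
print(score_trial(layout, correct_order))        # True
print(score_trial(layout, correct_order[::-1]))  # False
```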

Why do chimps outperform humans in this test?

The reason why chimpanzees, particularly young ones like Ayumu, outperform humans in rapid sequential memory tasks such as the Ayumu Memory Test can be understood through various cognitive and evolutionary perspectives.

The superior performance of chimpanzees in specific memory tests likely reflects a combination of heightened sensory processing, attentional focus, and memory capabilities that are specifically honed for their survival needs. These abilities are supported by their neurological architecture and evolutionary history, which emphasize different skills than those most developed in humans.

- Cognitive Differences in Sensory Processing:
  - Visual Processing: Chimpanzees may process visual information differently than humans. Their ability to quickly memorize and recall visual-spatial information suggests that their visual sensory processing is exceptionally sharp and perhaps more focused on pattern recognition and spatial arrangement, which are crucial for survival in the wild.

- Attentional Focus:
  - Selective Attention: Chimpanzees may have a more finely tuned mechanism for selective attention that allows them to concentrate intensely on visual details for short periods, which is a vital skill needed for detecting food, predators, or other environmental elements in the wild.

- Working Memory Capacity:
  - Eidetic Memory: Young chimpanzees often demonstrate what appears to be eidetic or photographic memory, allowing them to recall images with high precision after brief exposure. This type of memory is much more common in young children than in adults, and tends to decline with age in humans.

- Neurological Structures:
  - Brain Development: There may be differences in the brain's developmental pathways and structures involved in memory and processing speed between chimpanzees and humans. For instance, the prefrontal cortex, critical for working memory, develops differently across species and could confer specific advantages in rapid memory tasks.

- Evolutionary Adaptations:
  - Survival Mechanisms: The evolutionary pressures faced by chimpanzees in their natural habitats may have favored the development of quick visual and spatial memory to avoid predators, find food, and navigate complex environments. In contrast, human evolution has emphasized advanced cognitive skills such as abstract reasoning, language, and complex problem-solving, which may trade off with the type of rapid memory recall seen in chimps.

- Task-Specific Specialization:
  - Test Design: The design of the memory test itself might cater more to the strengths of chimpanzees than to typical human cognitive strengths. Humans are generally better equipped for tasks that require integrating information, long-term strategic thinking, and complex decision-making.

The literal meaning of the term illusion can be defined as follows:

- perception of something objectively existing in such a way as to cause misinterpretation of its actual nature - Merriam-Webster

- a perception that represents what is perceived in a way different from the way it is in reality. - Dictionary.com

- a misrepresentation of a real sensory stimulus, that is, an interpretation that contradicts objective reality as defined by general agreement - Britannica

Illusions can potentially occur at any stage of the cognitive process. They can result from errors or distortions in how we perceive, pay attention to, interpret, remember, and recall information.

At each stage, the brain is working to make sense of the information it receives, and it does so based on the input data as well as what it has learned from past experiences. Illusions essentially highlight the shortcuts and assumptions our brains make in this process. It's important to note, though, that these shortcuts and assumptions are generally very useful, as they allow us to process a large amount of information quickly and efficiently. Illusions are interesting because they are instances where these normally effective processes lead to incorrect or unexpected outcomes. The following is a brief summary of how this happens at each stage of the cognitive process.

- Input: This is the information our senses collect from the world. Sometimes, this input can be misleading to begin with, like a picture that is designed to look like something it's not. This can create an optical illusion.

- Sensory Register: This is the initial storage place for raw sensory information. Illusions can occur if the sensory register misinterprets the raw sensory data, such as mistaking shadows for actual objects.

- Transduction/Early Sensory Processing: This is the stage where our senses convert physical signals (like light or sound waves) into neural signals. Errors in this conversion process can create illusions. For example, a bright light can temporarily overload our eyes, causing us to see spots.

- Attention: Our attention determines which sensory information we focus on. Illusions can occur when we focus on certain aspects of a stimulus and ignore others. For example, in the famous "invisible gorilla" experiment (sometimes called the gorilla illusion), many viewers focusing on a basketball game fail to notice a person in a gorilla suit walking through the scene.

- Attentive Processing/Further Perception: This is the stage where we consciously interpret and understand sensory information. Illusions can occur here if we misinterpret the information. For instance, our brain might interpret lines of equal length as being different lengths due to the context (like in the Müller-Lyer illusion).

- Short-Term Memory/Working Memory: Illusions can occur if we misremember information. For example, when you first glance at a clock, the second hand can seem to stay still for longer than a second before it moves; this is known as the "stopped clock illusion" (chronostasis).

- Long-Term Memory: Our long-term memories can also contribute to illusions. Our past experiences and knowledge can influence how we interpret sensory information. For example, a drawing might look like random lines and shapes until we recognize it as something familiar, like a face.

Does every type of illusion occur at every stage of the cognitive process?

NO. Not every type of illusion occurs at every stage of the cognitive process. Different types of illusions are associated with different stages of the process, depending on the nature of the illusion.

For example, optical illusions are often associated with the early stages of perception, where sensory information is being collected and processed. These can include illusions related to color, size, or perspective, which often occur due to the ways in which our visual system interprets visual cues.

On the other hand, cognitive illusions, such as the illusion of memory or illusions of understanding, often occur at later stages of the cognitive process, such as in short-term or long-term memory, or in the processes of attention and decision-making.

So, while it's possible for illusions to occur at any stage in the cognitive process, not all types of illusions will occur at every stage. The specific stage at which an illusion occurs often depends on the specific characteristics of that illusion.

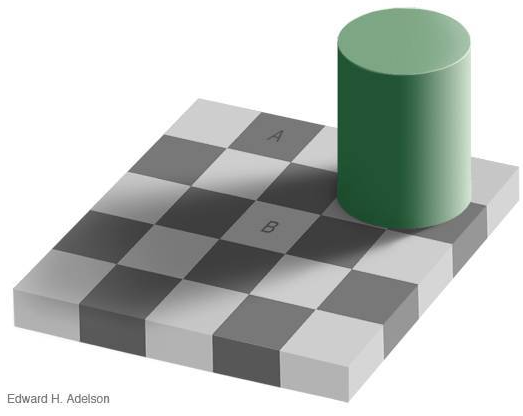

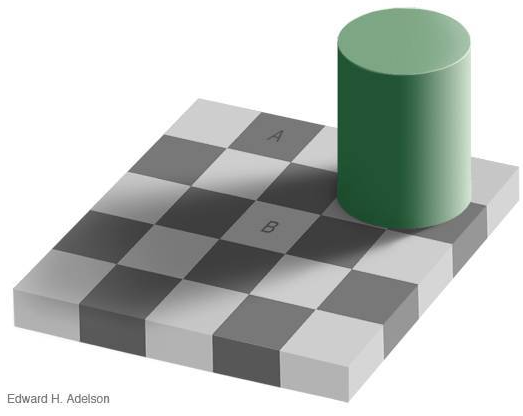

The Checker Shadow is a special picture made by a professor named Edward H. Adelson. It shows a pattern like a chessboard with a shadow on it from a green tube. On the board, there are two spots, marked as A and B, and they look like they have different gray colors. However, the amazing part is that A and B are really the same color! This picture shows us how our brain can change what we see based on what's around and what it thinks should be there.

If the explanation does not make clear sense to you, ask yourself a simple question: do squares A and B have the same color?

If you say 'NO, they are different colors', you are experiencing the illusion. But don't be disappointed; you are not the only one who sees them that way. I think most people (even though I cannot say 100% of people) would experience the same illusion.

Source: Checkershadow Illusion by Edward H. Adelson

If I am experiencing an illusion when I say A and B have different colors, does that mean they are actually the same color?

YES!

How can you prove they are the same color?

A simple way is to ask a computer, which does not experience the illusion as we do. I checked the RGB values of pixels on several squares, as marked below.

One obvious finding is that squares A and B show the same RGB value, yet they look quite different to us. This is the illusion.

RGB pickup for a specific pixel : IMAGECOLORPICKUP.COM
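Why can a light square and a dark square store identical pixel values? A rough way to see it is to treat the light reaching the eye (luminance) as surface reflectance multiplied by illumination. The sketch below renders two squares of a synthetic checkerboard under that assumption. The reflectance values (0.4 and 0.8) and the shadow strength (half of full light) are hypothetical numbers chosen to make the arithmetic easy; they are not measured from Adelson's image.

```python
def render_square(reflectance: float, illumination: float) -> tuple[int, int, int]:
    """Gray RGB value an 8-bit image would store for one square.

    Assumed model: luminance = reflectance x illumination, scaled to 0-255.
    """
    level = round(255 * reflectance * illumination)
    return (level, level, level)


# Hypothetical values for illustration, not taken from the original image.
DARK, LIGHT = 0.4, 0.8         # surface reflectance of dark and light squares
FULL_LIGHT, SHADOW = 1.0, 0.5  # illumination outside and inside the shadow

pixel_a = render_square(DARK, FULL_LIGHT)  # dark square outside the shadow
pixel_b = render_square(LIGHT, SHADOW)     # light square inside the shadow
print(pixel_a, pixel_b, pixel_a == pixel_b)  # (102, 102, 102) (102, 102, 102) True
```

A pixel picker only reads the stored numbers, so it "sees" two identical grays. The difference between the squares exists only in reflectance, which the image does not record directly.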

Now let's think about how and where this illusion happens along the path of the cognitive process described in the previous section.

- Input: The visual input is an image of a checkerboard with a shadow cast over part of it. Two specific squares are highlighted: one outside the shadow (square A) and one inside the shadow (square B).

- Sensory Register: Our eyes register the image, including the light and dark squares, the shadow, and the two highlighted squares.

- Transduction/Early Sensory Processing: Our eyes convert the light coming from the image into electrical signals. The physical light intensity (luminance) coming from both squares A and B is the same.

- Attention: Our attention is drawn to the two highlighted squares and the shadow across the checkerboard.

- Attentive Processing/Further Perception: This is where the illusion really takes hold. Our brain interprets the squares as being different shades because it takes into account the shadow. It assumes that square B, being in a shadow, should be darker, so if it appears the same shade as square A (not in the shadow), it must actually be lighter. This is known as color constancy, and is a powerful perceptual mechanism that usually helps us see colors consistently in different lighting.

- Short-Term Memory/Working Memory: We continue to perceive the squares as different colors as we examine the image and perhaps move our attention around it.

- Long-Term Memory: Our past experiences and knowledge contribute to the illusion. Our brain knows from past experience that objects in a shadow generally appear darker than they actually are. So, when it sees square B in the shadow, it compensates for the expected shadow effect by perceiving it as lighter than it appears in the image.
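The compensation step described above can be sketched with the classic "discounting the illuminant" idea behind lightness and color constancy: the perceived lightness of a surface is estimated by dividing the luminance reaching the eye by the illumination the brain believes is falling on it. This is a deliberately simplified model, and the luminance value of 0.4 and the illumination estimates are hypothetical.

```python
def perceived_lightness(luminance: float, estimated_illumination: float) -> float:
    """Estimate surface reflectance by dividing out the assumed lighting.

    A simplified model of lightness constancy ("discounting the illuminant").
    """
    return luminance / estimated_illumination


# Squares A and B send the same (hypothetical) luminance to the eye.
luminance = 0.4

# Square A is judged to be in full light; square B is judged to be in a
# shadow that lets through only half of the light (hypothetical estimate).
seen_a = perceived_lightness(luminance, estimated_illumination=1.0)
seen_b = perceived_lightness(luminance, estimated_illumination=0.5)

print(seen_a, seen_b)  # 0.4 0.8
```

The input is identical for both squares; only the assumed lighting differs, and that assumption alone is enough to make B look twice as light as A.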

Why do we have such an illusion? Is it the result of a defective cognitive process, or is it an evolved feature with its own purpose or use?

Illusions as shown in the examples are not considered defects in our cognitive system. Rather, they are often seen as evidence of the sophisticated ways in which our brains process information.

For example, the Checker Shadow illusion reveals how our brains use context to interpret sensory information. In everyday life, we often encounter objects in varying lighting conditions, including in shadows. To make sense of these scenes, our brains have developed mechanisms like color constancy, which allows us to perceive the color of an object as constant, even when lighting conditions change.

This kind of context-based processing allows us to interact more effectively with our environment. It enables us to recognize objects in a variety of lighting conditions, anticipate how objects will appear in different contexts, and make more accurate judgments about the properties of objects.

So while optical illusions can certainly be surprising and sometimes disorienting, they are generally seen as signs of our cognitive system's complexity and adaptability, rather than as defects. They illustrate the interesting and often counterintuitive ways in which our brains work to interpret the world around us.

Hallucinations in AI and illusions in the human brain are both instances where the information perceived does not align with the actual reality. Despite this similarity, they originate from fundamentally different mechanisms and exhibit unique characteristics. While AI hallucinations stem from errors or limitations within the model's training data, algorithms, or decision-making processes, illusions in the human brain are rooted in the misinterpretation of sensory input. This misinterpretation can be attributed to various factors, including the limitations of our sensory systems, cognitive biases, or expectations.

AI hallucinations are often unintentional and arise when the model attempts to fill gaps in information or extrapolate beyond its training data, resulting in fabricated or nonsensical outputs. On the other hand, illusions in the human brain are a natural and common aspect of perception, often harmless and sometimes even entertaining. However, they can also be disruptive or dangerous under certain conditions.

Let's look at these two distinct phenomena in more detail:

Hallucinations in AI:

- Mechanism: AI hallucinations are generated due to errors or limitations in the model's training data, algorithms, or decision-making processes. These errors can lead to the AI generating outputs that are factually incorrect, nonsensical, or inconsistent with the input provided.

- Nature: AI hallucinations are typically unintentional and often result from the model attempting to fill in gaps in information or extrapolate beyond its training data. They can manifest as fabricated text, images, or other forms of output.

- Impact: AI hallucinations can be problematic, especially when the AI is used for tasks that require factual accuracy or reliable decision-making. They can lead to misinformation, misdiagnosis, or other negative consequences.

Illusions in the Human Brain:

- Mechanism: Illusions are perceptual experiences where the brain misinterprets sensory input, leading to a perception that does not match the physical reality of the stimulus. This can be due to various factors, such as the limitations of our sensory systems, cognitive biases, or expectations.

- Nature: Illusions are a natural and common aspect of human perception. They are often harmless and can even be entertaining, as in the case of optical illusions. However, some illusions can be disruptive or even dangerous, such as those experienced under the influence of drugs or certain medical conditions.

- Impact: Illusions can reveal insights into how our brains process information and construct our perception of the world. They can also have practical implications, such as influencing how we design user interfaces or safety systems.

There is considerable potential for cooperation between humans and AI systems to mitigate the effects of hallucinations in AI and illusions in the human brain. This collaborative approach can leverage the strengths of both human cognitive abilities and AI's computational power, enhancing overall performance and reliability in various applications. Here are some ways this cooperation can be structured:

Addressing AI Hallucinations:

- Human-in-the-loop Systems: Integrating human oversight into AI systems can help catch and correct hallucinations. Humans can provide feedback, validate outputs, and refine training data to improve AI accuracy.

- Explainable AI (XAI): Developing AI models that can explain their reasoning process can help humans understand why a hallucination occurred and adjust the system accordingly.

- Collaborative Fact-Checking: AI can flag potentially inaccurate information, and humans can then verify and correct it, creating a feedback loop that improves the AI's performance over time.
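The human-in-the-loop and collaborative fact-checking ideas above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production design: it assumes each AI output comes with a numeric confidence score and that a person can be represented as a review function. The names `Output`, `ReviewLoop`, `reviewer`, and the 0.8 threshold are all hypothetical. In practice, a model's self-reported confidence is not a reliable hallucination detector on its own, so real systems would combine it with other checks.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Output:
    text: str
    confidence: float  # hypothetical model-reported score in [0, 1]


@dataclass
class ReviewLoop:
    threshold: float = 0.8  # hypothetical cutoff: below this, a human must review
    corrections: list = field(default_factory=list)  # (original, corrected) pairs

    def route(self, output: Output, human_review: Callable[[str], str]) -> str:
        """Accept confident outputs as-is; send low-confidence ones to a human."""
        if output.confidence >= self.threshold:
            return output.text
        corrected = human_review(output.text)
        if corrected != output.text:
            # Keep the correction so it could be used to refine future training data
            self.corrections.append((output.text, corrected))
        return corrected


# Stand-in for a human fact-checker (hypothetical)
known_fixes = {"The Eiffel Tower is in Berlin.": "The Eiffel Tower is in Paris."}


def reviewer(text: str) -> str:
    return known_fixes.get(text, text)


loop = ReviewLoop()
print(loop.route(Output("Water boils at 100 degrees Celsius at sea level.", 0.95), reviewer))
print(loop.route(Output("The Eiffel Tower is in Berlin.", 0.40), reviewer))
print(loop.corrections)
```

The confident statement passes straight through, while the low-confidence one is corrected by the reviewer and the (original, corrected) pair is stored. Storing corrections mirrors the feedback loop described above, although this sketch only records them rather than retraining anything.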

Addressing Human Illusions:

- AI-Assisted Perception: AI can augment human perception by providing additional information or highlighting potential biases, helping us to make more informed decisions.

- Virtual and Augmented Reality (VR/AR): These technologies can create controlled environments where illusions can be studied and understood, leading to better strategies for mitigating their negative effects.

- Personalized AI Assistants: AI can learn individual biases and tendencies, providing tailored feedback and nudges to help people overcome their own illusions.

Symbiotic Relationship:

- The key to successful cooperation lies in recognizing the strengths and weaknesses of both humans and AI. Humans excel at critical thinking, context awareness, and ethical judgment, while AI can process vast amounts of data, identify patterns, and automate tasks. By combining these capabilities, we can create systems that are more accurate, reliable, and ultimately more beneficial to society.

Examples of Current Collaboration:

- Medical Diagnosis: AI can analyze medical images and suggest potential diagnoses, but a human doctor ultimately makes the final decision and can identify potential hallucinations in the AI's analysis.

- Autonomous Vehicles: AI handles most driving tasks, but human drivers can take over in complex or uncertain situations, preventing accidents caused by AI errors.

- Content Moderation: AI can flag potentially harmful content, but human moderators review these flags and make final decisions, reducing the risk of AI bias or hallucinations.

By fostering collaboration between humans and AI, we can leverage the strengths of both to create a future where hallucinations and illusions are less likely to lead to harmful consequences.

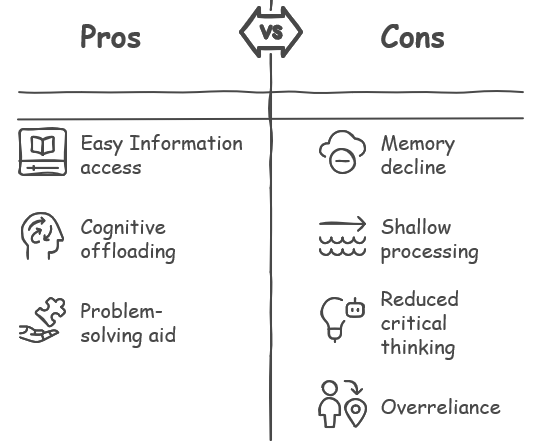

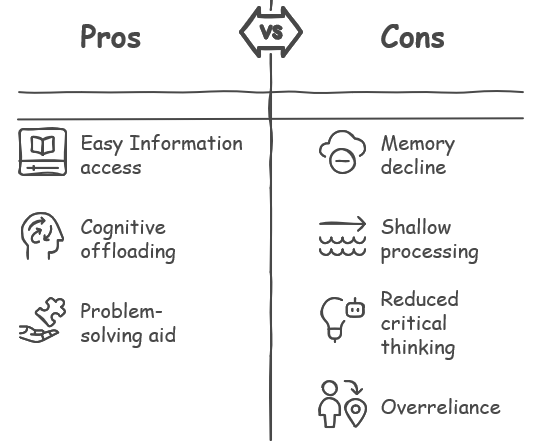

The simple answer: it makes us smarter in some ways and dumber in others.

The increasing reliance on digital technology, including search engines like Google and artificial intelligence like GPT, has profound effects on our cognitive processes, both positive and negative. Digital technology can be very helpful, but we also need to be careful: we should still practice thinking deeply, remembering things, and solving problems on our own.

- Information Access and Knowledge Expansion (Learning More): With the internet and AI, we have almost unlimited access to information. This allows us to learn more than ever before and expand our knowledge base. Digital technology makes it easier to acquire new knowledge and skills.

- Cognitive Offloading (Doing Less Work): Digital tools can reduce our cognitive load by taking care of tasks like remembering appointments, calculating complex equations, or navigating new cities. This can free up cognitive resources for other tasks. In other words, these technologies can do some of the thinking for us: they, rather than we, remember things, do hard math, and help us find our way in new places. Thanks to this help, we can spend more of our mental resources on other things.

- Complex Problem Solving (Solving Hard Problems): AI and digital technology can assist in solving complex problems that might be difficult or impossible for humans to solve on their own. They can process large amounts of data, identify patterns, and provide solutions quickly. In other words, AI and digital technology can help us solve problems that are too hard for us to solve alone. They can look at a lot of information and find answers fast.

- Memory and Retention (Forgetting More): As we offload more tasks to digital tools, we may become less proficient at certain skills. For example, the use of GPS navigation may weaken our natural sense of direction and spatial skills. Similarly, reliance on search engines may impact our ability to remember information. In other words, as we let digital tools do more work for us, we might forget how to do these things ourselves. For example, if we always use GPS to find our way, we might forget how to read a map.

- Shallow Information Processing (Understanding Less): Because of the abundance of information available online, we may skim or browse through information without deeply understanding or retaining it. This is related to what is sometimes called the "Google effect" or "digital amnesia": the tendency to forget information we know we can easily find online. In other words, because there is so much information online, we might not spend enough time to really understand it. We might read only a little and then move on.

- Reduced Critical Thinking (Thinking Less): With AI providing answers and solutions, there may be less incentive for users to think critically or independently about problems. This could lead to less creative problem solving and a lack of deep understanding of complex issues. In other words, with AI giving us answers, we might not think about problems ourselves. This could make it harder for us to come up with new ideas or understand difficult things.

- Overreliance (Relying Too Much): If we become too reliant on these tools and they malfunction or are unavailable, we may feel lost or incapable. This can lead to stress and anxiety. In other words, if we always need these tools and then they stop working or are not available, we might not know what to do. This can make us feel stressed and worried.

Let's go a little further and consider how relying on digital technology can influence each stage of the cognitive process outlined at the beginning of this note.

- Input/Sensory Register: Digital technology can alter our sensory input by exposing us to a wide array of stimuli. This could lead to overstimulation and may challenge our ability to filter and process information effectively. For example, the constant barrage of notifications from smartphones could potentially overwhelm our sensory register. In short, digital technology like smartphones can sometimes give us too much information at once, which can be overwhelming.

- Transduction/Early Sensory Processing: This process converts sensory data into a form that can be understood by our brains. Digital screens, with their bright lights and high resolution, can impact how we process visual and auditory data. For example, prolonged exposure to screens can cause eye strain and potentially disrupt our visual processing. In other words, looking at screens for a long time can make our eyes tired and might make it harder for us to understand what we see.

- Early Perception/Pre-attentive Processing: This automatic process helps us identify what's important in our environment. With digital technology, there is so much information available that it may be difficult to determine what's important or relevant, leading to potential information overload. In other words, because digital technology gives us too much information too quickly, it can be hard to decide what is important.

- Attention/Selective Attention: Digital technology often involves multitasking (e.g., checking email while watching a video), which can affect our ability to focus on one task at a time. This divided attention can lead to lower efficiency and accuracy in our tasks. In other words, if we use digital technology to do many things at once (like checking email while watching a video), it might be harder for us to focus.

- Further Perception/Attentive Processing: With the convenience of digital technology, we might rush through information without thoroughly understanding it. We might become less patient and more inclined to seek quick answers, which may reduce the depth of our understanding. In short, with digital technology, we might rush through this stage and not fully understand what we are learning.

- Working Memory (Short-term Memory): Digital technology can offload some of our working memory tasks (like remembering appointments or phone numbers). This can be helpful, but it might also weaken our working memory skills if we rely on technology too much. In other words, digital technology can help us remember things, but if we use it too much, we might forget how to remember things ourselves.

- Long-Term Memory: The frequent use of search engines and AI could potentially affect our motivation to retain information in long-term memory. If we know we can easily look up information, we might not try to remember it. On the other hand, digital tools can also aid in memory retention through methods like digital flashcards or reminders. In other words, using digital technology like search engines might make us less motivated to remember things, since we can just look them up again. But digital tools can also help us remember things if we use them properly.

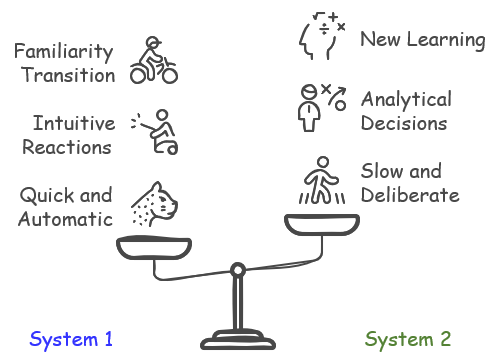

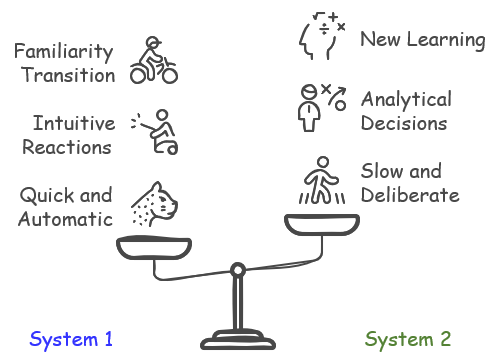

Dual process theory is a psychological framework for explaining how people think. It suggests that our cognitive functioning involves two distinct modes of thought. One mode operates quickly, automatically, and intuitively, without the need for conscious effort. The other mode is slower, more deliberate, and analytical, requiring conscious thought and effort. These two modes often work together, allowing us to navigate both familiar and new situations effectively.

System 1 (Type 1) operates automatically and quickly, with little or no effort and no sense of voluntary control. It's responsible for our intuitive, instinctual, and automatic reactions and judgments. It's the part of our brain that allows us to perform tasks without conscious thought, like driving a car along a familiar route, understanding simple language, or recognizing an object.

System 2 (Type 2) allocates attention to the effortful mental activities that demand it, including complex computations and conscious, reasoned decision-making. This system is slower, more deliberate, and more analytical. It's responsible for anything that requires attention and conscious effort, like learning a new language, solving a complex math problem, or making a decision based on careful consideration of options.

In some cases, a task shifts between the two modes, either from Type 2 (System 2) to Type 1 (System 1) or vice versa. Here are some examples:

Type 2 to Type 1 transition:

- Driving a Car: When you first learn to drive, you must consciously think about every action. You engage System 2 to remember to check your mirrors, signal before you turn, apply the brakes gently, etc. However, as you gain experience, these actions become more automatic and shift to System 1. Experienced drivers often find themselves arriving at destinations without consciously thinking through each step of the drive.

- Playing a Musical Instrument: When you first start learning an instrument, you have to consciously think about how to read the notes, how to position your fingers, etc., engaging System 2. Over time, as you practice, these actions become more automatic, shifting into System 1. A skilled musician can play a piece of music without consciously thinking about every note.
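The Type 2 to Type 1 shift can be pictured, very loosely, as an effortful step-by-step procedure that turns into a quick lookup after enough practice. The toy Python sketch below is only an analogy, not a model of how the brain works: the class `Skill`, the `practice_needed` count of three repetitions, and the fixed driving steps are hypothetical choices made for illustration.

```python
from typing import Tuple


class Skill:
    """Toy analogy only: 'System 2' is an effortful step-by-step procedure,
    'System 1' is a fast lookup that becomes available after enough practice."""

    def __init__(self, practice_needed: int = 3):  # hypothetical repetition count
        self.practice_needed = practice_needed
        self.practice_count = {}  # how often each situation has been worked through
        self.habits = {}          # situations now handled automatically

    def deliberate(self, situation: str) -> str:
        # Stand-in for slow, conscious reasoning (System 2)
        steps = ["check mirrors", "signal", "brake gently"]
        return f"{situation}: " + ", then ".join(steps)

    def respond(self, situation: str) -> Tuple[str, str]:
        # Already automatic: answer immediately without deliberating
        if situation in self.habits:
            return self.habits[situation], "System 1"
        answer = self.deliberate(situation)
        self.practice_count[situation] = self.practice_count.get(situation, 0) + 1
        if self.practice_count[situation] >= self.practice_needed:
            self.habits[situation] = answer  # practiced enough: becomes a habit
        return answer, "System 2"


driver = Skill()
for _ in range(4):
    print(driver.respond("turn left")[1])
```

With the default of three repetitions, the first three calls print "System 2" and the fourth prints "System 1", echoing how an experienced driver stops working through each step consciously.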

Type 1 to Type 2 transition:

- Explaining Language Rules: If an experienced speaker who normally speaks fluently (a System 1 process) is asked to explain the grammar rules and sentence structures they're using (which requires conscious analytical thinking), they need to engage System 2. Similarly, someone who naturally picks up language patterns might need to engage System 2 when trying to understand formal grammar rules in a classroom setting.

I think dual process theory rests more on theoretical discussion than on extensive experimental and neurological research. However, you can find some research results on it.

Image Source: Dual-Process Theories in Social Cognitive Neuroscience

System 1 might be associated with areas like the limbic system, basal ganglia, and cerebellum, which are involved in emotional responses, memory, automatic perceptual processing, and habit formation.

System 2 is often linked with areas of the brain like the prefrontal cortex, which is involved in executive functions like working memory and abstract reasoning.

