
Cluster - pyTorch

In this note, I want to try an approach that is completely different from my other notes. Instead of writing the Python code myself, I used chatGPT to create the code that I wanted.

NOTE : Refer to this note for my personal experience with chatGPT coding and the advantages and limitations of the tool. In general, I have a very positive impression of using chatGPT for coding.

This code was first created by chatGPT on Feb 03 2023 (meaning chatGPT 3.5 was used). For this code, I did not start from a requirement. Instead, I asked chatGPT to write the same function as this using pyTorch.

As you may notice, this code uses some math functions of torch, but does not use any neural net.
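To illustrate what clustering with only torch math functions (and no neural net) can look like, here is a minimal sketch. It is not the chatGPT-generated code from this note. It assumes a plain k-means procedure, and the function name `kmeans_torch`, the synthetic two-blob data, and all parameter values are made up for illustration.

```python
import torch


def kmeans_torch(points, k, n_iters=20, seed=0):
    """Plain k-means using only torch tensor math (no torch.nn modules)."""
    gen = torch.Generator().manual_seed(seed)
    # Pick k distinct data points as the initial centroids.
    idx = torch.randperm(points.shape[0], generator=gen)[:k]
    centroids = points[idx].clone()
    for _ in range(n_iters):
        # Pairwise Euclidean distances, shape (n_points, k).
        dists = torch.cdist(points, centroids)
        labels = dists.argmin(dim=1)
        for j in range(k):
            members = points[labels == j]
            if members.shape[0] > 0:  # keep the old centroid if a cluster is empty
                centroids[j] = members.mean(dim=0)
    # Assign labels once more against the final centroids.
    labels = torch.cdist(points, centroids).argmin(dim=1)
    return labels, centroids


# Synthetic data (an assumption): two well-separated 2-D blobs of 50 points each.
gen = torch.Generator().manual_seed(0)
blob_a = torch.randn(50, 2, generator=gen) * 0.3
blob_b = torch.randn(50, 2, generator=gen) * 0.3 + 5.0
points = torch.cat([blob_a, blob_b])

labels, centroids = kmeans_torch(points, k=2)
print(labels.shape, centroids.shape)
```

Everything here is ordinary tensor arithmetic (`torch.cdist`, `argmin`, `mean`). There are no layers, no parameters, and no optimizer, which is the sense in which such code "does not use any neural net".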

NOTE : To my surprise (in a negative way), chatGPT was a little disappointing when it came to pyTorch coding. It seemed as if chatGPT had become dumb. In most cases, the initial response was code with syntax errors, and when I said 'fix it', it rewrote the entire code in a completely different way instead of fixing the specific part, and the new code produced other syntax errors. At first, I thought chatGPT would write stable, high-quality Python code, because I assumed it had been trained most extensively on Python, given the large amount of Python code shared on GitHub, Stack Overflow, etc.

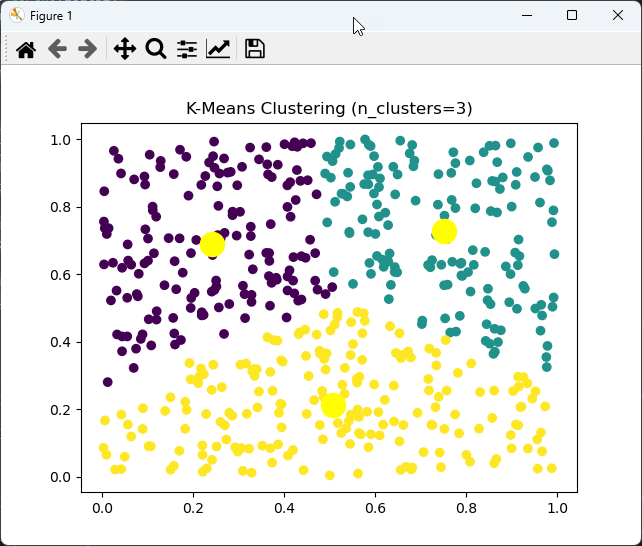

The result from this code is as follows :

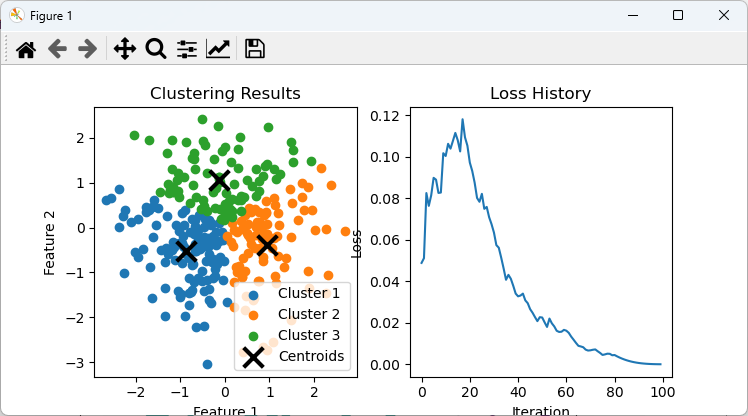

Next, I asked chatGPT to write the same code using a 'feedforward neural net' and to plot the clustered output and the loss values in the same figure.

NOTE : I expected somewhat different code from this. I expected chatGPT to use multiple linear layers, but that is not what I got. Probably the term 'feedforward' in my request was interpreted differently. But the outcome of the execution is exactly what I wanted to get.
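For comparison, a feedforward net built from multiple linear layers might look like the sketch below. This is not the code chatGPT produced. The layer sizes, the synthetic data, and the helper `soft_cluster_loss` (a soft k-means style objective chosen only to give the net something unsupervised to minimize) are all assumptions made for illustration.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Synthetic data (an assumption): two 2-D blobs of 50 points each.
points = torch.cat([torch.randn(50, 2) * 0.3,
                    torch.randn(50, 2) * 0.3 + 5.0])
k = 2  # number of clusters

# Feedforward net: a stack of linear layers with ReLU in between.
# It maps each 2-D point to k cluster scores (logits).
model = nn.Sequential(
    nn.Linear(2, 16),
    nn.ReLU(),
    nn.Linear(16, 16),
    nn.ReLU(),
    nn.Linear(16, k),
)


def soft_cluster_loss(points, logits):
    """Hypothetical loss: soft-assignment-weighted squared distance to centroids."""
    probs = torch.softmax(logits, dim=1)                 # (n, k) soft assignments
    weights = probs.sum(dim=0).unsqueeze(1) + 1e-8       # (k, 1) soft cluster sizes
    centroids = probs.t() @ points / weights             # (k, 2) weighted means
    diffs = points.unsqueeze(1) - centroids.unsqueeze(0) # (n, k, 2)
    sq_dists = (diffs ** 2).sum(dim=2)                   # (n, k)
    return (probs * sq_dists).sum(dim=1).mean()


optimizer = torch.optim.Adam(model.parameters(), lr=0.01)
losses = []
for _ in range(200):
    optimizer.zero_grad()
    loss = soft_cluster_loss(points, model(points))
    loss.backward()
    optimizer.step()
    losses.append(loss.item())  # loss history, e.g. for plotting next to the clusters

# Hard cluster label for each point: the index of the largest score.
labels = model(points).argmax(dim=1)
print(losses[0], losses[-1])
```

The `losses` list and the `labels` tensor are the two things that would go into a combined figure: one panel for the points colored by label and one for the loss curve.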

The result from this code is as follows :
