
Application to Wireless Communication

High-end wireless communication systems such as cellular networks are largely made up of two big sections: the radio access network and the core network. It seems obvious that AI/Machine Learning would be an important part of core network operation. The question is how far it can be applied to the radio access part, especially Physical layer / MAC layer operations. On this page, I am going to chase the ideas and use cases of Machine Learning in the Radio Access Network. For the core network and application layer, you may refer to the many videos that I linked on the WhatTheyDo page. Check the Qualcomm, Ericsson, Verizon, Cisco and Networking applications entries on that page.

NOTE : This note follows the more generic ideas about applying Machine Learning to wireless communication. For cases more specific to 3GPP activities, I keep another note here.

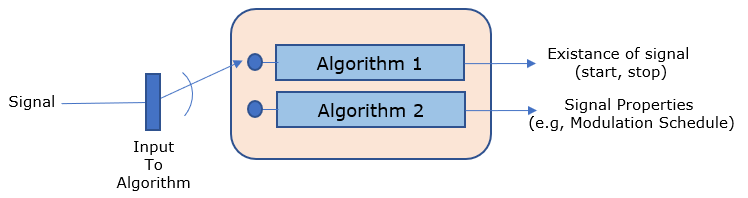

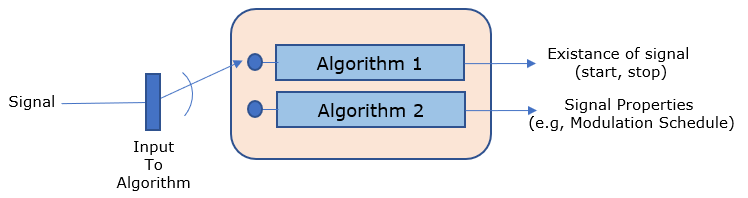

In the fast-changing world of wireless communication, Machine Learning (ML) has become a helpful tool to solve old problems, especially in Cognitive Radio (CR) systems. A key reason to use ML early on was to make signal detection and classification better—important tasks that help CR adjust to its surroundings. Usually, CR uses two main types of algorithms: one type checks if a signal exists, and the other type finds out more details about the signal, like its modulation style. At first, people mostly worked on detecting if a signal was there because it was easier to do. They used two methods for this: the Energy Detection (ED) algorithm, which relies on a threshold, and another method that doesn’t use a threshold. But these methods have problems. The threshold-based one often gives false alarms and struggles with noise, while the non-threshold one needs a lot of computing power and doesn’t work well in real-time. Also, when trying to learn more about the signal, like its modulation, those methods were weak against noise and didn’t perform well in low signal-to-noise ratio (SNR) situations. To fix these issues, ML is now being studied as a better option. Today, this evolution continues as ML is applied to modern systems like 5G and even future 6G radios. In 5G, ML helps improve speed and reliability, while in 6G, it could make networks smarter, more energy-efficient, and able to handle complex tasks like massive device connections and super-fast data rates. With ML, wireless communication is becoming more powerful and ready for the future.

The main algorithms in cognitive radio fall into two types, as shown below. One simply detects the existence of a signal, and the other figures out more detailed properties of the signal.

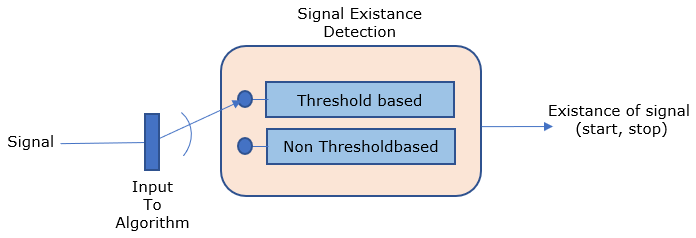

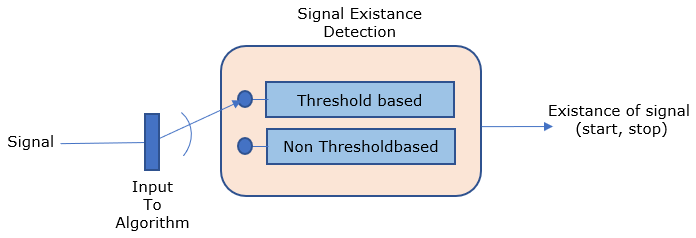

For simplicity of implementation, most Cognitive Radio applications have focused only on detecting the existence of the signal. Roughly two types of algorithms are used for detecting signal presence, as shown below. One is the ED (Energy Detection) algorithm, which is based on a threshold, and the other is a non-threshold based algorithm.

Even though these are the major algorithms used in signal existence (presence) detection, there are some major issues with them.

- Issues with Threshold Based Algorithm

- Frequent False Alarm

- Sensitive to Noise

- Issues with Non-Threshold based Algorithm

- High Computational Complexity

- (As a result) Poor online detection performance
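The threshold-based ED approach can be illustrated with a minimal sketch. This is not taken from any of the referenced papers; the function name `energy_detect`, the `threshold_factor` rule and the synthetic QPSK test signal are all assumptions made for illustration. It also hints at why the method is sensitive to noise: the decision depends entirely on how well `noise_power` is known.

```python
import numpy as np

def energy_detect(samples, noise_power, threshold_factor=1.5):
    """Threshold-based energy detector (illustrative, hypothetical threshold rule)."""
    # Test statistic: average energy of the received complex samples.
    energy = np.mean(np.abs(samples) ** 2)
    # Assumed rule: declare "signal present" above a fixed multiple of the noise power.
    threshold = threshold_factor * noise_power
    return bool(energy > threshold), float(energy)

rng = np.random.default_rng(0)
n = 4096
noise_power = 1.0
# Complex Gaussian noise with total power equal to noise_power.
noise = np.sqrt(noise_power / 2) * (rng.standard_normal(n) + 1j * rng.standard_normal(n))
# Unit-power QPSK symbols as a stand-in for the signal to be detected (0 dB SNR).
symbols = rng.integers(0, 4, n)
signal = np.exp(1j * (np.pi / 4 + np.pi / 2 * symbols))

noise_only_decision, noise_only_energy = energy_detect(noise, noise_power)
signal_decision, signal_energy = energy_detect(signal + noise, noise_power)
print(noise_only_decision, signal_decision)
```

If the assumed `noise_power` is off by even a couple of dB, the same threshold starts producing false alarms or missed detections, which is the weakness listed above.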

In some cases, various methods were used to figure out the detailed properties of the signal (like the modulation scheme), but those methods have the following issues.

- Highly affected by Noise

- Poor Performance in low SNR

To overcome the issues mentioned above, Machine Learning methods are being investigated as alternatives to those conventional methods.

In this section, I am trying to summarize technical papers and videos on ML (Machine Learning) applications to wireless PHY/MAC in simple diagrams, mainly focusing on the Input and Output of the network. For the details, I would recommend reading the original papers and videos that I put in the reference section. Once you have gone through those original documents/videos, you can use the illustrations here as a visual cue to refresh your memory and understanding.

In this section, I will focus more on the lower layers (PHY/MAC) of common wireless systems or cellular communication systems.

NOTE : What I am talking about in this section mostly comes from generic applications of AI in wireless communication, not specific to cellular communication like 5G, 6G etc. For applications specific to cellular communication, refer to the notes below.

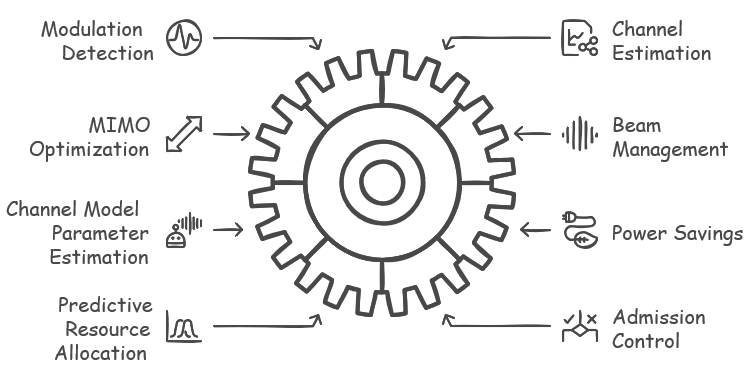

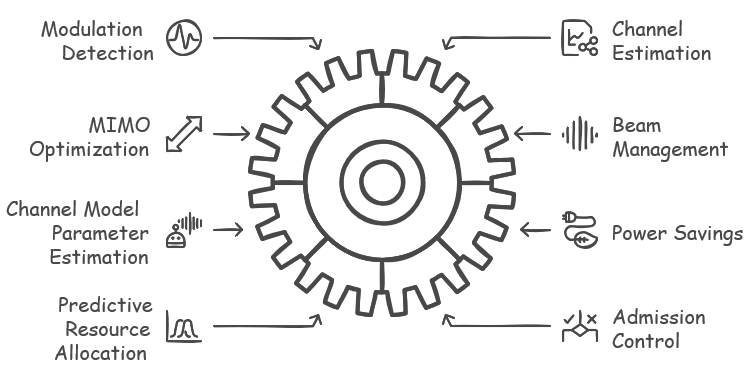

The common use cases that I found (probably biased by my personal interest) are listed below. At the very early stage, the adoption of ML (Machine Learning) in this area was mostly about Modulation Detection, but it does not seem to have drawn strong attention, especially in high-end communication (like mobile/cellular systems), because we already have a simple, deterministic way of figuring out the modulation scheme. Since then various other use cases (like channel estimation, MIMO optimization and Beam Management) have been investigated, and some of them are being adopted in real applications.

- Modulation Detection

- Traditional modulation detection relies on predefined algorithms that decode received signals based on their spectral properties.

- ML models can be trained to classify modulation schemes (e.g., QPSK, 16-QAM, 64-QAM) based on raw received signals, even in noisy environments.

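- While this use case initially seemed promising, it has not gained widespread adoption in mobile networks because the receiver usually already knows the modulation scheme in a simple, deterministic way (in cellular systems, for example, it is signaled to the UE in control information), so blind detection is rarely needed.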

- However, ML-based modulation detection is still relevant in non-cooperative communication scenarios (e.g., military, cognitive radio networks), where unknown signals need classification.

- Channel Estimation / CSI Estimation

- Accurate channel estimation is critical for decoding received signals and adapting transmission parameters in dynamic wireless environments.

- Traditional methods like MMSE (Minimum Mean Square Error) and LS (Least Squares) estimation have limitations, especially in highly mobile environments.

- ML models can be trained to estimate the Channel State Information (CSI) more efficiently by learning patterns from real-time data, improving performance in scenarios with rapid fading and interference.

- Deep learning-based CSI feedback compression is also being explored to reduce the overhead of sending CSI feedback from UE to the base station.
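To make the LS baseline mentioned above concrete, here is a minimal sketch of pilot-based Least Squares channel estimation. The per-subcarrier model `Y = H * X + N`, the pilot pattern and the noise level are assumptions chosen for illustration; an ML estimator would typically take `h_ls` (or the raw received pilots) as its input and try to produce a cleaner estimate.

```python
import numpy as np

rng = np.random.default_rng(1)
num_pilots = 64

# Assumed model: one complex channel coefficient per pilot subcarrier.
h_true = (rng.standard_normal(num_pilots) + 1j * rng.standard_normal(num_pilots)) / np.sqrt(2)
# Known unit-power pilots (QPSK-like phases), hypothetical pattern.
pilots = np.exp(1j * np.pi / 2 * rng.integers(0, 4, num_pilots))
noise_std = 0.05
noise = noise_std * (rng.standard_normal(num_pilots) + 1j * rng.standard_normal(num_pilots)) / np.sqrt(2)
received = h_true * pilots + noise

# LS estimate: divide each received pilot by the known transmitted pilot.
h_ls = received / pilots
mse = float(np.mean(np.abs(h_ls - h_true) ** 2))
print(f"LS estimation MSE: {mse:.5f}")
```

The LS error is essentially the noise itself (about `noise_std ** 2` here), which is why it degrades quickly at low SNR and why learned denoising on top of it is an active research topic.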

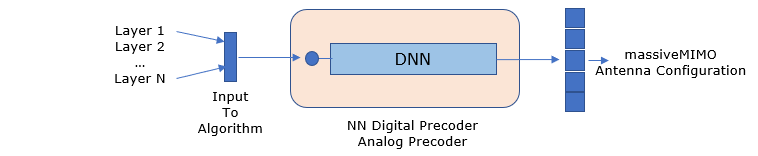

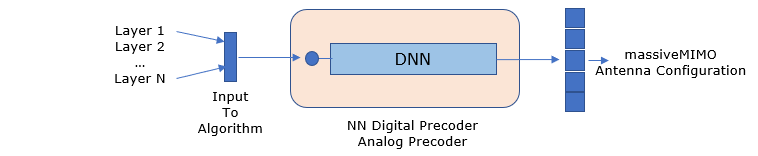

- MIMO Antenna Configuration Optimization

- In Massive MIMO (Multiple-Input Multiple-Output) systems, configuring antenna weights and beamforming vectors dynamically is challenging.

- ML can predict optimal antenna configurations based on real-time channel conditions and traffic demands.

- Techniques like Reinforcement Learning (RL) are used to optimize precoding matrices in Massive MIMO, reducing computational complexity.

- AI-driven adaptive MIMO configuration can improve spectrum efficiency in multi-user MIMO scenarios.

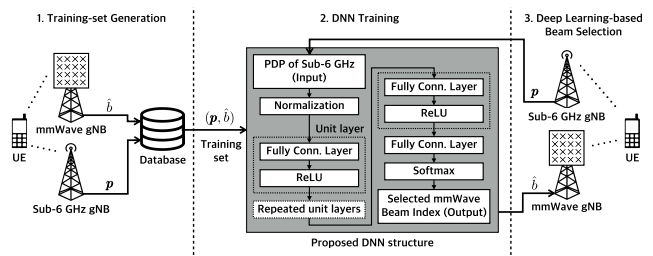

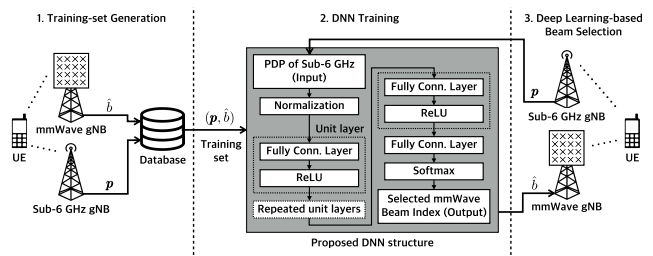

- Beam Selection / Management

- In mmWave and THz communications, beamforming is essential to overcome path loss.

- Traditional beam search methods involve scanning multiple beams, which is slow and inefficient.

- ML-based beam prediction models can infer the best beam direction based on historical data, UE movement patterns, and environmental factors.

- AI can be used for beam failure recovery—detecting when a beam is blocked and predicting alternative beams in real-time.

- Estimation of Channel Model Parameters

- ML models can be trained to estimate propagation parameters such as path loss exponent, Doppler shift, and delay spread more accurately than analytical models.

- Traditional channel models (e.g., Rayleigh, Rician, WINNER) are based on statistical assumptions, but ML-driven models can adapt to real-world conditions.

- AI-based channel prediction models are particularly useful in high-mobility scenarios like V2X (Vehicle-to-Everything) communication.

- Power Savings

- Power consumption is a critical issue in both UE (User Equipment) and network infrastructure.

- AI can optimize sleep mode scheduling for base stations, reducing power consumption during low-traffic periods.

- ML-based dynamic power scaling allows adaptive transmit power adjustments based on channel quality and QoS requirements.

- Energy-efficient AI algorithms are being explored for 6G networks, where power-hungry AI operations need to be optimized.

- Predictive Resource Allocation

- In wireless networks, spectrum and power resources need to be dynamically allocated based on demand and interference conditions.

- ML-based predictive models can forecast network congestion and pre-allocate resources to reduce latency.

- AI-driven traffic forecasting can help mobile operators proactively manage spectrum usage, reducing bottlenecks.

- Reinforcement learning algorithms can be used to optimize radio resource scheduling in real time.

- Admission Control

- Admission control determines whether a new UE connection request should be accepted or rejected based on network capacity.

- Traditional rule-based admission control struggles with dynamic traffic fluctuations and QoS (Quality of Service) constraints.

- ML models can predict network load and dynamically adjust admission thresholds to balance network capacity and QoS guarantees.

- AI can optimize multi-tenant network slicing in 5G/6G networks, ensuring fair resource allocation between different services.

- Congestion Control

- Network congestion can degrade user experience by increasing packet loss, jitter, and delays.

- AI-driven congestion control algorithms can analyze network traffic patterns and adjust scheduling parameters dynamically.

- ML-based congestion management can be integrated with 5G Core Network elements to optimize data flow control.

- AI-based TCP congestion control methods are being explored for low-latency applications, reducing bufferbloat and improving responsiveness.

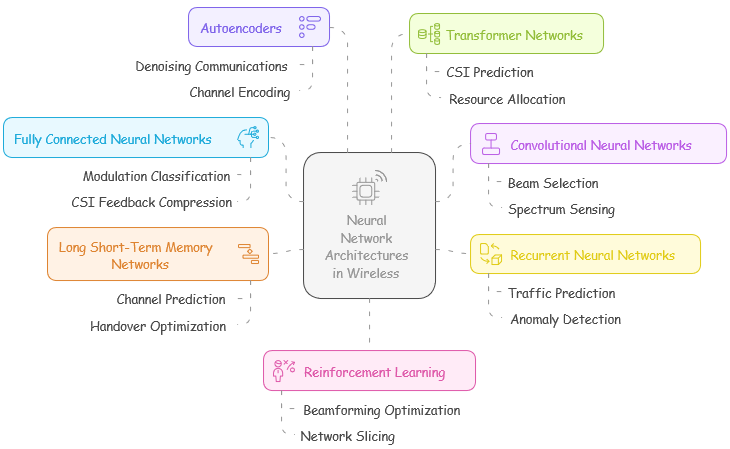

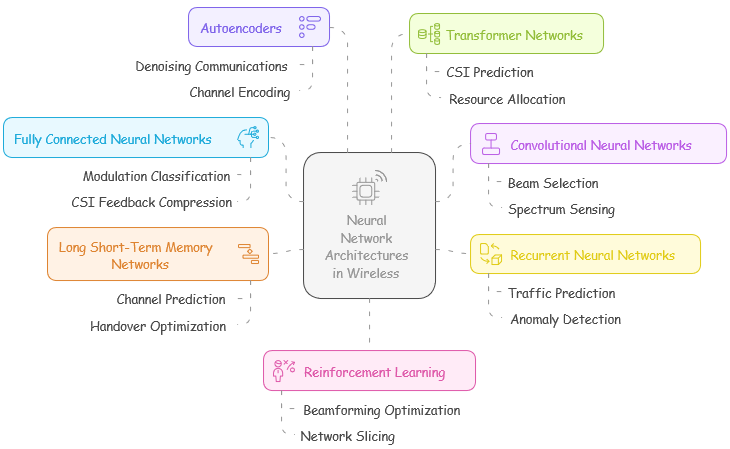

In terms of neural network architectures applied in the wireless domain, almost every well-known deep learning model has been explored in research. While these architectures may not be as complex as those used by big players like OpenAI, Google, Facebook, Tesla, or other AI-driven companies, they are still highly relevant for optimizing wireless systems. Below are the most frequently mentioned neural network architectures in the literature, along with their applications in wireless communications.

NOTE : The models and applications listed here are presented in a simplified manner for clarity. However, in real-world scenarios, AI/ML models used in wireless communication and network optimization are often not standalone solutions but rather hybrid architectures that combine multiple different models to achieve better performance, adaptability, and efficiency.

Fully Connected Neural Networks (FCNN)

- Description:

- Also known as Feedforward Neural Networks (FNN) or Multilayer Perceptrons (MLP), and built from what many frameworks call linear or dense layers, FCNNs consist of multiple layers of neurons where every neuron in a layer is connected to all neurons in the next layer.

- These networks are relatively simple and commonly used for classification, regression, and feature extraction tasks.

- Wireless Applications:

- Modulation Classification: FCNNs can be used for automatic modulation recognition (AMR) in non-cooperative wireless communications.

- CSI Feedback Compression: Fully connected layers are used to reduce the dimensionality of channel state information (CSI) before transmitting feedback.

- Resource Allocation: FCNNs can learn optimal power and spectrum allocation strategies in cellular networks.

- Link Adaptation: Predicting modulation and coding scheme (MCS) selection based on channel conditions.
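As a rough picture of what such a network computes, the following is a minimal sketch of the forward pass of a two-layer fully connected network with a softmax output, for example to score a handful of candidate classes (modulation types, MCS indices and so on). The layer sizes and the random, untrained weights are assumptions for illustration only.

```python
import numpy as np

def relu(x):
    return np.maximum(x, 0.0)

def softmax(x):
    # Subtract the row maximum for numerical stability.
    shifted = x - x.max(axis=-1, keepdims=True)
    e = np.exp(shifted)
    return e / e.sum(axis=-1, keepdims=True)

def fcnn_forward(x, params):
    """Forward pass: dense -> ReLU -> dense -> softmax."""
    w1, b1, w2, b2 = params
    hidden = relu(x @ w1 + b1)
    return softmax(hidden @ w2 + b2)

rng = np.random.default_rng(2)
num_features, num_hidden, num_classes = 8, 16, 4  # hypothetical sizes
params = (
    0.1 * rng.standard_normal((num_features, num_hidden)), np.zeros(num_hidden),
    0.1 * rng.standard_normal((num_hidden, num_classes)), np.zeros(num_classes),
)
batch = rng.standard_normal((5, num_features))  # 5 input feature vectors
probs = fcnn_forward(batch, params)
print(probs.shape)
```

Each row of `probs` is a probability distribution over the classes; training (not shown) would adjust the weights so that the correct class gets the highest probability.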

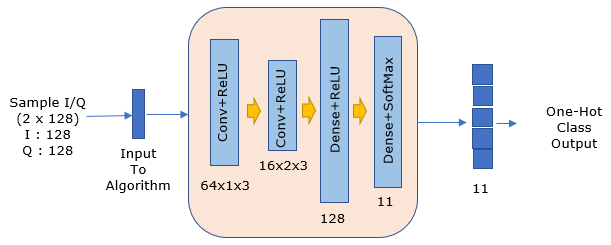

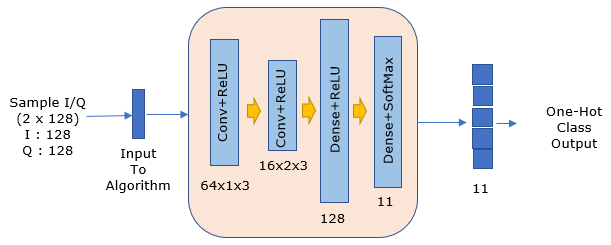

Convolutional Neural Networks (CNN)

- Description:

- CNNs are primarily used in image and signal processing, where convolutional filters extract spatial patterns from input data.

- They perform well in structured grid-like data (e.g., images, spectrograms) and are widely used for feature extraction and pattern recognition.

- Wireless Applications:

- Modulation Classification: CNNs can classify received signal waveforms based on spectrogram representations.

- Beam Selection: CNNs can process angle-of-arrival (AoA) maps to predict the optimal beam in massive MIMO.

- Spectrum Sensing: CNNs help identify unused spectrum by analyzing radio frequency (RF) spectrograms.

- Interference Detection: CNNs can detect and classify jamming or interference sources in dynamic spectrum environments.
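Several of the CNN use cases above take a spectrogram as input. The sketch below shows only that input preparation step (a short-time FFT over complex samples), not the CNN itself. The FFT size, hop size and the synthetic single-tone signal are assumptions for illustration.

```python
import numpy as np

def spectrogram(samples, fft_size=64, hop=32):
    """Power spectrogram of complex samples, shape (num_frames, fft_size)."""
    window = np.hanning(fft_size)
    num_frames = 1 + (len(samples) - fft_size) // hop
    frames = np.stack(
        [samples[i * hop : i * hop + fft_size] * window for i in range(num_frames)]
    )
    # fftshift puts frequency zero at the center column.
    spectrum = np.fft.fftshift(np.fft.fft(frames, axis=1), axes=1)
    return np.abs(spectrum) ** 2

rng = np.random.default_rng(3)
n = 1024
tone_bin = 8  # hypothetical occupied frequency bin
signal = np.exp(2j * np.pi * tone_bin / 64 * np.arange(n))
noise = 0.1 * (rng.standard_normal(n) + 1j * rng.standard_normal(n))

spec = spectrogram(signal + noise)
peak_bins = spec.argmax(axis=1)
print(spec.shape, peak_bins[:5])
```

The resulting 2D array can be treated like a single-channel image, which is what makes convolutional layers a natural fit for spectrum sensing and interference detection.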

Recurrent Neural Networks (RNN)

- Description:

- RNNs are specialized for sequential data processing, as they maintain an internal state that allows them to learn temporal dependencies in data.

- Unlike feedforward networks, RNNs have loop connections, enabling them to process variable-length sequences.

- Wireless Applications:

- Traffic Prediction: Predicting network congestion and data traffic variations over time.

- Time-Series Forecasting: Estimating channel conditions or wireless link quality based on past observations.

- User Mobility Prediction: Predicting handover decisions by analyzing historical user movement data.

- Anomaly Detection: Detecting security threats in network traffic by learning normal communication patterns.

Long Short-Term Memory Networks (LSTM)

- Description:

- LSTMs are a specialized type of RNN that addresses the vanishing gradient problem, allowing them to capture long-term dependencies in time-series data.

- They use gates (input, forget, output) to regulate information flow, making them more effective than standard RNNs for long sequences.

- Wireless Applications:

- Channel Prediction: LSTMs predict future channel state variations based on previous CSI values.

- Energy Consumption Forecasting: Predicting battery drain rates in IoT and mobile devices.

- Handover Optimization: LSTM models predict when a UE should switch to another base station based on mobility and signal quality trends.

- Network Fault Detection: Identifying network failures before they impact service quality by analyzing historical logs.
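The gate mechanism described above can be written out as a single LSTM time step. This is a minimal sketch using one common formulation of the cell; the weight shapes, the gate ordering inside the stacked matrices, the random untrained weights and the synthetic channel-gain sequence are assumptions for illustration.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def lstm_step(x, h_prev, c_prev, w, u, b):
    """One LSTM step. w: (4H, D), u: (4H, H), b: (4H,).
    Assumed gate order in the stacked matrices: input, forget, output, candidate."""
    hidden = h_prev.shape[0]
    z = w @ x + u @ h_prev + b
    i = sigmoid(z[0:hidden])                   # input gate
    f = sigmoid(z[hidden:2 * hidden])          # forget gate
    o = sigmoid(z[2 * hidden:3 * hidden])      # output gate
    g = np.tanh(z[3 * hidden:4 * hidden])      # candidate cell update
    c = f * c_prev + i * g                     # new cell state
    h = o * np.tanh(c)                         # new hidden state
    return h, c

rng = np.random.default_rng(5)
input_dim, hidden_dim = 1, 8
w = 0.1 * rng.standard_normal((4 * hidden_dim, input_dim))
u = 0.1 * rng.standard_normal((4 * hidden_dim, hidden_dim))
b = np.zeros(4 * hidden_dim)

# Hypothetical history of channel gain samples fed in one at a time.
csi_history = np.abs(np.sin(0.2 * np.arange(20)))
h = np.zeros(hidden_dim)
c = np.zeros(hidden_dim)
for gain in csi_history:
    h, c = lstm_step(np.array([gain]), h, c, w, u, b)
print(h.shape, c.shape)
```

In a channel predictor, the final hidden state `h` would be passed through an output layer (not shown) to produce the predicted next CSI value.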

Autoencoders (AE)

- Description:

- Autoencoders are unsupervised learning models designed for feature compression and reconstruction.

- They consist of an encoder-decoder architecture, where the encoder compresses input data into a lower-dimensional latent space, and the decoder reconstructs the original input.

- Wireless Applications:

- CSI Feedback Compression: Autoencoders reduce the overhead in 5G massive MIMO CSI feedback, improving feedback efficiency.

- Anomaly Detection: Identifying network attacks and malfunctions by detecting deviations from normal patterns.

- Denoising Communications: Removing noise from received signals by learning to reconstruct clean versions of noisy inputs.

- Channel Encoding: Autoencoders can act as learned channel encoders and decoders, replacing traditional coding techniques.
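The encoder/decoder idea behind CSI feedback compression can be sketched without a deep learning framework. The example below uses PCA (via SVD) as a stand-in: it is the solution that a purely linear autoencoder trained with squared-error loss converges to, up to an invertible transform of the latent space. It is not a deep autoencoder and not the method of any referenced paper. The dimensions and the synthetic real-valued "CSI" vectors lying near a low-dimensional subspace are assumptions.

```python
import numpy as np

rng = np.random.default_rng(4)
num_samples, csi_dim, latent_dim = 200, 32, 4  # hypothetical sizes

# Synthetic "CSI" vectors that lie close to a 4-dimensional subspace.
latent_true = rng.standard_normal((num_samples, latent_dim))
mixing = rng.standard_normal((latent_dim, csi_dim))
csi = latent_true @ mixing + 0.01 * rng.standard_normal((num_samples, csi_dim))

# "Training": take the top principal directions as linear encoder/decoder weights.
mean = csi.mean(axis=0)
_, _, vt = np.linalg.svd(csi - mean, full_matrices=False)
encoder = vt[:latent_dim].T   # (csi_dim, latent_dim), would run on the UE side
decoder = vt[:latent_dim]     # (latent_dim, csi_dim), would run on the base station side

codeword = (csi - mean) @ encoder          # compressed feedback, 4 values instead of 32
reconstructed = codeword @ decoder + mean  # recovered CSI
nmse = float(np.sum((csi - reconstructed) ** 2) / np.sum(csi ** 2))
print(codeword.shape, nmse)
```

A nonlinear autoencoder plays the same two roles (encoder at the UE, decoder at the base station) but can exploit structure that a linear projection cannot.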

Transformer Networks

- Description:

- Originally developed for natural language processing (NLP), Transformers use self-attention mechanisms to capture long-range dependencies in data.

- Unlike LSTMs, they process all positions of a sequence in parallel rather than step by step, which makes training on large datasets efficient.

- In wireless systems, they are particularly useful for tasks requiring global context awareness.

- Wireless Applications:

- CSI Prediction & Feedback

- Transformers can model channel state variations more effectively than traditional deep learning models.

- They capture long-range dependencies in channel conditions using self-attention.

- Improves MIMO beamforming and precoding optimizations.

- Beam Selection and Beamforming Optimization

- Self-attention mechanisms can learn optimal beam directions based on historical channel conditions.

- Used in 6G beam prediction to reduce overhead in beam search.

- Massive MIMO Precoding

- Helps in large-scale antenna systems by efficiently mapping input channel matrices to precoding weights.

- Some papers report better accuracy and computational efficiency than conventional deep learning models.

- Predictive Resource Allocation

- Transformers can anticipate network congestion and optimize resource allocation in real time.

- Used for scheduling wireless traffic in large-scale networks.

- Wireless Traffic Forecasting

- Captures long-term trends in network load variations to optimize data flow.

- More effective than LSTMs in handling large datasets with multi-hop dependencies.
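The self-attention mechanism that these applications rely on can be sketched in a few lines. This is a minimal single-head scaled dot-product attention over a short sequence of feature vectors (for example, CSI snapshots over time); the dimensions and the random, untrained projection matrices are assumptions for illustration.

```python
import numpy as np

def softmax(x, axis=-1):
    shifted = x - x.max(axis=axis, keepdims=True)
    e = np.exp(shifted)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(x, wq, wk, wv):
    """Single-head scaled dot-product self-attention over a sequence x of shape (T, D)."""
    q, k, v = x @ wq, x @ wk, x @ wv
    # Every time step scores every other time step in one matrix product.
    scores = q @ k.T / np.sqrt(k.shape[-1])
    weights = softmax(scores, axis=-1)
    return weights @ v, weights

rng = np.random.default_rng(7)
seq_len, feat_dim, attn_dim = 6, 4, 3  # hypothetical sizes
csi_sequence = rng.standard_normal((seq_len, feat_dim))
wq, wk, wv = (rng.standard_normal((feat_dim, attn_dim)) for _ in range(3))

context, weights = self_attention(csi_sequence, wq, wk, wv)
print(context.shape, weights.shape)
```

Row `t` of `weights` shows how much each time step contributes to the output at step `t`, which is how long-range dependencies are captured without recurrence.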

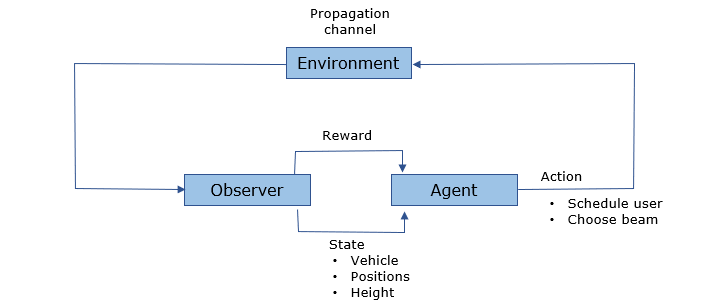

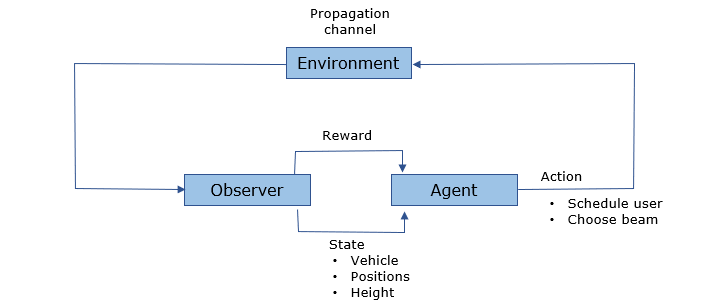

Reinforcement Learning (RL)

- Description:

- RL is a decision-making framework where an agent interacts with an environment, learning to maximize cumulative rewards over time.

- It is particularly useful in dynamic and uncertain environments.

- Wireless Applications:

- Beamforming Optimization: RL-based models can dynamically adjust beam directions in mmWave networks.

- Resource Allocation: RL agents optimize power, spectrum, and bandwidth allocation for different users.

- Handover Management: RL helps optimize handover decisions by balancing latency, interference, and signal strength.

- Network Slicing: RL is used in 5G network slicing to allocate virtualized network resources based on real-time demand.

- Adaptive Modulation and Coding (AMC): RL can learn the optimal modulation and coding scheme under varying channel conditions.
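The agent/environment/reward loop can be illustrated with the simplest stateless RL setting, a multi-armed bandit with an epsilon-greedy policy, applied here to beam selection. This is a sketch under assumptions: the per-beam average rewards in `mean_snr_db`, the noise model and the hyperparameters are invented for illustration, and a real RL agent would also condition on state (for example UE position or past measurements).

```python
import numpy as np

rng = np.random.default_rng(6)
# Hypothetical environment: average reward (e.g. SNR in dB) of each candidate beam.
mean_snr_db = np.array([5.0, 12.0, 8.0, 3.0])
num_beams = len(mean_snr_db)
epsilon, num_steps = 0.1, 2000

value_estimate = np.zeros(num_beams)     # running average reward per beam
pull_count = np.zeros(num_beams, dtype=int)

for _ in range(num_steps):
    if rng.random() < epsilon:
        beam = int(rng.integers(num_beams))      # explore: try a random beam
    else:
        beam = int(np.argmax(value_estimate))    # exploit: use the best beam so far
    reward = mean_snr_db[beam] + rng.standard_normal()  # noisy measurement
    pull_count[beam] += 1
    # Incremental update of the sample mean for the chosen beam.
    value_estimate[beam] += (reward - value_estimate[beam]) / pull_count[beam]

best_beam = int(np.argmax(value_estimate))
print(best_beam, pull_count)
```

The agent ends up spending most of its trials on the strongest beam while still sampling the others occasionally, which is the exploration/exploitation trade-off that the full RL formulations above have to manage.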

Most of the examples shown here are from various papers or articles that I read; it doesn't necessarily mean that they are actually used in real applications. I am just trying to gather various ideas and get myself familiar with Machine Learning applications to the wireless communication protocol stack.

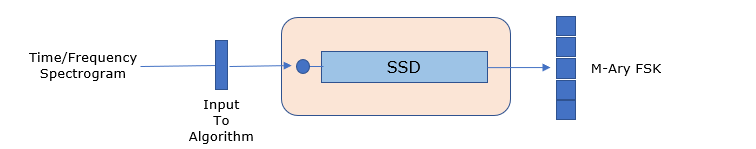

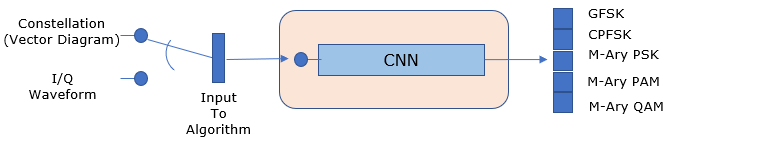

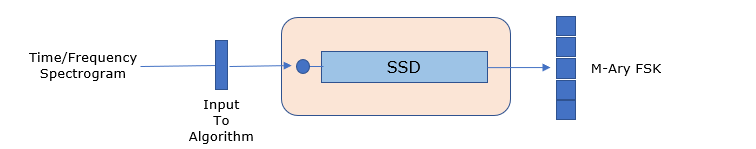

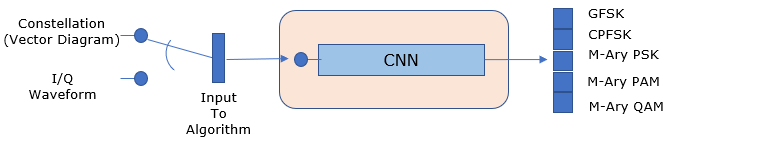

Case 1 : Application of Machine Learning for Modulation Classification. The following two diagrams are a summary of the model suggested in Ref 1.

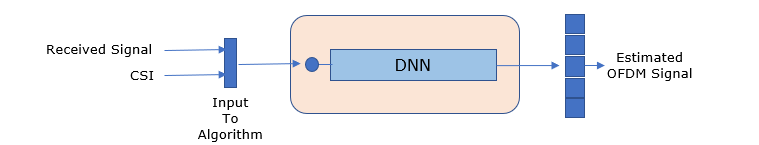

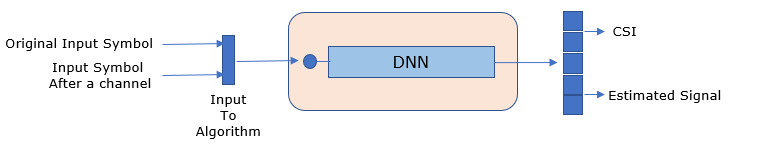

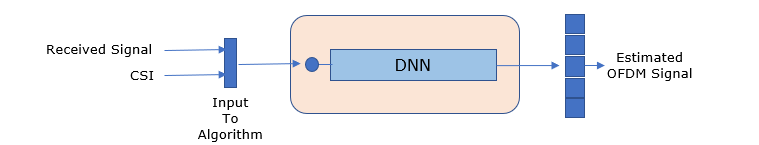

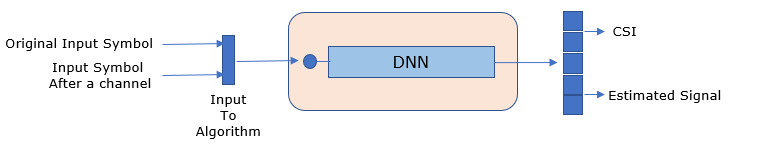

Case 2 : Application of Machine Learning for 5G Physical Layer. The following three diagrams are a summary of the model suggested in Ref 6.

Case 3 : Application of Machine Learning for mmMIMO operation and Beam Selection. Following is an example of reinforcement learning for mmMIMO operation from Ref 7.

Case 4 : Application of Machine Learning for Modulation Recognition. Following is an example of a CNN for modulation identification from Ref 8.

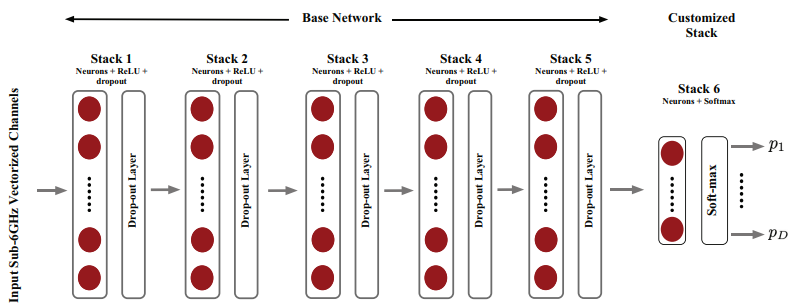

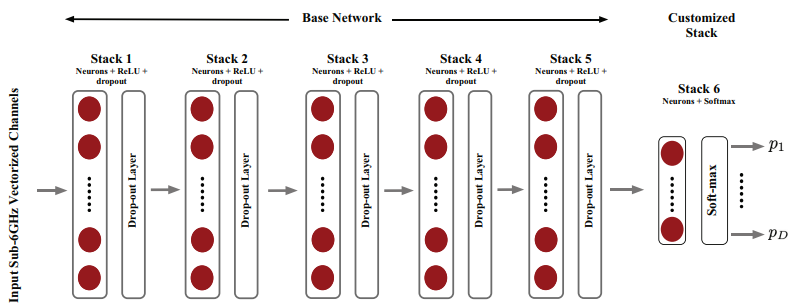

Case 5 : Application of Machine Learning for 5G Beam Selection. Following is an example of using multiple layers of FC (Fully Connected) network for 5G Beam Selection (Ref 13).

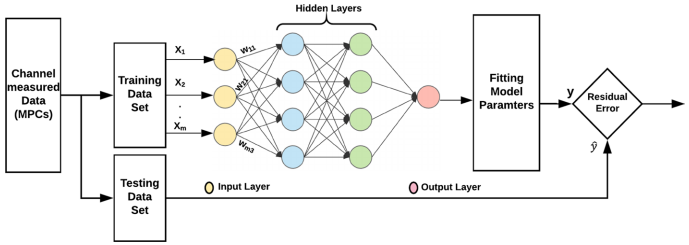

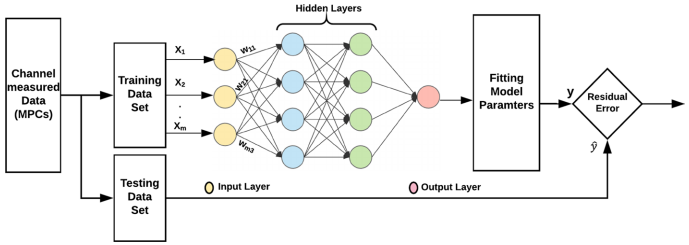

Case 6 : Application of Machine Learning for Channel Model. Following is an example of using multiple layers of FC (Fully Connected) network for estimating channel model parameters (Ref 14).

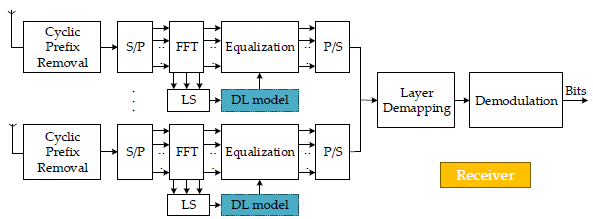

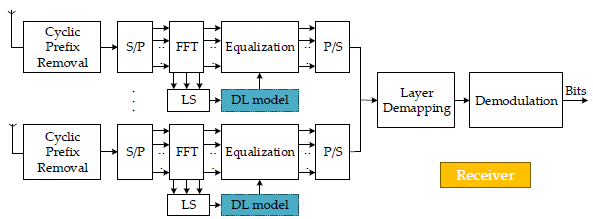

Case 7 : Application of Machine Learning for Equalization. Following is an example of channel estimation (Ref 16).

NOTE : The block labeled as 'DL model' is Machine Learning part

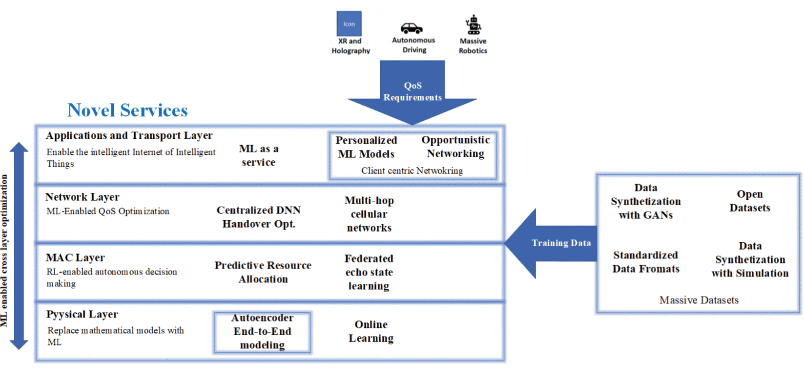

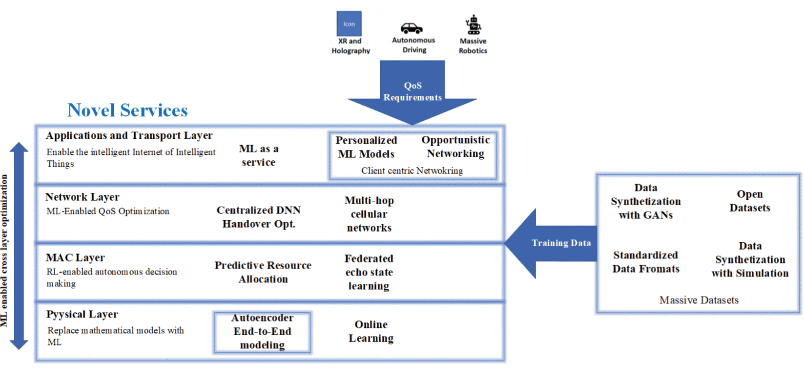

Case 8 : Machine learning models for each layer. Following is an example of potential Machine Learning applications and models in 6G wireless network systems (Ref 17).

Case 9 : Application of Machine Learning for mmWave Beam and Blockage Prediction. Following is an example of a Machine Learning application for predicting mmWave beams and blockage (Ref 19).

I am deeply curious about applying machine learning, particularly neural networks, to enhance the PHY layer and lower MAC of wireless communication systems. This exploration involves tackling a range of challenges, from ensuring model interpretability and making precise adjustments to deep neural networks, to addressing data acquisition, latency, and accuracy requirements in dynamic wireless environments. Other key considerations include safeguarding privacy and security, ensuring data availability, managing implementation complexity, and optimizing feature engineering and model selection to create robust, efficient solutions for real-world wireless communication.

- Data Acquisition for Training : For the training phase, we can easily generate the data with a software tool (e.g., Matlab) and convert it into a pictorial form like a vector diagram, eye diagram or spectrogram. But how can we expect a real wireless device to do the same thing ? It would require a huge additional cost and processing capability. It would be like carrying a VSA (Vector Signal Analyzer) or Digital Oscilloscope within the device.

- Data Availability for Training : One of the critical factors for the success of Machine Learning as we see it today (as of Dec 2019) is the availability of huge data sets, largely thanks to the internet, social networks etc. and to groups of dedicated experts. By the nature of Neural Networks/Deep Learning, a model cannot learn and produce any meaningful output without a huge set of training data. Now the question is 'Do we have a large enough data set to train a neural network for wireless communication PHY/MAC ?'

- Is it simple enough to be implemented with low energy ? : Even assuming that we have some means to resolve the issues mentioned above, how can we make the network simple enough to come up with the solution fast enough and with energy consumption low enough to be used in a mobile terminal (e.g., a mobile phone) ? ==> Recently (Sep 2021) I came across a YouTube video presenting some brilliant ideas for implementing Machine Learning with reduced power demand. See Pushing the boundaries of AI research at Qualcomm - Max Welling (University of Amsterdam & Qualcomm)

- Is it fast enough for real-time operation and the latency requirement ? : In most high-end wireless communication systems, the latency requirement is very tight. The factors affecting the latency requirement would be :

- The latency requirement for PHY procedures (e.g., TTI timing, HARQ response timing etc.)

- Coherence time of the radio channel

- Usually the latency requirement in the PHY layer of those high-end wireless systems is on a millisecond or sub-millisecond scale, meaning that the processing time of the machine learning algorithm has to fit into this time scale. ==> Refer to III. DEEP LEARNING AT THE PHYSICAL LAYER: SYSTEM REQUIREMENTS AND CHALLENGES of Ref [18]

- Can it meet the accuracy requirement ? : In most neural network applications, the requirement for the level of accuracy does not seem to be as strict as what is required for most high-end wireless physical layers (e.g., cellular communication). In wireless communication, the system is expected to give 0% BLER in relatively good channel conditions (or less than 10% even under relaxed criteria). For example, if we replace some PHY process (e.g., channel estimation, modulation detection etc.) with a neural network, would it give 0% BLER in good lab conditions (or less than 10% BLER in good live conditions) ?

- Feature Engineering : Wireless communication systems are complex and have many parameters that can affect the performance, and selecting the relevant features for the machine learning model can be difficult.

- Model interpretability: Some machine learning models, such as deep neural networks, can be difficult to interpret, which can make it challenging to understand the behavior of the model and make adjustments.

- Privacy and security: Wireless communication systems often handle sensitive data and applying machine learning to these systems can raise privacy and security concerns
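To get a feel for the time scales in the latency item above, here is a small back-of-the-envelope calculation of the channel coherence time. It uses the common rule of thumb Tc ≈ 0.423 / f_d, where f_d is the maximum Doppler shift. The UE speed, carrier frequency and the `inference_time_s` figure are hypothetical values picked only for illustration.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s

def max_doppler_hz(speed_mps, carrier_hz):
    """Maximum Doppler shift f_d = v * f_c / c."""
    return speed_mps * carrier_hz / SPEED_OF_LIGHT

def coherence_time_s(doppler_hz):
    """Rule-of-thumb coherence time Tc ~= 0.423 / f_d."""
    return 0.423 / doppler_hz

speed_mps = 120 / 3.6   # hypothetical UE speed: 120 km/h
carrier_hz = 3.5e9      # hypothetical carrier frequency: 3.5 GHz

fd = max_doppler_hz(speed_mps, carrier_hz)
tc = coherence_time_s(fd)

inference_time_s = 0.2e-3  # hypothetical ML inference time per estimate
fits_budget = inference_time_s < tc

print(f"Doppler: {fd:.1f} Hz, coherence time: {tc * 1e3:.2f} ms, fits: {fits_budget}")
```

With these numbers the channel stays roughly constant for only about a millisecond, so any ML-based estimate has to be produced well within that window to still be useful.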

References

[1] Deep Learning Framework for Signal Detection and Modulation Classification (2019)

[2] Fast Deep Learning for Automatic Modulation Classification (2019)

[3] Automatic Modulation Recognition Using Deep Learning Architectures

[4] Modulation Classification with Deep Learning (MathWorks)

[5] GRCon18 - Advances in Machine Learning for Sensing and Communications Systems (YouTube, 2019)

[6] Deep Learning for Physical-Layer 5G Wireless Techniques: Opportunities, Challenges and Solutions (2019)

[7] 5G MIMO Data for Machine Learning: Application to Beam-Selection using Deep Learning (2018)

[8] Convolutional Radio Modulation Recognition Networks (2016)

[9] Machine Learning for Beam Based Mobility Optimization in NR (2017)

[10] TWS 18: Machine Learning for Context and Can ML/AI build better wireless systems? (2018)

[11] The Future of Wireless and What It Will Enable (2018)

[12] Deep Learning for Wireless Physical Layer: Opportunities and Challenges (2017)

[13] Deep Learning-Based mmWave Beam Selection for 5G NR/6G With Sub-6 GHz Channel Information: Algorithms and Prototype Validation (2020)

[14] Machine Learning for Wireless Communication Channel Modeling: An Overview (2019)

[15] Predicting the Path Loss of Wireless Channel Models Using Machine Learning Techniques in MmWave Urban Communications (2020)

[16] Machine Learning-Based 5G-and-Beyond Channel Estimation for MIMO-OFDM Communication Systems

[17] Machine Learning Techniques for 5G and Beyond (2021)

[18] Deep Learning at the Physical Layer: System Challenges and Applications to 5G and Beyond

[19] Deep Learning for mmWave Beam and Blockage Prediction Using Sub-6GHz Channels (2019)
