
Evolution of Algorithms / Challenges and Solutions

When I started studying neural networks and deep learning, most things seemed relatively clear, and a simple fully connected feedforward network seemed to work for every problem. But as I learned more, I realized that it is not as simple as I expected. I started seeing many different algorithmic tweaks and many different network structures:

- Tweaks such as the choice of transfer (activation) function and the choice of optimization algorithm.
- Structures such as fully connected networks, CNNs, and RNNs.

Then a question came to mind: why do we need to go through so many tweaks and choices? It turns out that there is no single "one-size-fits-all" algorithm. An algorithm that works well in one situation may not work well in another. Many different tricks have been invented to make neural networks work better across more diverse situations. As a result of these inventions, accumulated over a long period of time, we now have neural networks with a great many options to choose from.

Evolution of Models

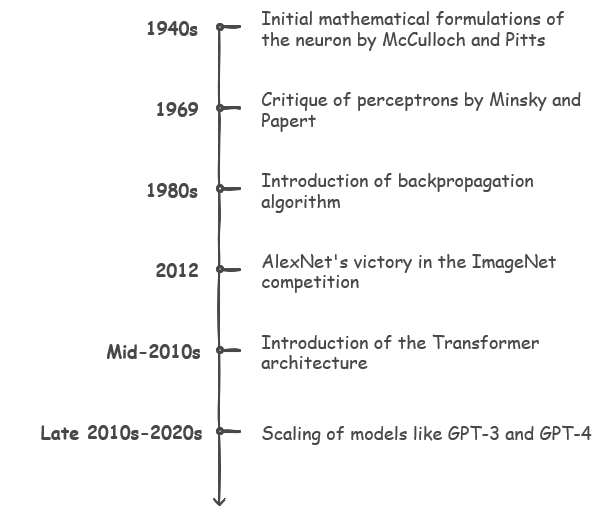

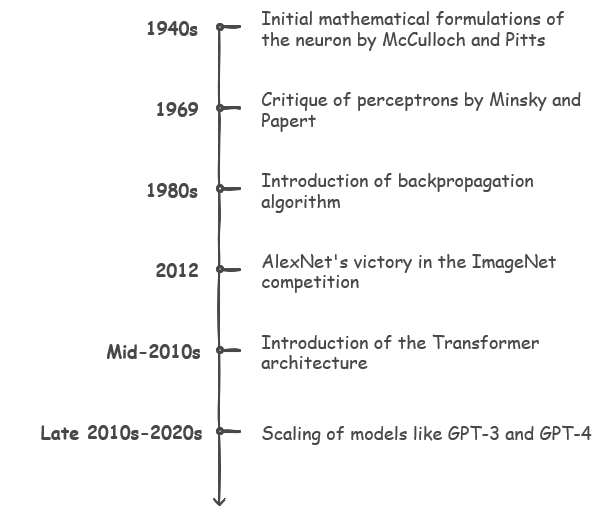

Neural networks have a rich history that spans from early theoretical developments in the 1940s to modern large-scale, multimodal models. Initial research on the mathematical modeling of neurons laid the groundwork for perceptrons in the 1950s, which introduced the idea of learning from data. Subsequent breakthroughs, such as backpropagation, convolutional neural networks, and recurrent architectures, demonstrated the potential of deeper, more complex models to handle tasks like computer vision and language processing. The resurgence of deep learning in the 2000s was fueled by advances in hardware (especially GPUs) and new training strategies. These propelled neural networks to state-of-the-art results in image classification, machine translation, and beyond. More recently, the introduction of Transformer-based models has revolutionized natural language processing. This has led to increasingly large "foundation models" that exhibit impressive capabilities in language understanding, generation, and multimodal tasks.

Some of the important milestones in the history of neural network model development are listed below:

- Foundational Era (1940s–1970s)
  - Initial mathematical formulations of the neuron (McCulloch–Pitts).
  - Perceptrons introduced as a simple learning algorithm but limited to linearly separable problems.
  - The field faced setbacks after critiques (e.g., Minsky & Papert, 1969) highlighted perceptron limitations.
- Backpropagation and Renewed Interest (1980s–1990s)
  - Backpropagation became the cornerstone for training multi-layer networks.
  - Hopfield networks and Boltzmann machines opened up new approaches to memory and generative modeling.
  - CNNs demonstrated success in computer vision (e.g., LeNet-5 for digit recognition).
- Deep Learning Renaissance (2000s–early 2010s)
  - Deep Belief Networks and breakthroughs in unsupervised pre-training paved the way for deeper architectures.
  - AlexNet's ImageNet victory in 2012 showcased the power of GPUs and deeper CNNs, igniting widespread adoption.
- Transformers and Attention (mid-2010s–present)
  - LSTM networks dominated sequence tasks until the Transformer's attention mechanism replaced recurrence.
  - The Transformer architecture revolutionized NLP (and later vision), leading to large-scale pre-trained models like BERT, GPT, and ViT.
- Scaling and Foundation Models (late 2010s–2020s)
  - Rapid scaling of model parameters (GPT-2, GPT-3, GPT-4) led to emergent capabilities and improved few-shot learning.
  - Multimodal models (DALL·E, Stable Diffusion) integrate language and vision, generating impressive text-to-image outputs.
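The perceptron's limitation to linearly separable problems can be seen directly in a minimal sketch of Rosenblatt-style perceptron learning. The sketch trains on two toy datasets:

- AND, which is linearly separable.
- XOR, which is not.

The following choices are assumptions made for illustration, not details taken from this page:

- a step activation,
- a learning rate of 1,
- zero initial weights,
- a 20-epoch training cap,
- the function names (`train_perceptron`, `predict`).

```python
# Minimal perceptron sketch (Rosenblatt-style update rule), pure Python.
# Illustrative assumptions: step activation, lr = 1, zero init, 20-epoch cap.

def step(z):
    """Threshold (Heaviside) activation: 1 if z >= 0, else 0."""
    return 1 if z >= 0 else 0

def predict(w, b, x):
    return step(w[0] * x[0] + w[1] * x[1] + b)

def train_perceptron(samples, epochs=20, lr=1.0):
    """Return (w, b, converged); converged is True if an epoch had no errors."""
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        errors = 0
        for x, target in samples:
            err = target - predict(w, b, x)   # -1, 0, or +1
            if err != 0:
                errors += 1
                # Shift the linear decision boundary toward the misclassified point.
                w[0] += lr * err * x[0]
                w[1] += lr * err * x[1]
                b += lr * err
        if errors == 0:
            return w, b, True
    return w, b, False

AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

w_and, b_and, ok_and = train_perceptron(AND)
w_xor, b_xor, ok_xor = train_perceptron(XOR)
print("AND converged:", ok_and)   # a separating line exists
print("XOR converged:", ok_xor)   # no single line separates XOR
```

No choice of weights lets a single linear threshold unit classify all four XOR points correctly, so training can never finish an error-free epoch. This is the kind of limitation that hidden layers, trained with backpropagation, later overcame.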

The following table gives a brief summary of neural network models.

| Model / Concept | Year | Description |
|---|---|---|
| McCulloch–Pitts Neuron | 1943 | Proposed one of the first mathematical models of a neuron, establishing the idea that neurons could be represented by simple threshold logic. |
| Perceptron (Frank Rosenblatt) | 1958 | Single-layer neural network for binary classification tasks. Laid the groundwork for neural network research. |
| MLP (Multi-Layer Perceptron) | 1980s | A feedforward neural network with one or more hidden layers and non-linear activation functions, forming the basis for many modern neural network architectures used in classification and regression tasks. |
| Hopfield Network (John Hopfield) | 1982 | A form of recurrent neural network with binary threshold nodes that serves as a content-addressable memory system. |
| Boltzmann Machines (Hinton & Sejnowski) | 1985 | Stochastic recurrent neural networks capable of learning internal representations; a precursor to more advanced energy-based and deep generative models. |
| Backpropagation (Werbos / Rumelhart, Hinton, Williams) | 1974/1986 | Algorithm for training multi-layer neural networks by propagating errors backward. Werbos proposed it in 1974; Rumelhart, Hinton, and Williams popularized it in 1986. |
| Recurrent Neural Network (RNN) (Rumelhart, Hinton, Williams) | 1986 | A class of neural networks designed for sequential data processing. Introduced by Rumelhart, Hinton, and Williams, RNNs allow information to persist across time steps, making them useful for speech recognition, time series prediction, and natural language processing. |
| Autoencoder (LeCun, Hinton, Rumelhart, etc.) | 1987 | A type of neural network used for unsupervised learning, designed to encode input data into a compressed representation and then decode it back. Used in feature learning, anomaly detection, and dimensionality reduction. |
| Convolutional Neural Networks (CNN) | 1989 | Conceptual foundations laid by Yann LeCun and others (e.g., using backpropagation for handwritten digit recognition). Convolutions exploit spatial locality in data; primarily used in computer vision tasks. |
| LeNet-5 (Yann LeCun) | 1998 | A pioneering CNN architecture for digit recognition (MNIST). Demonstrated the power of convolutional networks in a practical application. |
| Long Short-Term Memory (LSTM) (Hochreiter & Schmidhuber) | 1997 | A type of recurrent neural network (RNN) designed to overcome the vanishing gradient problem in sequence modeling, enabling longer-range dependencies. |
| Deep Belief Networks (DBN) (Hinton et al.) | 2006 | Stacked probabilistic generative models that helped spark renewed interest in deep learning, showing how to pre-train deep neural networks effectively. |
| AlexNet (Krizhevsky, Sutskever, Hinton) | 2012 | CNN that famously won the ImageNet competition, ushering in the "deep learning revolution." Demonstrated the effectiveness of GPU-accelerated training and deeper architectures. |
| Generative Adversarial Networks (GAN) (Goodfellow et al.) | 2014 | Consists of a generator and a discriminator in a minimax game framework. Capable of producing realistic images, audio, and other data. |
| Neural Machine Translation (Seq2Seq, Sutskever et al.) | 2014 | Introduced sequence-to-sequence modeling with LSTM-based encoders and decoders, vastly improving machine translation quality and general sequence transformation tasks. |
| ResNet (He, Zhang, Ren, Sun) | 2015 | Introduced residual (skip) connections to address vanishing gradients in very deep networks. Won the ImageNet 2015 competition and became a backbone for many vision tasks. |
| Deep Reinforcement Learning (DQN, AlphaGo) | 2013–2016 | Integration of deep learning with reinforcement learning to enable agents to learn optimal policies in complex environments. Key milestones include Deep Q-Networks (DQN) and AlphaGo, which demonstrated superhuman performance in tasks such as video game playing and board games. |
| Transformer (Vaswani et al.) | 2017 | Replaced recurrence and convolutions with attention mechanisms. Revolutionized natural language processing, enabling parallelization and better handling of long-range dependencies. |
| Physics-Informed Neural Networks (PINNs) | 2017 | Neural networks that incorporate physical laws and constraints into the learning process to solve differential equations and simulate physical systems, bridging deep learning with scientific computing. |
| BERT (Devlin et al.) | 2018 | Bidirectional Encoder Representations from Transformers. Pre-trained on large text corpora with masked language modeling, setting new benchmarks in NLP tasks. |
| GPT (Radford et al.) | 2018 | The first Generative Pre-trained Transformer. Showed that large-scale pre-training with Transformers could achieve strong results on various language tasks without task-specific architectures. |
| GPT-2 (OpenAI) | 2019 | Scaled-up version of GPT with more parameters and stronger language modeling capabilities. Its release prompted discussion of responsible publication due to potential misuse. |
| GPT-3 (OpenAI) | 2020 | Significantly larger Transformer-based language model (175B parameters). Showed impressive zero-shot and few-shot learning capabilities across a variety of language tasks. |
| AlphaFold | 2020 | A deep learning model for protein structure prediction that leverages attention mechanisms and supervised learning to accurately predict 3D protein structures from amino acid sequences. |
| Vision Transformer (ViT) (Dosovitskiy et al.) | 2020 | Applied the Transformer architecture directly to image patches, achieving state-of-the-art results in image classification and highlighting the versatility of attention mechanisms beyond language. |
| DALL·E (OpenAI) | 2021 | Transformer-based model capable of generating images from text descriptions, further illustrating the potential of multimodal generative models. |
| Stable Diffusion (CompVis / Stability AI) | 2022 | Latent diffusion model enabling high-quality text-to-image generation with more accessible compute requirements than earlier large-scale generative models. |
| GPT-4 (OpenAI) | 2023 | Multimodal large language model with improved reasoning and steerability, continuing the trend of scaling Transformer-based architectures for more advanced generative AI applications. |
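The Transformer row says attention replaced recurrence and convolution. The core operation is scaled dot-product attention, softmax(Q·Kᵀ / √d_k)·V.

The pure-Python sketch below computes it for tiny hand-made matrices. It is a simplification of a full Transformer layer:

- There is a single head.
- There are no learned projection matrices and no masking.
- The Q, K, and V values are made-up illustrations, not data from any model.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def matmul(a, b):
    """Multiply matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]

def transpose(m):
    return [list(row) for row in zip(*m)]

def scaled_dot_product_attention(Q, K, V):
    """Single-head attention: softmax(Q K^T / sqrt(d_k)) V.

    Q: n_q x d_k, K: n_k x d_k, V: n_k x d_v.
    Returns (outputs n_q x d_v, attention weights n_q x n_k).
    """
    d_k = len(K[0])
    scores = matmul(Q, transpose(K))
    # Each query gets a probability distribution over all keys, regardless of distance.
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, V), weights

Q = [[1.0, 0.0]]                 # one query, most similar to the first key
K = [[1.0, 0.0], [0.0, 1.0]]
V = [[10.0, 0.0], [0.0, 10.0]]
out, w = scaled_dot_product_attention(Q, K, V)
print(w, out)
```

Every query attends to every key in one step, so distant positions interact directly instead of through a long recurrent chain. This is the property the table credits for better handling of long-range dependencies.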

Evolution of Milestone Algorithms

The development of deep learning models has been accompanied by the identification and resolution of numerous challenges that affect model performance and training efficiency. Over the years, researchers have proposed effective techniques to overcome issues such as vanishing gradients, slow convergence, and overfitting, all of which are common hurdles when working with neural networks. As models grew more complex, new methods were introduced to address these concerns, such as batch normalization, dropout, and advanced optimization techniques like Adam. Additionally, innovations like pooling layers in CNNs, recurrent connections in RNNs, and Transformer-based attention mechanisms have significantly enhanced model capabilities and performance. As the field continues to evolve, continued advances in model efficiency, training stability, and reduction of overfitting are critical to the further success and deployment of deep learning systems.
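Batch normalization is one of the techniques named above. Its training-time forward pass can be sketched in a few lines:

1. Normalize each feature with the mean and variance of the current mini-batch.
2. Rescale the result with a learnable scale `gamma` and shift `beta`.

This is a minimal sketch under stated assumptions:

- It covers the training-mode forward pass only. It omits the running statistics used at inference and the backward pass.
- `eps = 1e-5` and the toy batch are illustrative choices.

```python
import math

def batch_norm(batch, gamma, beta, eps=1e-5):
    """Training-mode batch normalization of an N x D batch (lists of rows).

    Each feature column is normalized with the mini-batch mean and biased
    variance, then scaled by gamma[j] and shifted by beta[j].
    """
    n, d = len(batch), len(batch[0])
    out = [[0.0] * d for _ in range(n)]
    for j in range(d):
        col = [row[j] for row in batch]
        mean = sum(col) / n
        var = sum((x - mean) ** 2 for x in col) / n
        for i in range(n):
            x_hat = (col[i] - mean) / math.sqrt(var + eps)
            out[i][j] = gamma[j] * x_hat + beta[j]
    return out

# Two features on very different scales (toy data).
batch = [[1.0, 100.0], [2.0, 200.0], [3.0, 300.0], [4.0, 400.0]]
normalized = batch_norm(batch, gamma=[1.0, 1.0], beta=[0.0, 0.0])
for row in normalized:
    print([round(v, 3) for v in row])
```

After normalization, both features have mean about 0 and variance about 1 within the batch, even though their raw scales differ by a factor of 100. This keeps the inputs to the next layer in a consistent range during training.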

Over the years, neural network researchers have faced a range of obstacles in training deep models, including vanishing gradients, slow convergence, and overfitting. To overcome these hurdles, new algorithms and strategies emerged:

- ReLU activations to mitigate vanishing gradients.
- Optimizers with momentum and adaptive learning rates, such as Adam, to help avoid getting stuck in poor local minima.
- Batch normalization to stabilize training.

More recent techniques address further challenges, such as attention mechanisms for long-range dependencies and knowledge distillation for model compression. Together, these innovations address many of the core challenges in deep learning. The table below summarizes these issues and the corresponding solutions that have shaped the evolution of milestone algorithms.
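Why ReLU helps with vanishing gradients can be seen from the chain rule. The gradient reaching an early layer is a product of per-layer derivatives:

- The sigmoid's derivative is at most 0.25, so the product shrinks geometrically with depth.
- ReLU's derivative is exactly 1 for positive inputs, so the product does not shrink.

This sketch deliberately simplifies a real network:

- Every layer has weight 1 and the same pre-activation `z = 0.5`, so only the activation derivatives matter.
- The helper `backprop_factor` is a hypothetical name used only for illustration.
- For negative inputs, ReLU's gradient is 0, a case the sketch does not exercise.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def sigmoid_grad(z):
    s = sigmoid(z)
    return s * (1.0 - s)          # maximum value is 0.25, reached at z = 0

def relu_grad(z):
    return 1.0 if z > 0 else 0.0  # 1 for positive inputs, 0 otherwise

def backprop_factor(grad_fn, depth, z=0.5):
    """Product of activation derivatives across `depth` layers.

    Simplifying assumption: unit weights and the same pre-activation z in
    every layer, so the chain-rule product is grad_fn(z) ** depth.
    """
    factor = 1.0
    for _ in range(depth):
        factor *= grad_fn(z)
    return factor

for depth in (1, 5, 10, 20):
    print(f"depth={depth:2d}  sigmoid={backprop_factor(sigmoid_grad, depth):.3e}  "
          f"relu={backprop_factor(relu_grad, depth):.1f}")
```

With the sigmoid, the gradient factor after 20 layers is below 1e-12. Early layers therefore barely learn. With ReLU, the same factor stays at 1.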

The following table lists important techniques that were invented to handle some of the early problems of neural networks.

| Issue | Technique(s) to Solve the Issue |
|---|---|
| Vanishing Gradient | Replacing the classic sigmoid function with ReLU |
| Slow Convergence | Replacing classic (full-batch) GD (Gradient Descent) with SGD (Stochastic GD) |
| Fluctuation in Training Due to SGD | Replacing SGD with mini-batch SGD |
| Falling into Local Minima | Introducing momentum and adaptive learning rate methods (e.g., Momentum, AdaGrad, RMSProp, Adam) |
| Overfitting | Introducing early stopping, regularization, and dropout |
| Fully Connected Layers Too Large in CNNs | Introducing pooling layers to reduce the feature-map size before the fully connected layers |
| No Memory | Introducing RNNs (Recurrent Neural Networks) |
| Internal Covariate Shift | Using batch normalization to stabilize and speed up training |
| Vanishing/Exploding Gradients in Deep Networks | Adding residual (skip) connections or using gradient clipping to maintain stable gradients |
| Overfitting (Additional Methods) | Employing data augmentation, transfer learning, and cross-validation strategies |
| Unstable Training Due to Poor Initialization | Adopting modern weight initialization techniques (e.g., Xavier, He initialization) |
| Excessive Model Size | Applying pruning, quantization, or knowledge distillation to reduce parameters and memory usage |
| Difficulty Capturing Long-Range Dependencies | Using attention mechanisms or Transformer architectures to model global context |
| Catastrophic Forgetting (in Sequential Tasks) | Adopting continual learning approaches such as Elastic Weight Consolidation (EWC) or progressive networks |
| Hyperparameter Tuning Complexity | Leveraging AutoML methods, Bayesian optimization, or random search to find good hyperparameters |
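Adam is one of the adaptive methods listed in the table above. It keeps two running averages for each parameter:

- a first moment, the mean of the gradient,
- a second moment, the uncentered variance of the gradient.

Each step is scaled by the inverse square root of the second moment. This gives every parameter its own effective learning rate.

This is a minimal sketch, and several details are illustrative assumptions:

- `beta1 = 0.9`, `beta2 = 0.999`, and `eps = 1e-8` are the commonly used defaults.
- The learning rate `lr = 0.1`, the 500-step budget, and the toy objective f(x, y) = x² + 10y² were chosen for this example.
- The objective is convex, so the sketch shows only the update rule, not escaping local minima.

```python
import math

def adam_minimize(grad_fn, x0, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8, steps=500):
    """Minimize a function with the Adam update rule, given its gradient."""
    x = list(x0)
    m = [0.0] * len(x)   # running mean of gradients (first moment)
    v = [0.0] * len(x)   # running mean of squared gradients (second moment)
    for t in range(1, steps + 1):
        g = grad_fn(x)
        for i in range(len(x)):
            m[i] = beta1 * m[i] + (1 - beta1) * g[i]
            v[i] = beta2 * v[i] + (1 - beta2) * g[i] ** 2
            # Bias correction: m and v start at zero, so early averages are too small.
            m_hat = m[i] / (1 - beta1 ** t)
            v_hat = v[i] / (1 - beta2 ** t)
            # Per-parameter step, scaled by the inverse RMS of that parameter's gradients.
            x[i] -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return x

def f(p):
    """Toy ill-conditioned quadratic: much steeper along y than along x."""
    return p[0] ** 2 + 10 * p[1] ** 2

def grad_f(p):
    return [2 * p[0], 20 * p[1]]

start = [3.0, -2.0]
end = adam_minimize(grad_f, start)
print(f"f(start) = {f(start):.3f}, f(end) = {f(end):.6f}")
```

Because each coordinate is normalized by its own gradient history, the steep y direction and the shallow x direction make comparable progress. A single global learning rate would have to be small enough for the steep direction, which makes progress along the shallow one slow.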

